Algorithms And Mass Shootings: Holding Tech Companies Accountable

The Role of Algorithms in Radicalization and the Spread of Extremist Ideologies

Recommendation algorithms, designed to maximize user engagement and advertising revenue, can unintentionally contribute to the spread of extremist ideologies. This happens through several key mechanisms.

Echo Chambers and Filter Bubbles

Engagement-optimized algorithms often create echo chambers and filter bubbles: personalized online environments that reinforce pre-existing beliefs and limit exposure to diverse perspectives.

- Personalized content feeds: These prioritize content that matches a user's past preferences, which can lead users down rabbit holes of extremist ideologies. The more a user engages with extremist content, the more similar material the algorithm serves, creating a self-reinforcing cycle of radicalization.

- Lack of diversity in algorithms and datasets: Bias in the data used to train algorithms can lead to skewed recommendations, reinforcing harmful narratives and marginalizing counter-narratives. A lack of diverse perspectives in the design and development of algorithms also perpetuates this bias.

- Examples of algorithms: The specific algorithms used by social media platforms are proprietary, but the general mechanisms they share, such as recommendation systems and personalized content feeds, contribute to echo chambers and filter bubbles and can expose users to extremist content.
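To make the self-reinforcing cycle described above concrete, here is a toy simulation. It is a minimal sketch under loudly stated assumptions: the feed always shows the topic the user has engaged with most, the user always engages with whatever is shown, and the topic labels and function names (`recommend`, `simulate`, `top_share`) are hypothetical. Real platform ranking systems are proprietary and far more complex; the sketch only illustrates how a preference-driven feedback loop can narrow what a user sees.

```python
from collections import Counter


def recommend(history: Counter, topics: list) -> str:
    """Return the topic with the most past engagement.

    Hypothetical greedy ranking rule; ties go to the topic listed first.
    """
    return max(topics, key=lambda topic: history[topic])


def simulate(steps: int, topics: list, initial: Counter) -> Counter:
    """Run the feed for `steps` rounds and return the final engagement counts.

    Assumption: the user engages with every item shown, so each
    recommendation adds one unit of engagement to its own topic.
    """
    history = Counter(initial)
    for _ in range(steps):
        shown = recommend(history, topics)
        history[shown] += 1
    return history


def top_share(history: Counter) -> float:
    """Fraction of all engagement held by the single most-engaged topic."""
    total = sum(history.values())
    return max(history.values()) / total


if __name__ == "__main__":
    topics = ["topic_a", "topic_b", "topic_c"]
    start = Counter({"topic_a": 2, "topic_b": 2, "topic_c": 3})
    end = simulate(10, topics, start)
    print(dict(end))
    print(f"top-topic share: {top_share(start):.2f} -> {top_share(end):.2f}")
```

Starting from a slight lead (3 of 7 interactions), `topic_c` captures every one of the 10 recommendations and ends with 13 of 17, so its share rises from about 0.43 to about 0.76 while the other topics are never shown again. In this toy model, adding randomness or deliberately mixing in other topics is one way to weaken the loop.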

Algorithmic Amplification of Hate Speech and Misinformation

Automated moderation systems often fail to identify and remove hate speech and misinformation, while ranking algorithms can push that content to far larger audiences than it would otherwise reach. Together, these failures allow harmful narratives to spread rapidly.

- Limitations of content moderation: Current content moderation techniques often rely on keyword filters and reactive measures, which are insufficient to address the sophisticated and evolving nature of hate speech and misinformation online.

- Speed of misinformation: Misinformation spreads rapidly online, and engagement-driven ranking can accelerate it further. False or misleading information related to violence can incite hatred and potentially inspire violent acts.

- Case studies: Numerous examples exist where algorithms amplified hate speech related to mass shootings, contributing to a climate of fear and intolerance. Analyzing these case studies reveals the urgent need for improved algorithmic oversight.
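The limitation of keyword filters noted above can be shown with a deliberately naive sketch. It assumes a blocklist holding a single hypothetical placeholder term (`forbiddenterm`) and a hypothetical `keyword_filter` function; it is not any platform's actual moderation system. Exact token matching misses trivial respellings and still flags posts that merely mention the term, such as news reports or counter-speech.

```python
import re

# Hypothetical placeholder term; a real blocklist would be far larger.
BLOCKLIST = {"forbiddenterm"}


def keyword_filter(text: str) -> bool:
    """Return True if any lowercase alphanumeric token exactly matches the blocklist."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return any(token in BLOCKLIST for token in tokens)


if __name__ == "__main__":
    samples = [
        "post containing forbiddenterm",          # exact match: flagged
        "post containing f0rbiddenterm",          # digit swap: missed
        "post containing forbidden term",         # split word: missed
        "news report criticizing forbiddenterm",  # counter-speech: flagged anyway
    ]
    for sample in samples:
        print(keyword_filter(sample), "|", sample)
```

The filter flags the first and last samples and lets both lightly obfuscated variants through. Normalizing common character substitutions would catch some evasions, but no amount of string matching can tell endorsement from criticism, which is the gap between keyword filters and context-aware moderation.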

The Connection Between Online Radicalization and Offline Violence

Although online radicalization is not a direct cause of offline violence, the connection between the two is undeniable, and algorithms play a significant role in facilitating the transition from one to the other.

The Online-to-Offline Transition

The internet and social media platforms have become breeding grounds for extremist ideologies. Algorithms facilitate the transition from online radicalization to offline violence in several ways.

- Online communities and forums: These serve as spaces where extremist groups recruit and radicalize new members and share violent rhetoric and strategies. Recommendation features can steer people who are searching for like-minded communities toward these harmful groups.

- Connecting isolated individuals: Algorithms can connect isolated individuals with extremist groups, giving them a sense of belonging and validation that can reinforce their extremist beliefs. This is particularly dangerous for people who feel alienated or marginalized.

- Examples of mass shooters: Several mass shootings have been linked to individuals who were radicalized online, demonstrating the real-world consequences of unchecked online extremism.

The Difficulty in Tracing the Causal Link

Establishing a direct causal link between algorithms and mass shootings is challenging. However, the observed correlation between algorithm-driven online radicalization and offline violence demands serious attention and investigation.

- Challenges of proving causality: Attributing specific acts of violence solely to algorithms is complex, as numerous social, psychological, and individual factors are involved. However, this complexity does not negate the role algorithms play in creating a permissive environment for extremism.

- Ethical considerations of assigning blame: Assigning blame to technology companies carries ethical implications that need careful consideration. Holding them accountable is necessary, but it must be done fairly and in proportion to the role their platforms actually played.

- Need for rigorous research: Further research is needed to better understand the intricate relationship between algorithms, online radicalization, and offline violence. This research should inform policy decisions and technological solutions.

Holding Tech Companies Accountable: Legal and Ethical Considerations

Holding tech companies accountable requires a multi-pronged approach, encompassing legal reform and a commitment from companies to prioritize safety and responsible innovation.

Legal Frameworks and Regulations

Current laws and regulations may need updating to address the unique challenges that algorithmic content distribution poses in the context of mass shootings.

- Potential legal avenues: Exploring legal avenues for holding tech companies accountable for their role in facilitating violence is crucial. This could include lawsuits based on negligence or complicity in the spread of harmful content.

- Section 230 of the Communications Decency Act: Section 230 largely shields online platforms from liability for content posted by their users. Its relevance to these cases, and whether it should be modified to provide better legal recourse against platforms that fail to adequately moderate harmful content, need careful consideration.

- Successful lawsuits: Examining successful lawsuits against tech companies for content moderation failures provides valuable precedents for future legal action.

Ethical Responsibilities of Tech Companies

Tech companies have an ethical responsibility to mitigate the risks associated with their algorithms.

- Transparency in algorithmic design: Greater transparency in the design and operation of algorithms is crucial to ensure accountability and allow for independent scrutiny.

- Improved content moderation: Investing in and developing more sophisticated AI for identifying and removing hate speech and misinformation is essential.

- Promoting responsible online behavior: Tech companies have a role in promoting media literacy and responsible online behavior among users, equipping them to navigate the complexities of the digital landscape.

Conclusion

The relationship between algorithms and mass shootings is complex but undeniable. While establishing direct causality is difficult, the correlation between algorithmic amplification of extremist ideologies and the occurrence of mass shootings demands urgent attention. Holding tech companies accountable requires a multi-faceted approach encompassing legal reform, ethical guidelines, and a commitment from tech companies to prioritize safety and responsible innovation. We must demand greater transparency and accountability from technology companies in how they develop and deploy algorithms, so that those algorithms do not inadvertently contribute to violence. Let's work together to ensure that algorithms are used responsibly and that tech companies do their part to curb the online radicalization that can lead to mass shootings.

Featured Posts

-

Mining Meaning From Messy Data An Ai Podcast Project

May 30, 2025

Mining Meaning From Messy Data An Ai Podcast Project

May 30, 2025 -

Uerduen Uen Gazze Den Kanser Hastasi Cocuklara Saglik Hizmeti Sunumu

May 30, 2025

Uerduen Uen Gazze Den Kanser Hastasi Cocuklara Saglik Hizmeti Sunumu

May 30, 2025 -

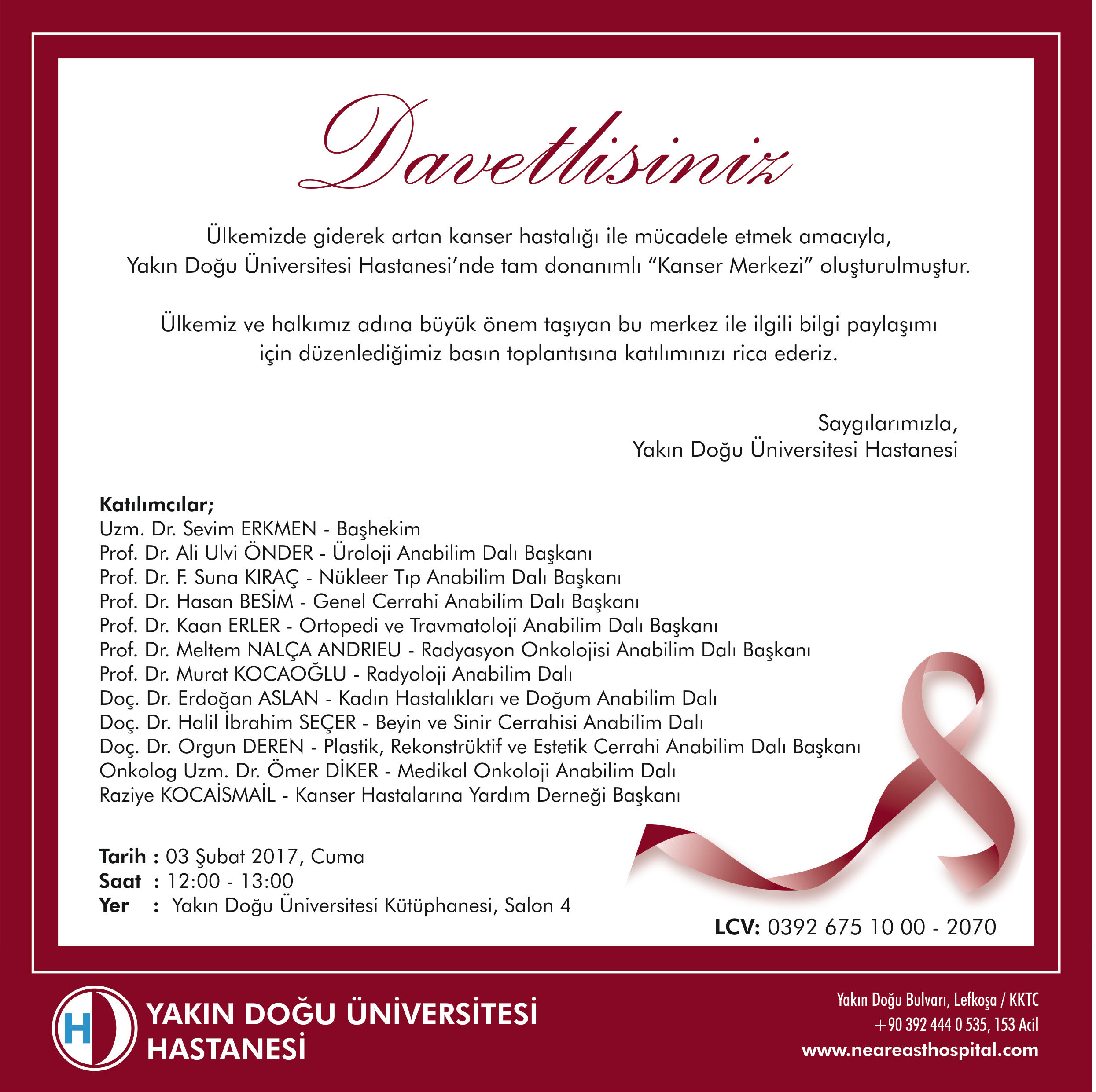

Red Tide Outbreak Emergency Warning Issued For Cape Cod

May 30, 2025

Red Tide Outbreak Emergency Warning Issued For Cape Cod

May 30, 2025 -

Exclusion De Marine Le Pen En 2027 Les Declarations Alarmantes De Laurent Jacobelli

May 30, 2025

Exclusion De Marine Le Pen En 2027 Les Declarations Alarmantes De Laurent Jacobelli

May 30, 2025 -

2025 Iowa State High School Track And Field Championships Complete Results May 5 22

May 30, 2025

2025 Iowa State High School Track And Field Championships Complete Results May 5 22

May 30, 2025

Latest Posts

-

Early Start To Fire Season In Canada And Minnesota What You Need To Know

May 31, 2025

Early Start To Fire Season In Canada And Minnesota What You Need To Know

May 31, 2025 -

Analyzing Team Victoriouss Chances At The Tour Of The Alps

May 31, 2025

Analyzing Team Victoriouss Chances At The Tour Of The Alps

May 31, 2025 -

Tour Of The Alps 2024 Team Victoriouss Bid For Success

May 31, 2025

Tour Of The Alps 2024 Team Victoriouss Bid For Success

May 31, 2025 -

Team Victoriouss Tour Of The Alps Strategy A Path To Victory

May 31, 2025

Team Victoriouss Tour Of The Alps Strategy A Path To Victory

May 31, 2025 -

Cycling Team Victorious Ready To Conquer The Tour Of The Alps

May 31, 2025

Cycling Team Victorious Ready To Conquer The Tour Of The Alps

May 31, 2025