Analyzing Thompson's Unlucky Monte Carlo Performance

Investigating Algorithmic Choices

The success of any Monte Carlo simulation hinges on the chosen algorithms. Thompson employed Markov Chain Monte Carlo (MCMC) methods, including the Metropolis-Hastings algorithm, which is itself an MCMC method. These are powerful techniques, but their effectiveness depends heavily on proper implementation and parameter tuning, and their suitability for the specific problem Thompson tackled requires careful scrutiny. Potential weaknesses include:

- Incorrect parameter settings leading to slow convergence: Poorly chosen parameters, such as the scale of the proposal distribution in Metropolis-Hastings, can make convergence extremely slow. A proposal that is too narrow accepts almost every move but explores the distribution in tiny steps. One that is too wide rejects most moves and leaves the chain stuck. Either way, estimates can stay inaccurate even after extensive computation. Choosing good parameters usually requires experimentation and careful monitoring of convergence diagnostics such as the acceptance rate and trace plots.

- Inefficient sampling techniques resulting in high variance: MCMC methods draw correlated samples from a complex probability distribution. When successive samples are strongly autocorrelated, the effective sample size is far smaller than the number of iterations, so the estimated quantities have high variance and the results become unreliable. Gradient-based samplers such as Hamiltonian Monte Carlo (HMC) or the No-U-Turn Sampler (NUTS) may have performed better in this specific case.

- Potential bugs or errors in the implementation of the algorithms: Even minor coding errors can significantly distort the results of Monte Carlo simulations, often without any visible failure. Rigorous testing and validation, for example running the sampler on a target distribution with known moments, are crucial to ensure the algorithms function correctly.
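
To make the proposal-scale problem concrete, here is a minimal Python sketch of random-walk Metropolis-Hastings. It is not Thompson's code. The standard normal target, the function names `metropolis_hastings` and `lag1_autocorrelation`, the seed, and the three step sizes are illustrative assumptions. For each step size the sketch reports two simple diagnostics of a badly tuned proposal: the acceptance rate and the lag-1 autocorrelation of the chain.

```python
import math
import random


def log_target(x: float) -> float:
    """Illustrative target (assumption): standard normal log-density, up to a constant."""
    return -0.5 * x * x


def metropolis_hastings(log_density, n_samples, step_size, x0=0.0, seed=0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal.

    Returns the chain and the fraction of proposals that were accepted.
    """
    rng = random.Random(seed)
    x, log_p = x0, log_density(x0)
    chain, accepted = [], 0
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step_size)
        log_p_new = log_density(proposal)
        # The proposal is symmetric, so the Hastings correction cancels and we
        # accept with probability min(1, p(proposal) / p(x)).
        u = 1.0 - rng.random()  # in (0, 1], so log(u) is always defined
        if math.log(u) < log_p_new - log_p:
            x, log_p = proposal, log_p_new
            accepted += 1
        chain.append(x)  # on rejection the current state is repeated
    return chain, accepted / n_samples


def lag1_autocorrelation(xs):
    """Sample lag-1 autocorrelation; values near 1 mean the chain mixes slowly."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((v - mean) ** 2 for v in xs)
    if var == 0.0:
        return 1.0  # chain never moved: treat it as perfectly correlated
    cov = sum((xs[i] - mean) * (xs[i + 1] - mean) for i in range(n - 1))
    return cov / var


for step in (0.05, 2.4, 50.0):
    chain, rate = metropolis_hastings(log_target, 20_000, step)
    print(f"step={step:>5}: acceptance={rate:.2f}, "
          f"lag-1 autocorrelation={lag1_autocorrelation(chain):.3f}")
```

With a tiny step, nearly every proposal is accepted but the chain barely moves. With a huge step, almost every proposal is rejected. In both cases the lag-1 autocorrelation stays close to 1. For a one-dimensional Gaussian target, a step of about 2.4 standard deviations (acceptance near 0.44) is a commonly cited rule of thumb. Real problems still need their own tuning and diagnostics.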

Data Quality and Preprocessing Impact

The quality of input data is paramount in Monte Carlo simulations. Even the most sophisticated algorithms will fail to produce reliable results if the underlying data is flawed. Thompson's data may have suffered from several issues:

- Presence of outliers or missing values affecting results: Outliers can disproportionately influence the simulation's outcomes, leading to biased estimates. Missing values add uncertainty and, when they are not missing at random, systematic error. Robust statistical methods capable of handling these issues should have been employed.

- Insufficient data size leading to inaccurate estimates: Monte Carlo methods rely on the law of large numbers, under which sample averages converge to the true expectation as the sample grows. For N independent samples, the standard error shrinks in proportion to 1/√N. Too little data therefore produces inaccurate estimates with wide confidence intervals, undermining the reliability of the results. A power analysis before running the simulation could have determined the required sample size.

- Bias in the data influencing the simulation outcomes: Biased input data will inevitably lead to biased results. Careful examination of data collection methods and potential sources of bias is crucial to ensure data integrity. Thompson should have applied appropriate data cleaning and preprocessing techniques, potentially including data transformations to mitigate bias.
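
A common robust approach drops missing entries and flags outliers using the median absolute deviation (MAD). The mean and standard deviation are poor choices here because the outliers themselves distort them. The Python sketch below illustrates this. The function name `clean_inputs`, the sample readings, and the 3.5 cutoff on the modified z-score (a widely used threshold) are assumptions for illustration, not Thompson's actual pipeline.

```python
import math
import statistics


def clean_inputs(values, threshold=3.5):
    """Drop missing values, then split the rest into kept values and outliers.

    Outliers are flagged with the modified z-score 0.6745 * (x - median) / MAD,
    which, unlike a mean/standard-deviation z-score, is not dragged around by
    the very outliers it is trying to detect.
    """
    present = [v for v in values if v is not None and not math.isnan(v)]
    med = statistics.median(present)
    mad = statistics.median(abs(v - med) for v in present)
    if mad == 0:
        # At least half the values equal the median, so the score is undefined;
        # keep everything rather than guess.
        return present, []
    kept, outliers = [], []
    for v in present:
        score = 0.6745 * (v - med) / mad
        (outliers if abs(score) > threshold else kept).append(v)
    return kept, outliers


# Hypothetical readings with two missing entries and one suspicious value.
raw = [10.2, 9.8, None, 10.5, float("nan"), 9.9, 10.1, 55.0]
kept, outliers = clean_inputs(raw)
print(kept)      # [10.2, 9.8, 10.5, 9.9, 10.1]
print(outliers)  # [55.0]
```

The function returns flagged points rather than silently deleting them, so they can be inspected. Whether to drop, cap, or explicitly model them depends on the problem.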

Random Number Generator (RNG) Analysis

The Random Number Generator (RNG) is the heart of any Monte Carlo simulation. A flawed RNG can introduce systematic errors and affect the reproducibility of the results. Several aspects of Thompson's RNG require investigation:

- Type of RNG used (e.g., Mersenne Twister, linear congruential generator): RNGs differ in period length, statistical quality, and computational efficiency, and the choice should match the demands of the simulation. Simple linear congruential generators, for example, have relatively short periods and visible structure between successive outputs, which makes them risky for large simulations. High-quality, well-tested RNGs like the Mersenne Twister are generally recommended.

- Seed selection and its influence on reproducibility: The seed used to initialize the RNG is crucial for reproducibility. Recording a fixed seed lets the simulation be replicated exactly, allowing for verification and validation. Thompson's approach to seed selection needs careful review.

- Testing for randomness and independence of the generated numbers: It is vital to test the generated numbers for randomness and independence. Standard statistical tests, such as checks for autocorrelation between successive values and goodness-of-fit tests for uniformity, should be applied to assess the quality of the RNG.
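
These RNG checks can be sketched with Python's standard library, whose `random` module is built on the Mersenne Twister. The sketch shows that a recorded seed reproduces a stream exactly, then applies a simple lag-1 autocorrelation test. For contrast it includes `weak_lcg`, a hypothetical, deliberately poor linear congruential generator (multiplier 5, increment 1, modulus 2^16). It is chosen only to show what a failing result looks like. The seed value is arbitrary.

```python
import math
import random


def lag1_autocorrelation(xs):
    """Sample lag-1 autocorrelation; close to 0 for independent draws."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    cov = sum((xs[i] - mean) * (xs[i + 1] - mean) for i in range(n - 1))
    return cov / var


def weak_lcg(seed, n, a=5, c=1, m=2**16):
    """Hypothetical, deliberately poor LCG: x <- (a*x + c) mod m, scaled to [0, 1)."""
    x, out = seed, []
    for _ in range(n):
        x = (a * x + c) % m
        out.append(x / m)
    return out


N = 2**16
SEED = 12345  # arbitrary; record it alongside the results

# Reproducibility: two generators created with the same seed yield identical streams.
mt_a = random.Random(SEED)
mt_b = random.Random(SEED)
draws = [mt_a.random() for _ in range(N)]
assert draws == [mt_b.random() for _ in range(N)]

# For N independent draws the lag-1 autocorrelation has a standard error of
# roughly 1/sqrt(N), so values beyond about 3/sqrt(N) are suspicious.
limit = 3 / math.sqrt(N)
r_mt = lag1_autocorrelation(draws)
r_lcg = lag1_autocorrelation(weak_lcg(SEED, N))
print(f"limit={limit:.4f}  Mersenne Twister r1={r_mt:+.4f}  weak LCG r1={r_lcg:+.4f}")
```

The Mersenne Twister stream should fall well inside the limit. The weak LCG shows a lag-1 autocorrelation near 0.2, even though over its full period its outputs cover [0, 1) evenly. A single test like this can expose a flaw but never prove a generator sound, which is why dedicated test batteries run many such tests.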

Statistical Significance and Error Analysis

A critical aspect of any Monte Carlo study is the assessment of statistical significance and the quantification of uncertainties. Thompson's analysis in this area may have been insufficient. Key aspects include:

- Calculation of confidence intervals for key metrics: Confidence intervals quantify the uncertainty around the estimated values and are essential for judging the reliability of the simulation results. When the samples come from an MCMC chain, the intervals must account for autocorrelation, for example through the effective sample size or batch means.

- Use of hypothesis testing to assess the significance of findings: Hypothesis testing lets researchers judge whether an observed effect is larger than sampling noise alone would plausibly produce. Thompson should have used appropriate statistical tests to determine whether the results are statistically significant.

- Analysis of variance and other relevant statistical measures: Analysis of variance (ANOVA) and related measures help analyze the variability in the results and identify potential sources of error, for example by separating variation between simulation settings from variation between replicate runs.
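
The following Python sketch computes a normal-approximation confidence interval for a Monte Carlo mean. It also uses a small pilot run to pick the number of draws needed for a target interval half-width, which is a precision-based sample-size calculation. The quarter-circle estimate of π, the helper names `mean_confidence_interval` and `required_samples`, the seed, and the 0.005 target are illustrative assumptions. The sketch assumes independent draws.

```python
import math
import random
import statistics


def mean_confidence_interval(samples, level=0.95):
    """Normal-approximation confidence interval for the mean of i.i.d. draws."""
    n = len(samples)
    mean = statistics.fmean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(n)
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    return mean - z * std_err, mean + z * std_err


def required_samples(pilot, half_width, level=0.95):
    """Draws needed for a CI half-width of about `half_width`, using the pilot's spread."""
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    return math.ceil((z * statistics.stdev(pilot) / half_width) ** 2)


# Illustrative problem: pi/4 is the fraction of uniform points in the unit
# square that land inside the quarter circle of radius 1.
rng = random.Random(2024)


def draw(n):
    return [1.0 if rng.random() ** 2 + rng.random() ** 2 <= 1.0 else 0.0
            for _ in range(n)]


pilot = draw(1_000)
n_needed = required_samples(pilot, half_width=0.005)  # half-width on the pi/4 scale
low, high = mean_confidence_interval(draw(n_needed))
print(f"pilot suggests N={n_needed}; 95% CI for pi: [{4 * low:.4f}, {4 * high:.4f}]")
```

The required number of draws grows with the square of the desired precision: halving the half-width roughly quadruples N.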

Understanding and Improving Thompson's Monte Carlo Performance

The underperformance of Thompson's Monte Carlo simulations likely stems from a combination of factors: suboptimal algorithmic choices, issues with data quality and preprocessing, potential RNG flaws, and inadequate statistical analysis. To improve future simulations, researchers should prioritize:

- Careful algorithm selection and parameter tuning: Choose algorithms appropriate for the problem and tune their parameters to speed convergence and reduce variance.

- Rigorous data quality control and preprocessing: Clean and preprocess data thoroughly to identify and handle outliers, deal with missing values, and mitigate bias.

- Use of high-quality, well-tested RNGs: Employ a robust RNG, record its seed, and test its output for randomness and independence.

- Comprehensive statistical analysis: Calculate confidence intervals, conduct hypothesis tests, and use appropriate statistical measures to assess the significance and uncertainty of the results.

By carefully considering algorithmic choices, data quality, RNG properties, and statistical analysis, researchers can avoid similar pitfalls and improve the reliability of their Monte Carlo results. Apply these insights to your own work to ensure accurate and reliable simulations.

Featured Posts

-

Padel Courts Coming To Bannatyne Ingleby Barwick A Construction Update

May 31, 2025

Padel Courts Coming To Bannatyne Ingleby Barwick A Construction Update

May 31, 2025 -

Cheap Samsung Tablet 101 Deal Beats I Pad Price

May 31, 2025

Cheap Samsung Tablet 101 Deal Beats I Pad Price

May 31, 2025 -

Idojaras Jelentes Belfoeld Csapadek Toebb Hullamban

May 31, 2025

Idojaras Jelentes Belfoeld Csapadek Toebb Hullamban

May 31, 2025 -

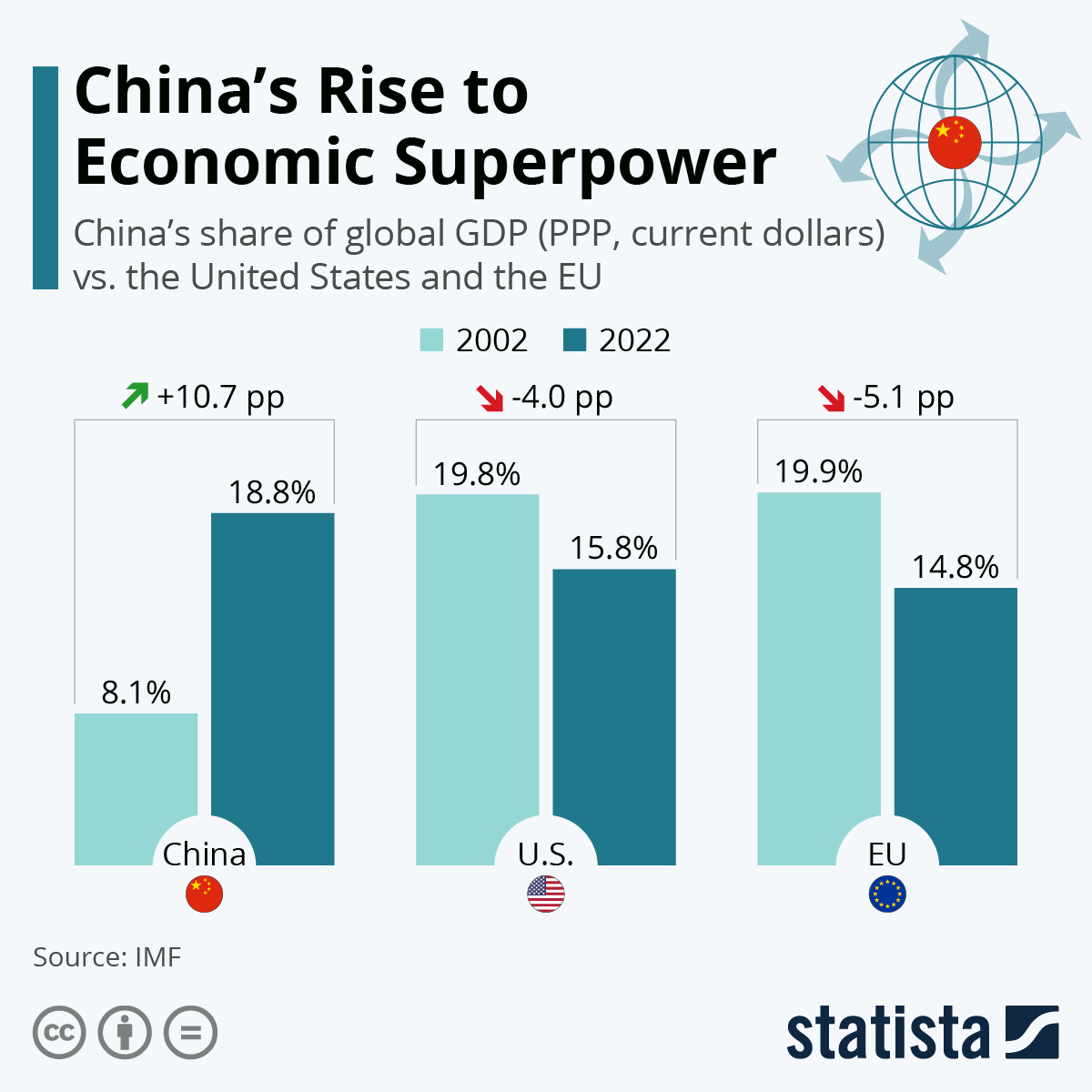

Americas Military Decline Chinas Rise And The Shifting Global Power Balance

May 31, 2025

Americas Military Decline Chinas Rise And The Shifting Global Power Balance

May 31, 2025 -

Strange Basement Find Baffles Plumber And Homeowner

May 31, 2025

Strange Basement Find Baffles Plumber And Homeowner

May 31, 2025