ChatGPT Creator OpenAI Under Federal Trade Commission Investigation

The FTC's Allegations Against OpenAI

The Federal Trade Commission's investigation into OpenAI centers on several concerns about the company's practices, and its outcome could affect the future of AI development and deployment.

Data Privacy Concerns

The FTC's primary concern is OpenAI's data handling and whether it violates privacy laws. The volume of user data collected to train ChatGPT raises questions about user consent and data security. The alleged shortcomings include:

- Insufficient user consent: Concerns exist about whether users fully understand how their data is collected, used, and potentially shared. The complexity of AI models makes it challenging for users to grasp the full extent of data usage.

- Inadequate data security measures: The FTC may investigate whether OpenAI has implemented sufficient measures to protect user data from breaches and unauthorized access. Data breaches, even accidental ones, can have severe consequences for user privacy and trust.

- Lack of transparency in data usage: Questions remain on how OpenAI uses user data beyond training its models. Understanding the entire data lifecycle is crucial for maintaining trust and compliance with data protection regulations.

Bias and Misinformation Concerns

Another significant area of concern is the potential for bias in OpenAI's models and the resulting spread of misinformation. ChatGPT, while impressive, has shown the following problems:

- Algorithmic bias: The models can reflect biases present in the massive datasets they are trained on, leading to unfair or discriminatory outputs. This is a serious ethical concern, particularly when the outputs impact decisions with real-world consequences.

- Difficulty in detecting and mitigating misinformation: The model can generate convincing yet false information, which raises concerns about its misuse to spread propaganda and disinformation. Countering this requires reliable methods for detecting false outputs and limiting their spread.

- Lack of safeguards against harmful outputs: The FTC is likely scrutinizing whether OpenAI has adequate safeguards in place to prevent the generation of harmful, offensive, or illegal content.

Transparency and Accountability Concerns

The FTC's investigation likely also focuses on how little OpenAI discloses about its algorithms and data handling processes. This opacity raises concerns in three areas:

- Algorithmic transparency: Understanding how the model arrives at its conclusions is critical for identifying and addressing biases and errors. "Black box" AI systems make it difficult to ensure accountability and trustworthiness.

- Model explainability: The inability to understand the decision-making process of the model limits its use in high-stakes scenarios where transparency is essential. Explainable AI (XAI) is becoming crucial for building trust and regulatory compliance.

- Accountability mechanisms: The absence of clear mechanisms for addressing issues related to bias, misinformation, or data breaches raises concerns about OpenAI's overall accountability.

Potential Implications for OpenAI and the AI Industry

The FTC investigation carries significant implications for OpenAI and the broader AI landscape.

Financial Penalties

If found in violation of consumer protection laws or data privacy regulations, OpenAI could face substantial FTC fines. Such penalties could affect the company's financial stability and future development plans.

Reputational Damage

The investigation could severely damage OpenAI's brand reputation and erode public trust in its technology. Negative publicity can lead to decreased consumer confidence and hinder the adoption of OpenAI's products and services.

Impact on AI Development

The outcome of this investigation will likely have far-reaching consequences for AI development and regulation. It could prompt stricter rules for AI companies and shape the regulatory landscape for years to come, including how companies approach responsible AI development and ethical AI principles.

The Broader Context of AI Regulation

The OpenAI investigation is part of a growing global trend toward increased regulatory scrutiny of the AI industry.

Global AI Regulation Trends

Many countries are developing their own AI regulatory frameworks, such as the EU AI Act, reflecting a global recognition of the need for ethical and responsible AI development. These regulations often focus on data privacy, algorithmic transparency, and accountability.

The Need for Ethical AI Development

The OpenAI investigation underscores the critical importance of ethical AI development practices. Developing and deploying AI systems responsibly requires prioritizing user privacy, mitigating bias, ensuring transparency, and establishing clear accountability mechanisms.

Conclusion

The FTC's investigation into OpenAI, the creator of ChatGPT, highlights the growing need for responsible AI development and robust regulatory frameworks. The potential consequences for OpenAI (financial penalties, reputational damage, and effects on AI development) are significant. The investigation is a reminder that the development and deployment of AI technologies must prioritize ethical considerations and user privacy. Stay informed about the investigation, follow the latest news on AI regulation, and learn more about responsible AI development to help ensure a future where AI benefits all of humanity.

Featured Posts

-

Homes Reduced To Ashes Eastern Newfoundland Battles Devastating Wildfires

May 31, 2025

Homes Reduced To Ashes Eastern Newfoundland Battles Devastating Wildfires

May 31, 2025 -

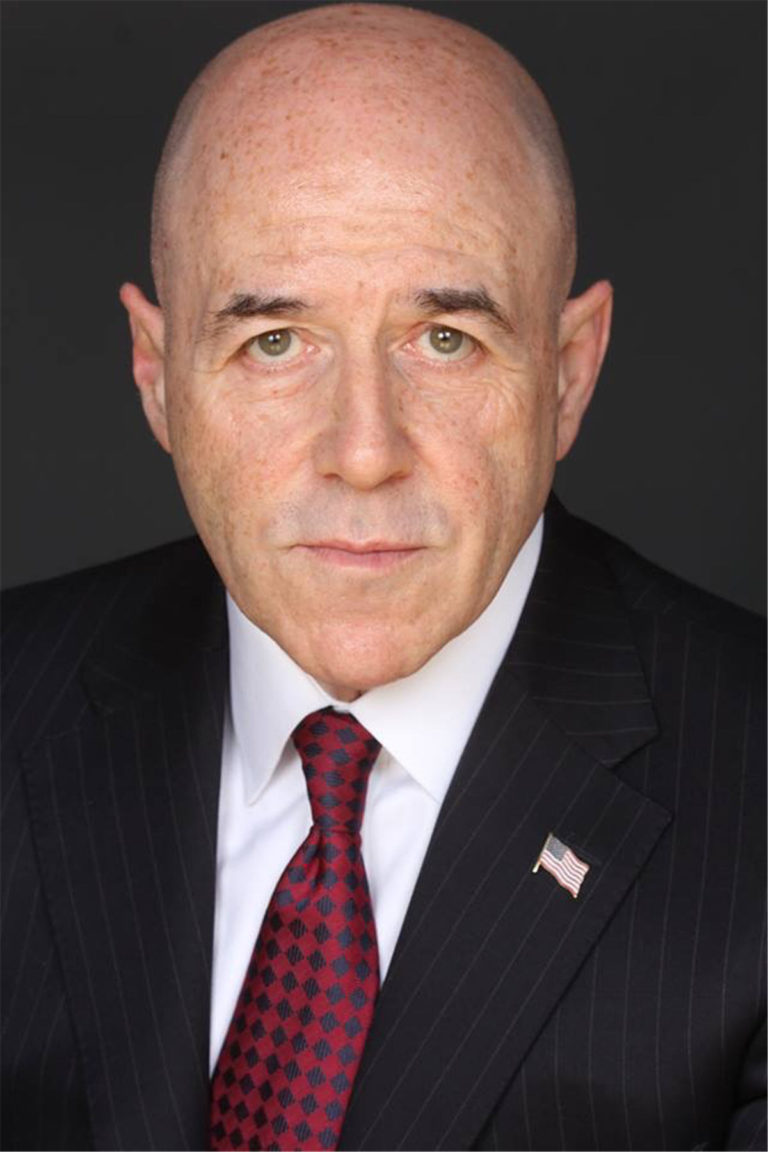

Bernard Kerik From 9 11 Hero To Convicted Felon

May 31, 2025

Bernard Kerik From 9 11 Hero To Convicted Felon

May 31, 2025 -

Achieving The Good Life Steps To Happiness And Fulfillment

May 31, 2025

Achieving The Good Life Steps To Happiness And Fulfillment

May 31, 2025 -

Rosemary And Thyme Essential Oils Aromatherapy And More

May 31, 2025

Rosemary And Thyme Essential Oils Aromatherapy And More

May 31, 2025 -

Rcn And Vet Nursing Collaboration A Plastic Glove Project Case Study

May 31, 2025

Rcn And Vet Nursing Collaboration A Plastic Glove Project Case Study

May 31, 2025