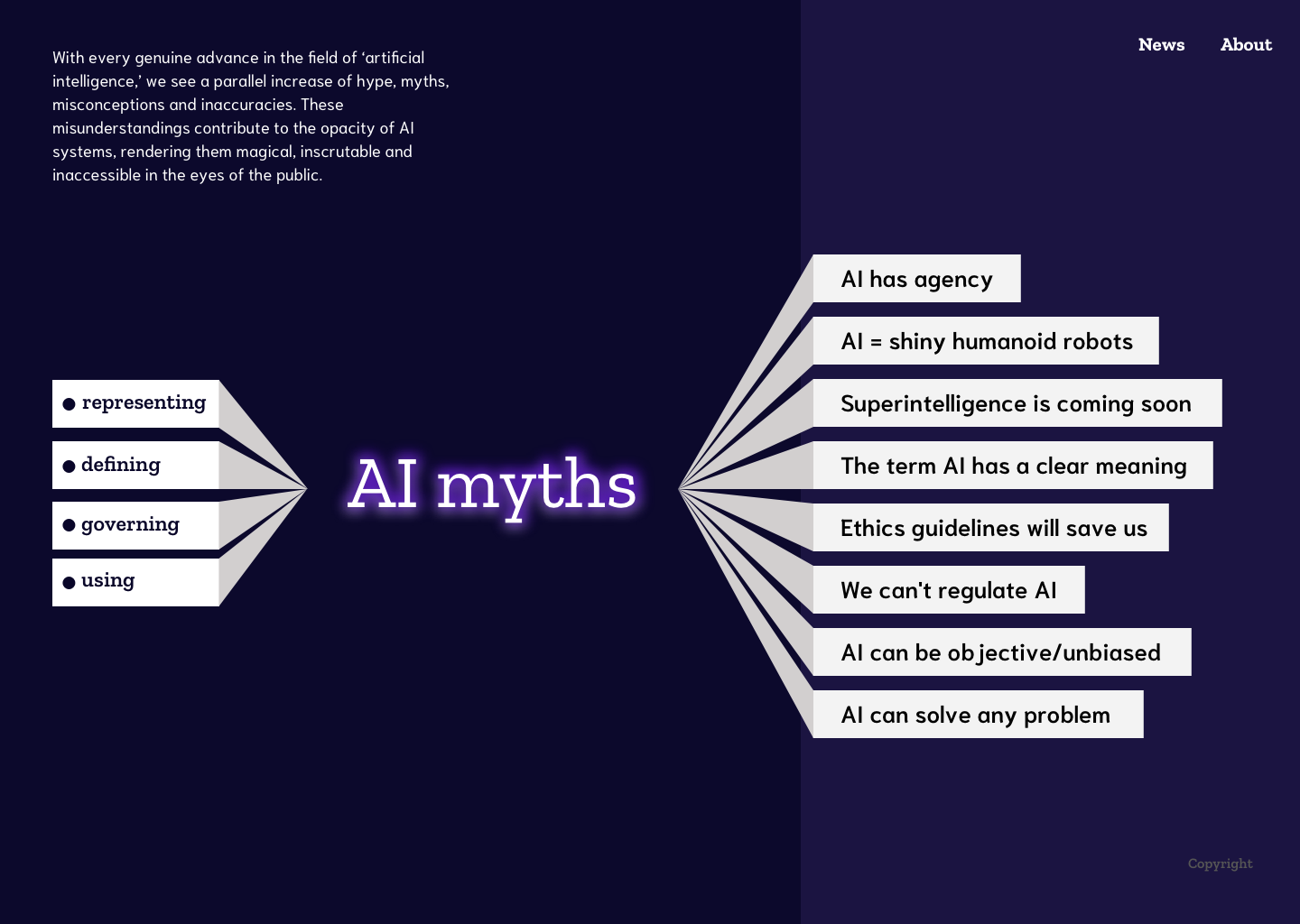

Debunking The Myth Of AI Learning: A Practical Guide To Ethical AI


Understanding AI's Learning Limitations

Contrary to popular belief, AI does not "learn" the way humans do. Rather than developing genuine understanding, AI systems identify statistical patterns in large datasets. This process is powerful but fundamentally limited, and it inherits whatever biases the training data contains. That limitation is central to ethical AI.

- AI learns from data, not understanding: AI algorithms excel at finding correlations and making predictions from the data they are trained on. However, they lack the contextual understanding and common-sense reasoning that humans possess. They operate on statistical associations, not genuine comprehension.

- The pervasive problem of data bias: Data bias strongly shapes AI outcomes. Datasets that reflect existing societal inequalities can produce discriminatory or unfair AI systems.

  - Examples of biased datasets: A facial recognition system trained mostly on images of light-skinned people may be markedly less accurate for people with darker skin. A loan-approval model trained on historical lending decisions can reproduce past bias against certain demographic groups.

  - Algorithmic bias: Even with unbiased data, the algorithm itself can introduce bias. This can happen through design choices such as the optimization objective, the selected features, or the decision threshold. Algorithmic bias compounds the problem and demands careful attention in ethical AI development.

- Generalization and reasoning limitations: Current AI systems often struggle to generalize or reason beyond the data they were trained on. As a result, they can fail badly in unexpected or novel situations. Human oversight and intervention remain essential for reliable and safe operation.

  - Examples of AI failure: Self-driving cars may misinterpret unusual road conditions, leading to accidents. A medical diagnosis model might misdiagnose a patient whose symptoms fall outside its training data.

  - The necessity of human-in-the-loop systems: Ethical AI requires human oversight to validate AI decisions, particularly in high-stakes situations. This human-in-the-loop approach helps mitigate risk and supports responsible use of AI.
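
Data bias of the kind described in this section is usually detected by disaggregated evaluation. The facial-recognition example is a typical case. The idea is to compute a metric separately for each demographic group instead of reporting one overall score.

The Python sketch below is a minimal illustration under three assumptions: you already have true labels, model predictions, and a group label for each example. The function names and the toy data are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def selection_rate_by_group(y_pred, groups):
    """Share of positive predictions (e.g. loan approvals) in each group."""
    positives = defaultdict(int)
    total = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        total[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / total[group] for group in total}


def max_gap(per_group):
    """Largest difference in a metric between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Made-up toy data, for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

acc = accuracy_by_group(y_true, y_pred, groups)
rates = selection_rate_by_group(y_pred, groups)
print(acc)           # {'A': 1.0, 'B': 0.25}
print(max_gap(acc))  # 0.75
print(rates)         # {'A': 0.75, 'B': 0.25}
```

A gap on its own does not prove discrimination. Which metric matters (accuracy, false-positive rate, approval rate) depends on the application. The point is that a single aggregate score can hide exactly the disparities described above.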
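
A human-in-the-loop system needs a concrete rule for deciding when a prediction goes to a person. One simple pattern escalates in two cases: when the model's confidence is low, or when the input falls outside the range seen during training. The second case targets the generalization failures described above.

This sketch rests on two assumptions:

- the model exposes a confidence score;
- per-feature minimum and maximum values were recorded at training time.

The `route` function and the 0.9 threshold are hypothetical choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_review: bool
    reason: str


def route(label, confidence, features, train_min, train_max, threshold=0.9):
    """Decide whether a model output can be used directly or needs a human.

    train_min / train_max hold per-feature ranges observed in the training
    data (an assumption); values outside them are a crude novelty signal.
    """
    for value, low, high in zip(features, train_min, train_max):
        if value < low or value > high:
            return Decision(label, confidence, True, "input outside training range")
    if confidence < threshold:
        return Decision(label, confidence, True, "low model confidence")
    return Decision(label, confidence, False, "auto-accepted")


# Hypothetical training ranges for two features.
train_min = [0.0, 18.0]
train_max = [1.0, 90.0]

print(route("low risk", 0.97, [0.4, 35.0], train_min, train_max).reason)
# auto-accepted
print(route("low risk", 0.97, [0.4, 102.0], train_min, train_max).reason)
# input outside training range
print(route("high risk", 0.62, [0.8, 50.0], train_min, train_max).reason)
# low model confidence
```

Range checks catch only the crudest kind of novelty, and model confidence scores are often poorly calibrated. In practice, the threshold would need tuning on held-out data, and the review queue itself should be monitored.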

The Importance of Data Privacy and Security in Ethical AI

The ethical development of AI is inextricably linked to data privacy and security. Collecting and using personal data for AI training raises significant ethical concerns. Responsible AI necessitates stringent measures to protect individual privacy and prevent data misuse.

- Ethical implications of data collection: The use of personal data for AI training raises concerns about surveillance, profiling, and discrimination. Regulations like GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) aim to protect individuals' data rights.

- Risks of data breaches and misuse: Data breaches can expose sensitive personal information, leading to identity theft, financial loss, and reputational damage. Deliberate misuse of data, such as manipulative targeted advertising, also poses a significant threat.

- Data anonymization and encryption: Employing strong anonymization techniques and robust encryption is crucial for safeguarding sensitive information used in AI training.

  - Best practices: Examples of best practices include:
    - data minimization (collecting only the data that is actually needed);
    - differential privacy (adding calibrated statistical noise to data or query results so that no individual's contribution can be reliably inferred);
    - federated learning (training models on decentralized data, sharing model updates rather than the raw data).

- Transparency in data usage and algorithms: Transparency in how data is used and how AI algorithms work is crucial for building trust and accountability. Openness regarding data sources and algorithmic processes allows for scrutiny and helps identify potential biases.
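
Differential privacy, listed among the best practices above, is easiest to see on a simple counting query. The classic Laplace mechanism adds noise drawn from a Laplace distribution with scale sensitivity / epsilon. A count changes by at most 1 when one person is added or removed, so its sensitivity is 1.

This is a minimal standard-library sketch. The function names and the patient records are hypothetical. Production systems should rely on a vetted differential-privacy library, because hand-rolled floating-point noise has known subtle pitfalls.

```python
import random


def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon, rng):
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1: adding or removing one person changes
    the true count by at most 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical records; only the noisy aggregate would be released.
patients = [{"age": a} for a in (23, 35, 41, 52, 67, 71, 38, 45)]
rng = random.Random(42)
noisy = private_count(patients, lambda p: p["age"] > 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

Each released answer consumes part of a privacy budget, and repeated queries on the same data add up. This is why real deployments track the total epsilon spent.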

Addressing Bias and Promoting Fairness in AI

Mitigating bias and promoting fairness are paramount in ethical AI. This requires a multi-faceted approach addressing both data and algorithms.

- Techniques for mitigating bias: Methods used to reduce bias in datasets and algorithms include:
  - data augmentation (adding more examples from underrepresented groups);
  - re-weighting data points so that underrepresented examples count more during training;
  - fairness-aware algorithms.

- Explainable AI (XAI): XAI aims to make AI decision-making processes more transparent and understandable. Explaining how a model arrives at a particular conclusion helps reveal potential biases so that they can be addressed and fairness improved.

- Diverse and inclusive teams: Building diverse and inclusive teams in AI development is crucial for identifying and addressing biases embedded in data and algorithms. Diverse perspectives help ensure that AI systems are fair and equitable for everyone.
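
The re-weighting technique mentioned above can be made concrete with one well-known scheme, often called reweighing (Kamiran and Calders). Each training example gets the weight P(group) × P(label) / P(group, label). After weighting, the label is statistically independent of group membership.

The sketch assumes discrete group and label values. The function names and toy data are hypothetical.

```python
from collections import Counter, defaultdict


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights):
    """Weighted share of positive labels within each group."""
    positive = defaultdict(float)
    total = defaultdict(float)
    for g, y, w in zip(groups, labels, weights):
        total[g] += w
        positive[g] += w * (y == 1)
    return {g: positive[g] / total[g] for g in total}


# Toy data: group A has mostly positive labels, group B mostly negative.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
# [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(weighted_positive_rate(groups, labels, [1.0] * len(labels)))
# {'A': 0.75, 'B': 0.25}
balanced = weighted_positive_rate(groups, labels, weights)
print({g: round(r, 3) for g, r in balanced.items()})
# {'A': 0.5, 'B': 0.5}
```

Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`. Reweighing only balances labels across groups in the training set. It does not remove bias hidden in the labels themselves or in proxy features, so per-group metrics still need checking after training.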
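
Permutation feature importance is one model-agnostic explanation technique that fits the XAI point above. The method shuffles one input feature at a time and measures how much the model's accuracy drops. If a sensitive attribute, or an obvious proxy for one, turns out to matter a lot, that is a cue to investigate.

The sketch below is a plain-Python illustration with a hypothetical toy model. scikit-learn provides a maintained implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by the shuffled value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that only looks at feature 0.
def toy_model(row):
    return int(row[0] > 0.5)


data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]

importances = permutation_importance(toy_model, X, y)
print([round(v, 2) for v in importances])
```

Feature 1 scores zero here because the model never uses it. Correlated features can share or mask importance, so the scores need careful interpretation rather than being taken as a complete explanation.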

Accountability and Transparency in Ethical AI Development

Establishing accountability and transparency in AI development is challenging but crucial for ethical AI. It's vital to understand who is responsible for the decisions made by AI systems and to ensure that these systems can be audited.

- Challenges of assigning responsibility: Determining accountability when AI systems make errors or cause harm is complex. The roles of developers, deployers, and users need to be clearly defined.

- Auditable AI systems: Auditable AI systems allow individual decisions to be traced and reviewed. This makes them essential for identifying and correcting errors or biases.

  - Techniques for tracking and tracing: Methods for achieving auditability include maintaining detailed logs of AI decisions, applying version control to models, code, and training data, and using explainable AI techniques.

- Guidelines and regulations: Clear guidelines and regulations for AI development and deployment are necessary to ensure responsible and ethical AI practices. These regulations should address data privacy, algorithmic bias, and accountability.
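
The tracking and tracing techniques listed above can be combined in a simple decision log. For each decision, the sketch below records:

- a timestamp;
- the model version;
- a SHA-256 hash of the inputs, rather than the raw personal data;
- the output;
- an optional explanation.

Each entry is chained to the previous entry's hash, so later edits are detectable. The class and field names are hypothetical.

A real system would need two further safeguards. It would persist entries to append-only storage and keep the latest hash somewhere the log's writer cannot alter. It would also consider a keyed hash, since plain hashes of low-entropy personal data can be reversed by guessing.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _sha256_json(obj):
    """Hash a JSON-serializable object deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only decision log; each entry stores the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, explanation=None):
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "input_sha256": _sha256_json(inputs),
            "output": output,
            "explanation": explanation,
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = _sha256_json(entry)  # hash covers all fields above
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _sha256_json(body):
                return False
            prev_hash = entry["entry_hash"]
        return True


log = AuditLog()
log.record("credit-model-1.3.0", {"income": 52000, "term_months": 36}, "approve",
           explanation={"top_feature": "income"})
log.record("credit-model-1.3.0", {"income": 18000, "term_months": 60}, "refer to human")
print(log.verify())  # True

log.entries[0]["output"] = "deny"  # simulate tampering
print(log.verify())  # False
```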

Building a Future with Responsible AI

Building a future with responsible AI requires a collaborative effort involving researchers, developers, policymakers, and the public. Education and awareness are key to promoting ethical AI principles and ensuring that AI benefits all of humanity.

- The role of education and awareness: Educating the public and professionals about the ethical implications of AI is crucial for fostering responsible AI development and use.

- Potential societal benefits of ethical AI: Ethical AI can contribute to solving critical societal challenges in healthcare, education, environmental protection, and more.

- Collaboration for responsible AI: Collaboration between researchers, developers, policymakers, and the public is essential for establishing ethical guidelines and regulations for AI development and deployment.

Conclusion: Embracing Ethical AI for a Better Future

This guide has highlighted the limitations of AI learning and the critical role of ethical considerations in AI development. We've explored the importance of data privacy, algorithmic fairness, accountability, and transparency in creating responsible AI. By understanding and embracing these principles, we can harness the power of AI for good and build a more equitable and just future. Let's work together to create a world where AI serves humanity ethically and responsibly.

Featured Posts

-

Isabelle Autissier Contre La Division Sur Les Questions Environnementales

May 31, 2025

Isabelle Autissier Contre La Division Sur Les Questions Environnementales

May 31, 2025 -

New Report Provincial Policies Key To Accelerating Home Construction

May 31, 2025

New Report Provincial Policies Key To Accelerating Home Construction

May 31, 2025 -

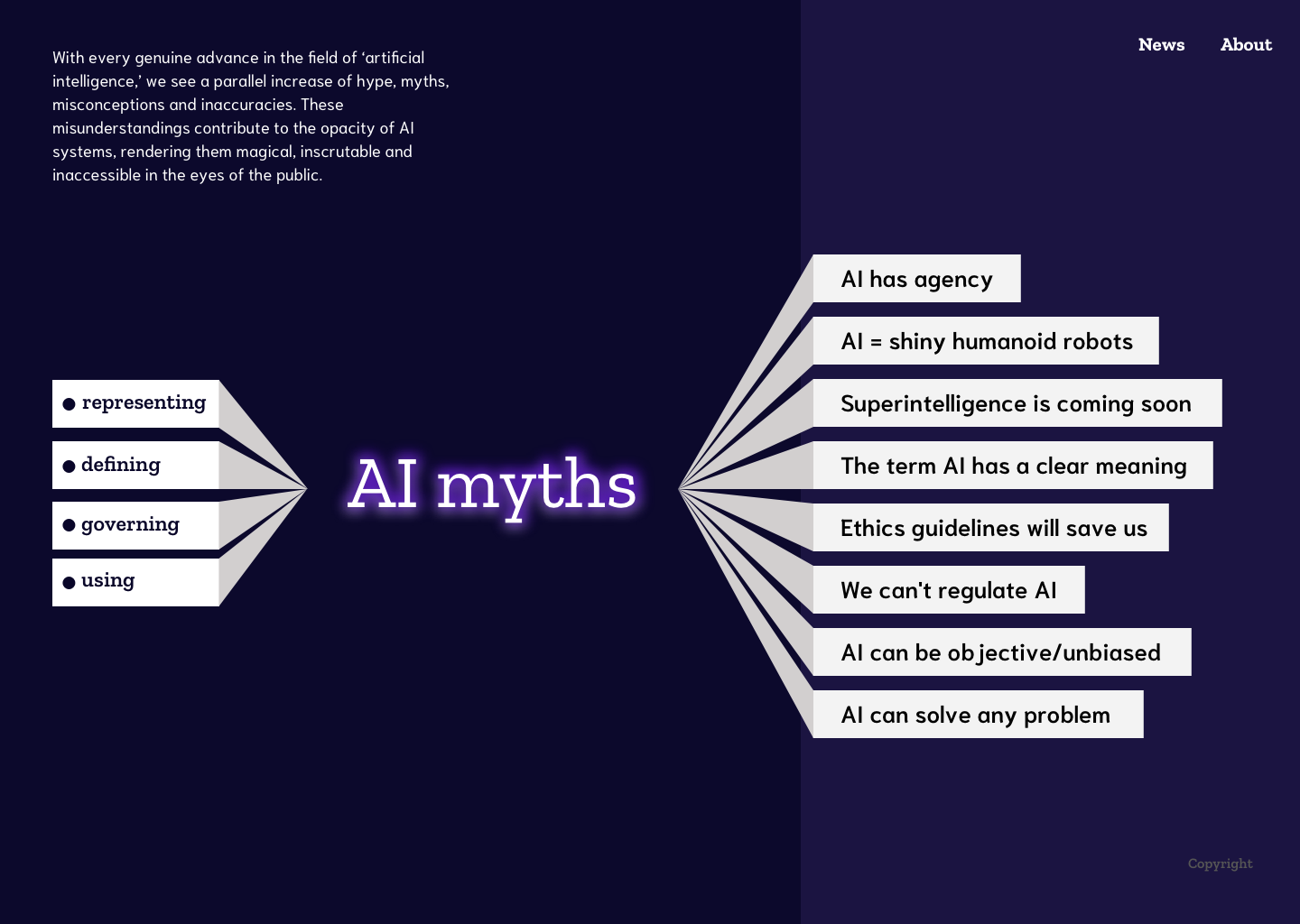

Best Summer Reads 30 Critic Approved Books

May 31, 2025

Best Summer Reads 30 Critic Approved Books

May 31, 2025 -

Update Fierce Wildfires In Eastern Manitoba

May 31, 2025

Update Fierce Wildfires In Eastern Manitoba

May 31, 2025 -

Friday Night Baseball Tigers Face Twins To Start Road Trip

May 31, 2025

Friday Night Baseball Tigers Face Twins To Start Road Trip

May 31, 2025