Is AI Therapy A Surveillance Tool In A Police State?

Data Collection and Privacy Concerns in AI Therapy

The convenience of AI therapy comes at a cost: extensive data collection. AI therapy platforms gather vast amounts of personal information, raising significant data privacy concerns. Understanding the scope of this data collection is crucial to assessing the potential risks.

The Scope of Data Collection

Depending on the platform, AI therapy services may collect several types of data, including:

- Voice recordings: Detailed recordings of therapy sessions, capturing nuances of speech and tone.

- Text messages: Written communications between the user and the AI, revealing personal thoughts, feelings, and experiences.

- Location data: Records of where the user goes, which can reveal sensitive information about their daily routines and movements.

- Biometric data: Data collected from wearable devices, such as heart rate and sleep patterns, offering insights into the user's physical and emotional state.

Each of these data points may seem innocuous in isolation, but combined they can be used to build comprehensive psychological profiles of individuals. Platforms are often not transparent about how this data is used, stored, and protected, and many users do not clearly understand what happens to it.

Data breaches are a real risk, and unauthorized access to mental health records could have devastating consequences for the people affected. Because mental health data is among the most sensitive categories of personal information, it warrants a far higher level of protection than it currently receives. This vulnerability underscores the need for robust data security measures and transparent data handling policies across the AI therapy sector.

Potential for Misuse by Law Enforcement

The extensive data collected by AI therapy platforms is an attractive target for government surveillance, and its potential misuse by law enforcement agencies is a serious concern.

Government Access to Data

Several scenarios illustrate how government agencies might gain access to AI therapy data:

- Warrantless access: Backdoors or data-sharing agreements could allow government agencies to access data without proper warrants or judicial oversight.

- Targeted surveillance: AI therapy data could be used to identify and target individuals deemed "at risk," potentially based on subjective criteria or biased algorithms. This raises serious concerns about the potential for abuse and disproportionate targeting of vulnerable populations.

- Lack of regulation: The absence of comprehensive regulations specifically protecting users from state surveillance through AI therapy platforms leaves individuals exposed to potential abuses of power.

The intersection of AI therapy and national security concerns calls for a robust legal framework that prevents law enforcement from gaining unchecked access to sensitive mental health data, backed by rigorous oversight and accountability.

The Algorithmic Bias Problem in AI Therapy

AI therapy platforms are not immune to the pervasive issue of algorithmic bias. The algorithms powering these tools are trained on datasets that may reflect existing societal biases, leading to discriminatory outcomes.

Bias in Algorithms and Data Sets

Biases in algorithms and training data can negatively impact certain demographic groups. For example, an AI therapy tool trained primarily on data from a specific cultural background may not effectively address the needs of users from diverse backgrounds.

- Lack of diversity: Homogeneous development teams and unrepresentative testing populations exacerbate the problem, producing algorithms that perpetuate existing societal inequalities.

- Perpetuating inequalities: Biased algorithms can reinforce harmful stereotypes and contribute to the marginalization of already vulnerable communities.

Addressing algorithmic bias requires a multi-faceted approach, including careful data curation, diverse development teams, and rigorous testing to ensure fairness and inclusivity. The pursuit of "inclusive AI" in mental health is paramount.

The Lack of Regulation and Oversight

The current regulatory landscape surrounding AI therapy is inadequate to protect user privacy. The absence of comprehensive data protection laws specifically addressing AI-powered mental health tools creates a significant vulnerability.

The Need for Stronger Privacy Laws

Stronger data protection laws are urgently needed:

- Data protection specific to AI mental health: Laws should address the unique privacy challenges posed by the collection and use of mental health data by AI systems.

- Independent audits and oversight: Independent audits and oversight of AI therapy platforms are crucial to ensure compliance with data protection regulations and ethical standards.

- Greater user control: Users should have greater control over their data, including the ability to access, correct, and delete their information.

The accountability of AI therapy providers must be strengthened through regulatory frameworks that prioritize user privacy and data security.

Conclusion

The potential use of AI therapy as a surveillance tool in a police state poses significant dangers to privacy, data security, and individual freedoms. Extensive data collection, the potential for misuse by law enforcement, algorithmic bias, and inadequate regulation together create a recipe for disaster. Preventing misuse of this technology will require greater public awareness, stricter regulations, and clear ethical guidelines.

We urge readers to advocate for stronger data protection laws and for greater transparency in how AI-powered mental health tools are developed and deployed, and to read and understand the privacy policies of any AI therapy apps they use. Mental health data must be protected, not turned into an instrument of surveillance. The future of AI therapy depends on responsible development and deployment that improves mental well-being without compromising fundamental rights. We must demand responsible AI therapy that safeguards privacy and prevents the chilling effects of AI mental health surveillance.

Featured Posts

-

Up To 40 Off Nike Air Dunks Jordans And More Sneakers At Foot Locker

May 15, 2025

Up To 40 Off Nike Air Dunks Jordans And More Sneakers At Foot Locker

May 15, 2025 -

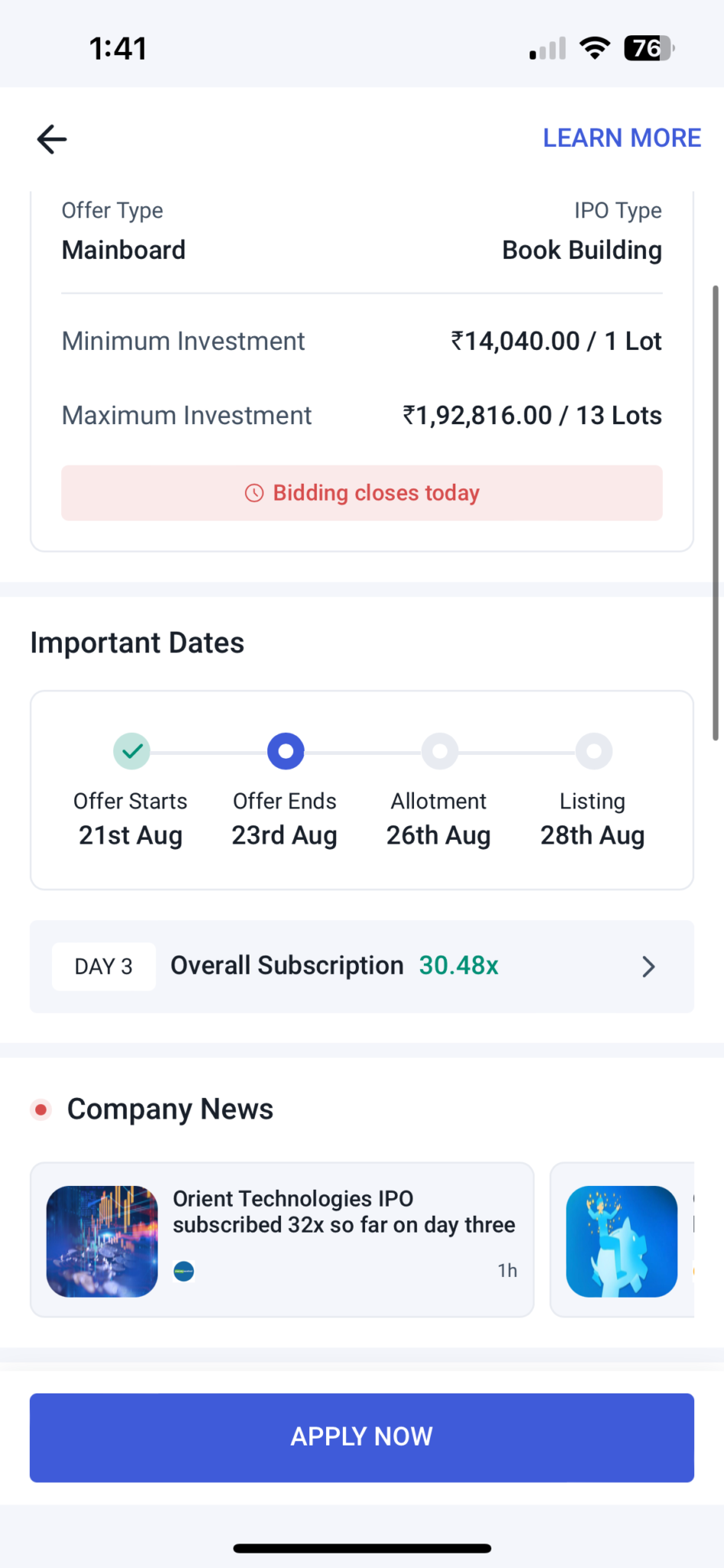

Understanding Jm Financials R400 Target For Baazar Style Retail

May 15, 2025

Understanding Jm Financials R400 Target For Baazar Style Retail

May 15, 2025 -

Jalen Brunsons Return Knicks Pistons Playoff Push

May 15, 2025

Jalen Brunsons Return Knicks Pistons Playoff Push

May 15, 2025 -

Australias Election Comparing The Policy Pitfalls Of Albanese And Dutton

May 15, 2025

Australias Election Comparing The Policy Pitfalls Of Albanese And Dutton

May 15, 2025 -

Lindts Central London Chocolate Paradise A Closer Look

May 15, 2025

Lindts Central London Chocolate Paradise A Closer Look

May 15, 2025