Navigating The New CNIL AI Guidelines: A Practical Approach

Understanding the Core Principles of the CNIL AI Guidelines

The CNIL AI guidelines rest on a set of key principles that put people at the center of AI development. These principles aim to balance innovation with the protection of fundamental rights, and understanding them is the first step toward compliance. They include:

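- Principle of human oversight: AI systems should remain under human control, with humans retaining ultimate responsibility for decisions and actions. This means establishing clear lines of accountability and ensuring that people can review and, where necessary, override automated decisions, particularly those that produce legal or similarly significant effects on individuals (GDPR Article 22).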

- Principle of fairness and non-discrimination: AI systems must be designed and implemented to avoid bias and ensure fair treatment for all individuals, regardless of their background or characteristics. This requires careful consideration of data selection, algorithm design, and ongoing monitoring for discriminatory outcomes. Fairness-aware machine learning techniques can help here.

- Principle of transparency and explainability: Users should have a clear understanding of how AI systems work and the factors influencing their decisions. This promotes trust and accountability, allowing for scrutiny and redress in case of adverse outcomes. Explainable AI (XAI) techniques are vital for meeting this requirement.

- Principle of data security and protection: AI systems often rely on large amounts of personal data. The CNIL guidelines emphasize robust security measures to protect this data from unauthorized access, use, disclosure, alteration, or destruction. This includes implementing appropriate technical and organizational measures, as required by Article 32 of the GDPR.

- Principle of accountability: Organizations are responsible for demonstrating compliance with the CNIL AI guidelines. This includes maintaining detailed records of AI system development, deployment, and operation, as well as implementing effective mechanisms for addressing complaints and grievances.
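
One way to put "ongoing monitoring for discriminatory outcomes" into practice is to compare how often a system produces a favorable outcome for each group of people. The sketch below computes per-group selection rates and the largest gap between them (a demographic parity check). It is only one fairness metric among several and is not a method prescribed by the CNIL; the decisions, group labels, and 0.1 alert threshold are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Share of favorable outcomes (1) for each group label."""
    if len(outcomes) != len(groups):
        raise ValueError("outcomes and groups must have the same length")
    positives = defaultdict(int)
    totals = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(outcomes, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(outcomes, groups)
    if not rates:
        raise ValueError("no outcomes to evaluate")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical decisions (1 = approved) and group labels, for illustration only.
    outcomes = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(outcomes, groups))  # {'A': 0.75, 'B': 0.25}
    gap = demographic_parity_gap(outcomes, groups)
    # The 0.1 threshold is an illustrative assumption, not a regulatory figure.
    if gap > 0.1:
        print(f"Review needed: parity gap {gap:.2f}")
```

A large gap does not prove discrimination on its own, but it flags where a human review of data and model design is warranted.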

Impact on Data Collection and Processing

The CNIL AI guidelines significantly impact data collection and processing practices. Organizations must ensure their AI-related data handling complies with data protection principles, primarily those enshrined within the GDPR. This includes:

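- Legal basis and information: Every AI-related processing operation needs a legal basis under GDPR Article 6. Consent is one of those bases, but not the only one; others, such as legitimate interests, may apply depending on the context. Where consent is relied on, it must be freely given, specific, informed, and unambiguous. In every case, individuals must receive clear, concise, and easily understandable information about how their data will be used for AI purposes.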

- Data minimization: Collect only the data strictly necessary for the specific AI application. Avoid excessive or unnecessary data collection.

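- Purpose limitation: Clearly define the legitimate processing purposes for AI applications and ensure data is used only for those stated purposes. Reusing data for a new purpose is permitted only if that purpose is compatible with the original one (GDPR Article 6(4)) or if the new processing rests on the individual's consent or on Union or Member State law.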

- Data quality and accuracy: Implement procedures to ensure the accuracy and quality of data used to train and operate AI systems. Inaccurate data can lead to biased and unreliable outcomes.
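
Data minimization and purpose limitation can be partly enforced in code by keeping a register of declared purposes, together with the fields each purpose needs, and filtering records before they reach a training or inference pipeline. The following is a minimal sketch assuming a hypothetical `PURPOSE_REGISTER` with made-up purposes and field names; deciding which purposes and fields are actually justified remains a legal analysis, not a coding task.

```python
# Hypothetical purpose register: each declared purpose maps to the only
# fields the AI application may use for it.
PURPOSE_REGISTER = {
    "fraud_detection": {"transaction_amount", "merchant_category", "timestamp"},
    "churn_prediction": {"tenure_months", "plan_type", "support_tickets"},
}


class UndeclaredPurposeError(Exception):
    """Raised when data is requested for a purpose that was never declared."""


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields registered for `purpose`.

    Fields outside the allow-list are dropped (data minimization), and an
    unknown purpose is rejected outright (purpose limitation).
    """
    try:
        allowed = PURPOSE_REGISTER[purpose]
    except KeyError:
        raise UndeclaredPurposeError(f"purpose {purpose!r} is not declared") from None
    return {k: v for k, v in record.items() if k in allowed}


if __name__ == "__main__":
    customer = {
        "name": "Jane Doe",
        "email": "jane@example.com",
        "tenure_months": 18,
        "plan_type": "premium",
        "support_tickets": 3,
    }
    print(minimize(customer, "churn_prediction"))
    # {'tenure_months': 18, 'plan_type': 'premium', 'support_tickets': 3}
```

Rejecting undeclared purposes by default means any new use of the data forces a deliberate update to the register, which is a natural point to document the compatibility assessment.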

Implementing Algorithmic Transparency and Explainability

Algorithmic transparency and explainability are central to the CNIL AI guidelines. Organizations must strive to make the decision-making processes of their AI systems understandable and accessible. This involves:

- Documentation: Maintain comprehensive documentation of AI algorithms, including design choices, data sources, and testing procedures. This documentation should be accessible to relevant stakeholders, including users and regulators.

- User explanations: Provide users with clear and understandable explanations of AI-driven outcomes. This might involve simplified summaries or visual representations of the decision-making process.

- Bias mitigation: Implement methods to detect and reduce bias in algorithms, for example by auditing training data for under-represented groups and comparing outcomes across groups both before and after deployment.

- Tools and technologies: Leverage tools and technologies to support algorithmic transparency, such as XAI techniques and model interpretability methods.
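
For simple models, a user-facing explanation can be derived directly from the model itself. The sketch below assumes a hypothetical linear credit-scoring model with made-up weights and baseline values. For a linear model, each feature's contribution relative to a baseline is exact, and the largest contributions can be turned into plain-language sentences. More complex models typically need dedicated XAI methods (for example SHAP or LIME), which this sketch does not implement.

```python
# Hypothetical linear scoring model: weights and baseline (e.g. average
# applicant) values are illustrative, not taken from any real system.
WEIGHTS = {"income": 0.4, "debt_ratio": -1.2, "late_payments": -0.8}
BASELINE = {"income": 3.0, "debt_ratio": 0.3, "late_payments": 1.0}


def contributions(applicant: dict) -> dict:
    """Contribution of each feature relative to the baseline applicant.

    For a linear model, score(applicant) - score(baseline) equals the sum
    of these terms, so the explanation is exact rather than approximate.
    """
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}


def explain(applicant: dict, top_n: int = 2) -> list[str]:
    """Plain-language sentences for the features that moved the score most."""
    contrib = contributions(applicant)
    ranked = sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)
    ranked = [kv for kv in ranked if kv[1] != 0]  # skip features with no effect
    sentences = []
    for feature, value in ranked[:top_n]:
        direction = "raised" if value > 0 else "lowered"
        label = feature.replace("_", " ")
        sentences.append(f"Your {label} {direction} the score by {abs(value):.2f} points.")
    return sentences


if __name__ == "__main__":
    applicant = {"income": 2.5, "debt_ratio": 0.6, "late_payments": 3}
    for line in explain(applicant):
        print(line)
    # Your late payments lowered the score by 1.60 points.
    # Your debt ratio lowered the score by 0.36 points.
```

Limiting the output to the top few factors keeps the explanation readable for users, while the full `contributions` dictionary can be kept in the technical documentation for regulators.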

Addressing Risk Management and Compliance

Effective risk management is crucial for complying with the CNIL AI guidelines. Organizations should proactively identify, assess, and mitigate potential risks associated with their AI systems. This includes:

- DPIAs: Conduct Data Protection Impact Assessments (DPIAs) for AI processing that is likely to result in a high risk to individuals' rights and freedoms (GDPR Article 35), in order to identify and address those risks before deployment.

- DPO role: The Data Protection Officer (DPO) plays a key role in ensuring compliance, advising on data protection matters, and monitoring compliance with the CNIL AI guidelines and the GDPR.

- Data security measures: Implement robust data security measures to protect personal data used in AI systems, complying with GDPR Article 32.

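- Incident handling: Develop procedures for handling data breaches and incidents related to AI. Under the GDPR, a personal data breach must be notified to the CNIL without undue delay and, where feasible, within 72 hours of becoming aware of it, unless it is unlikely to result in a risk to individuals (Article 33); affected individuals must be informed without undue delay when the risk to them is high (Article 34).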
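
Two of these steps lend themselves to small internal tools: a DPIA screening check and a breach notification clock. The sketch below uses the nine criteria from the WP29 guidelines on DPIAs (endorsed by the EDPB), under which processing that meets two or more criteria will in most cases require a DPIA, and the 72-hour window from GDPR Article 33(1). The criterion identifiers are hypothetical labels chosen for this example, and the output is a screening aid, not a legal determination: a single criterion can still justify a DPIA, and the CNIL publishes its own list of processing operations that require one.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical identifiers for the nine WP29 DPIA criteria.
DPIA_CRITERIA = {
    "evaluation_or_scoring",
    "automated_decision_with_significant_effect",
    "systematic_monitoring",
    "sensitive_or_highly_personal_data",
    "large_scale",
    "matching_or_combining_datasets",
    "vulnerable_data_subjects",
    "innovative_technology",
    "prevents_exercise_of_rights",
}

NOTIFICATION_WINDOW = timedelta(hours=72)  # GDPR Article 33(1), "where feasible"


def dpia_likely_required(flags: set[str]) -> bool:
    """Screening heuristic: two or more criteria usually indicate a DPIA."""
    unknown = flags - DPIA_CRITERIA
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return len(flags) >= 2


def notification_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the CNIL, counted from awareness of the breach."""
    return aware_at + NOTIFICATION_WINDOW


if __name__ == "__main__":
    print(dpia_likely_required({"evaluation_or_scoring", "large_scale"}))  # True
    aware = datetime(2025, 4, 28, 9, 30, tzinfo=timezone.utc)
    print(notification_deadline(aware).isoformat())  # 2025-05-01T09:30:00+00:00
```

Using timezone-aware datetimes avoids ambiguity when the teams detecting and reporting a breach work in different time zones.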

Staying Ahead of the Curve: Future Developments and Best Practices

The landscape of AI regulation is constantly evolving. To maintain compliance, organizations need a proactive approach:

- Regulatory updates: Stay informed about upcoming changes and updates to the CNIL AI guidelines and other relevant regulations.

- Compliance program: Implement a robust compliance program that anticipates future regulations and allows for adaptation to evolving best practices.

- Industry engagement: Participate in industry best practice groups and engage with the CNIL to contribute to the development of responsible AI practices.

- Employee training: Invest in AI ethics training for employees to foster a culture of responsible AI development and deployment.

Conclusion

Navigating the CNIL AI guidelines requires a comprehensive understanding of the core principles, a commitment to ethical AI practices, and a proactive approach to risk management and compliance. By implementing the steps outlined in this article, organizations can keep their AI systems aligned with the CNIL AI guidelines, mitigating potential risks and fostering trust with customers and stakeholders. Don't wait for a regulatory infraction; take proactive steps today to ensure your AI initiatives are compliant. For further assistance with the CNIL's AI guidance and with compliance more broadly, consult legal and data protection experts. Remember, responsible AI development and deployment are crucial for success in the evolving digital landscape.

Featured Posts

-

Superboul 2025 Polniy Obzor Vystupleniy Zvezd

Apr 30, 2025

Superboul 2025 Polniy Obzor Vystupleniy Zvezd

Apr 30, 2025 -

Analyse Du Communique Amf Seb Sa Reference Cp 2025 E1021792

Apr 30, 2025

Analyse Du Communique Amf Seb Sa Reference Cp 2025 E1021792

Apr 30, 2025 -

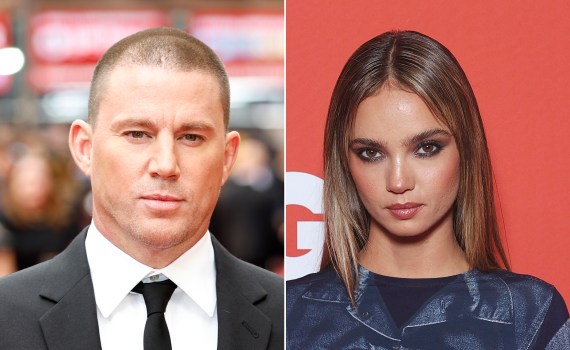

Channing Tatum And Inka Williams A Detailed Look At Their Romance

Apr 30, 2025

Channing Tatum And Inka Williams A Detailed Look At Their Romance

Apr 30, 2025 -

Channing Tatum 44 And Inka Williams 25 Enjoy A Shopping Trip In West Hollywood

Apr 30, 2025

Channing Tatum 44 And Inka Williams 25 Enjoy A Shopping Trip In West Hollywood

Apr 30, 2025 -

Our Yorkshire Farm Reuben Owen Opens Up About His Childhood Challenges

Apr 30, 2025

Our Yorkshire Farm Reuben Owen Opens Up About His Childhood Challenges

Apr 30, 2025

Latest Posts

-

Channing Tatum Confirms Romance With Inka Williams Following Zoe Kravitz Breakup

Apr 30, 2025

Channing Tatum Confirms Romance With Inka Williams Following Zoe Kravitz Breakup

Apr 30, 2025 -

Channing Tatum And Inka Williams A New Relationship Blossoms

Apr 30, 2025

Channing Tatum And Inka Williams A New Relationship Blossoms

Apr 30, 2025 -

Channing Tatum Moves On Pda With Inka Williams After Zoe Kravitz Split

Apr 30, 2025

Channing Tatum Moves On Pda With Inka Williams After Zoe Kravitz Split

Apr 30, 2025 -

Channing Tatums New Relationship Is Inka Williams The One

Apr 30, 2025

Channing Tatums New Relationship Is Inka Williams The One

Apr 30, 2025 -

Schneider Electric And Vignan Universitys Center Of Excellence Enhancing Education And Skills In Vijayawada

Apr 30, 2025

Schneider Electric And Vignan Universitys Center Of Excellence Enhancing Education And Skills In Vijayawada

Apr 30, 2025