OpenAI Facing FTC Investigation: A Deep Dive Into ChatGPT's Potential Risks

FTC's Concerns Regarding ChatGPT's Data Practices

The FTC's investigation likely centers on OpenAI's data handling practices, which raise significant concerns about user privacy and potential regulatory violations.

Data Privacy Violations

ChatGPT relies on vast amounts of user data, which raises several potential privacy concerns:

- Insufficient User Consent: Did OpenAI obtain truly informed consent from users regarding the collection and use of their data for training and operational purposes? Many users may not fully understand the extent to which their interactions are being used.

- Inadequate Data Security Measures: The sheer volume of data processed by ChatGPT necessitates robust security measures to prevent breaches and unauthorized access. Any weaknesses could expose sensitive personal information.

- Potential for Unauthorized Data Collection: Does ChatGPT collect data beyond what is explicitly disclosed to users? Concerns exist about the potential for unintentional or even deliberate collection of sensitive information.

These practices could draw scrutiny under existing data privacy laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in California. The FTC does not enforce either law itself; its authority comes chiefly from Section 5 of the FTC Act, which prohibits unfair or deceptive practices. Non-compliance could result in substantial fines and reputational damage for OpenAI. Sensitive data potentially collected includes health information, financial details, and other personally identifiable information inadvertently revealed in user prompts. Earlier data privacy cases will likely inform the FTC's investigation and any action it takes.
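One way to picture the risk of personal details slipping into prompts is a redaction pass that runs before a prompt is stored or logged. The sketch below is illustrative only: the `PII_PATTERNS` table and the `redact_prompt` function are hypothetical names, the three regular expressions are deliberately simple, and nothing here describes how OpenAI actually handles user data.

```python
import re

# Hypothetical, deliberately simple patterns. Real PII detection needs
# far broader coverage (names, addresses, health terms, and so on).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_prompt(text: str) -> str:
    """Replace each match of a known pattern with a bracketed label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact_prompt("Email jane@example.com, SSN 123-45-6789"))
```

Pattern matching of this kind only catches well-structured identifiers. Free-text disclosures, such as a user describing a medical condition, would pass through untouched, which is part of why consent and disclosure matter as much as technical filtering.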

Algorithmic Bias and Discrimination

Another critical concern is the potential for algorithmic bias in ChatGPT. The model's training data, drawn from vast online sources, may reflect and amplify existing societal biases:

- Gender Bias: ChatGPT's responses might exhibit gender stereotypes, portraying certain professions or characteristics as more associated with one gender than another.

- Racial Bias: Similar biases can manifest in relation to race and ethnicity, perpetuating harmful stereotypes and discriminatory outcomes.

- Religious Bias: The model might exhibit biases related to religious beliefs, reflecting prejudices present in the training data.

The ethical implications of biased AI are profound: biased output can lead to unfair or discriminatory outcomes in the applications built on it. Mitigating bias requires careful curation of training data, rigorous testing for bias, and ongoing monitoring of the model's output. Techniques like adversarial training and fairness-aware algorithms can reduce these biases but not eliminate them.
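As a rough illustration of what "testing for bias" can mean in practice, the sketch below computes a demographic parity gap: the difference between the highest and lowest rate of favorable outcomes across groups. The function names and the sample records are assumptions made for this example, not a method attributed to OpenAI or the FTC, and a real audit would use several metrics rather than this one.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the share of favorable outcomes per group.

    records: iterable of (group, outcome) pairs, with outcome 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest per-group rate."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Made-up labels: 1 means the model's response was judged favorable.
    sample = [("a", 1), ("a", 1), ("a", 0), ("a", 0),
              ("b", 1), ("b", 0), ("b", 0), ("b", 0)]
    print(positive_rates(sample))
    print(demographic_parity_gap(sample))
```

A gap near zero suggests the groups receive favorable outcomes at similar rates on this one measure. It does not show the model is unbiased, since other fairness criteria can disagree with demographic parity.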

ChatGPT's Potential for Misinformation and Malicious Use

ChatGPT's ability to generate fluent, human-like text also presents significant risks.

Spread of Misinformation

ChatGPT can easily be used to create convincing but entirely false information:

- Fake News Generation: The model can generate realistic-sounding news articles or social media posts containing fabricated information.

- Impersonation: Malicious actors could use ChatGPT to impersonate individuals, spreading false statements or damaging reputations.

Detecting AI-generated misinformation is a significant challenge, requiring advanced detection methods and fact-checking initiatives. Improved fact-checking mechanisms, coupled with media literacy education, are crucial to combating this threat.

Cybersecurity Risks

The capabilities of ChatGPT also pose cybersecurity risks:

- Phishing Scams: ChatGPT can create highly convincing phishing emails or messages, making them difficult to identify.

- Malware Creation: While not directly designed for this purpose, the model could potentially assist in generating malicious code.

- Adversarial Attacks: The model itself might be vulnerable to adversarial attacks, where carefully crafted inputs could manipulate its responses for malicious purposes.

Robust safeguards are crucial to prevent malicious use, including techniques to detect and filter harmful outputs, as well as ongoing monitoring and refinement of the model's security architecture. Real-world examples of AI-powered phishing attacks already demonstrate the potential severity of these risks.

The Broader Implications for AI Regulation

The FTC's investigation into OpenAI has significant implications for the future of AI regulation.

The Need for Responsible AI Development

The investigation underscores the critical need for ethical considerations in AI development:

- Transparency: OpenAI and other AI developers must be transparent about their data collection practices, model limitations, and potential biases.

- Accountability: Mechanisms are needed to hold AI developers accountable for the harms caused by their technologies.

- Human Oversight: Human oversight is crucial to ensure responsible use and prevent unintended consequences.

Industry standards and regulatory frameworks are essential to guide responsible AI development, promoting ethical practices and minimizing risks.

The Future of AI Regulation

The FTC investigation may significantly shape future AI regulation:

- Risk-Based Regulation: Regulations might focus on higher-risk applications of AI, requiring stricter oversight and safety measures.

- Self-Regulation: The industry might be encouraged to adopt self-regulatory measures, complemented by government oversight.

- International Collaboration: Global collaboration is crucial to establish consistent and effective AI regulations.

Conclusion

The FTC's investigation into OpenAI and ChatGPT underscores the need for responsible AI development and robust regulation. The risks discussed here, including data privacy violations, algorithmic bias, and malicious use, make that need urgent. Developers and regulators must prioritize user privacy, mitigate bias, and implement safeguards against misuse; ignoring these risks could have significant consequences for individuals and society. The future of AI hinges on responsible innovation, so let's demand accountability and transparency to ensure that AI serves humanity effectively and ethically. Stay informed about developments in the OpenAI FTC investigation and the ongoing conversation around responsible AI development.

Featured Posts

-

Oakland A S Muncys Impact On The Lineup

May 16, 2025

Oakland A S Muncys Impact On The Lineup

May 16, 2025 -

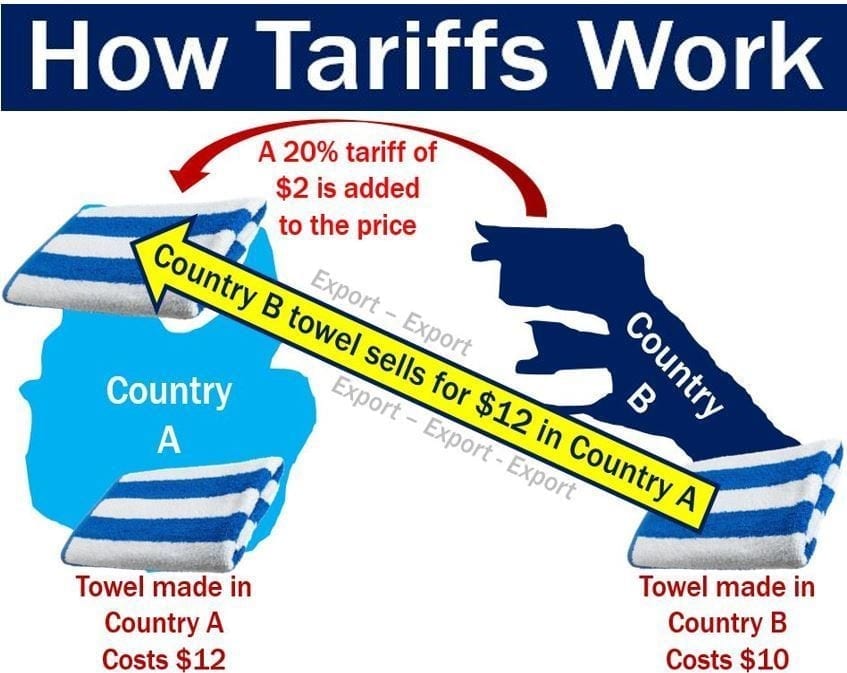

California Revenue Losses The Impact Of Trumps Tariffs

May 16, 2025

California Revenue Losses The Impact Of Trumps Tariffs

May 16, 2025 -

Bidens Mental State Questioned Warrens Unsuccessful Response

May 16, 2025

Bidens Mental State Questioned Warrens Unsuccessful Response

May 16, 2025 -

The Case For Jimmy Butler A Superior Alternative To Kevin Durant For The Warriors

May 16, 2025

The Case For Jimmy Butler A Superior Alternative To Kevin Durant For The Warriors

May 16, 2025 -

Should You Take Creatine A Comprehensive Review

May 16, 2025

Should You Take Creatine A Comprehensive Review

May 16, 2025