OpenAI's ChatGPT: The FTC Investigation and the Future of AI

The FTC's Concerns Regarding ChatGPT

The FTC's investigation into ChatGPT focuses on several key areas, each raising serious concerns about the responsible development and deployment of large language models (LLMs).

Data Privacy and Security

The FTC is investigating potential violations of consumer privacy laws in the collection, use, and storage of the personal data used to train ChatGPT. The massive training dataset that gives the model its capabilities may contain sensitive personal information. There are also concerns about the unintended disclosure of sensitive information through prompts and responses. For example, a user's prompt might inadvertently reveal private details, and the model's response could reproduce or infer other sensitive information from its training data.

- Lack of transparency in data usage: Users may not fully understand how their data is being used to train and improve the model.

- Potential for data breaches: The vast amounts of data involved create a significant target for cyberattacks, potentially exposing sensitive user information.

- Insufficient safeguards for children's data: The use of ChatGPT by minors raises particular concerns about the protection of children's data and their vulnerability to online harms. The FTC is likely investigating whether OpenAI has implemented sufficient safeguards to comply with regulations like COPPA (Children's Online Privacy Protection Act).

Algorithmic Bias and Discrimination

The FTC is scrutinizing whether ChatGPT's algorithms perpetuate existing societal biases, leading to unfair or discriminatory outcomes. Because ChatGPT learns patterns from its training data, any biases in that data are likely to be reproduced in its output. This can show up as skewed responses in certain contexts or as a disproportionate impact on specific demographic groups. For example, the model might exhibit gender or racial biases in its responses to certain prompts.

- Analysis of output for bias: The FTC is likely undertaking a thorough analysis of ChatGPT's outputs to identify and quantify any biases present.

- Mitigation strategies for algorithmic fairness: OpenAI's strategies for mitigating bias in the model's training and operation are under scrutiny.

- Addressing potential for discriminatory outputs: The FTC is assessing the potential harm caused by discriminatory outputs, and whether OpenAI has taken sufficient steps to prevent such outcomes.

Misinformation and the Spread of Falsehoods

ChatGPT's ability to generate realistic-sounding but factually incorrect information raises concerns about the spread of misinformation and disinformation. The model's capacity to convincingly create fake news or propaganda represents a significant risk. The FTC is assessing the potential for malicious use of ChatGPT, both by individuals and potentially state-sponsored actors.

- Detection of fabricated content: The difficulty of detecting content generated by AI systems such as ChatGPT is a key focus of the investigation.

- Development of safeguards against malicious use: OpenAI's efforts to prevent the misuse of ChatGPT for the creation and dissemination of falsehoods are under scrutiny.

- Fact-checking and verification mechanisms: The FTC is evaluating the effectiveness of any mechanisms OpenAI has in place to verify the accuracy of the information generated by ChatGPT.

Implications for the Future of AI Development

The FTC investigation into ChatGPT has far-reaching implications for the future of AI development.

Increased Regulatory Scrutiny

The FTC investigation signals a broader trend toward increased government regulation of AI technologies. This is likely to include stricter data privacy laws, requirements for algorithmic transparency, and potentially liability frameworks for AI-related harms.

The Need for Ethical AI Development

The investigation underscores the critical need for ethical considerations to be integrated into the design and deployment of AI systems. This involves careful consideration of potential biases, data privacy implications, and the potential for misuse.

Impact on Innovation

While regulation is necessary, it's crucial to ensure that it doesn't stifle innovation in the AI sector. A balanced approach is needed that promotes responsible development while avoiding overly burdensome regulations that hinder progress.

- Balancing innovation with responsible development: Finding this balance will require collaboration between regulators, researchers, and industry stakeholders.

- Establishing clear ethical guidelines for AI: The development of industry-wide ethical guidelines and standards is crucial for guiding AI development.

- Promoting transparency and accountability in AI systems: Increased transparency in AI algorithms and data usage is essential for building trust and accountability.

The Future of ChatGPT and Similar AI Models

The FTC investigation is likely to significantly shape the future of ChatGPT and similar AI models.

Adaptation to Regulatory Changes

OpenAI and other AI developers will need to adapt their models and practices to comply with emerging regulations. This likely involves significant changes in data handling, algorithm design, and internal governance structures.

Enhanced Safety and Transparency Measures

Expect to see improvements in data security, bias mitigation, and mechanisms to prevent the spread of misinformation. These improvements are likely to involve increased investment in technical solutions and human oversight.

Evolution of AI Governance

The outcome of the FTC investigation will likely shape the future of AI governance and the development of industry best practices. It could lead to new regulations, industry self-regulation initiatives, and increased international cooperation on AI governance.

- Improved data privacy controls: Enhanced encryption, data anonymization techniques, and stricter access controls are likely to be implemented.

- More robust content moderation systems: More sophisticated systems will be needed to detect and remove harmful or misleading content generated by AI.

- Increased transparency in model training and data usage: Greater transparency will be necessary to build trust and enable external audits of AI systems.

Conclusion

The FTC investigation into OpenAI's ChatGPT marks a pivotal moment for the AI industry. The concerns it raises highlight the urgent need for responsible AI development that prioritizes ethical considerations, data privacy, and the prevention of harm. The future of ChatGPT and similar AI models will depend on the industry's ability to adapt to increased regulatory scrutiny and to implement robust safeguards against these risks. By prioritizing ethical development and transparency, the industry can harness the transformative power of ChatGPT while minimizing its potential downsides.

Featured Posts

-

Blue Origin Postpones Launch Unspecified Vehicle Subsystem Problem

May 05, 2025

Blue Origin Postpones Launch Unspecified Vehicle Subsystem Problem

May 05, 2025 -

The Crypto Party 48 Hours Of Unforgettable Events

May 05, 2025

The Crypto Party 48 Hours Of Unforgettable Events

May 05, 2025 -

Ruth Buzzi 88 A Career In Comedy And Childrens Television Remembered

May 05, 2025

Ruth Buzzi 88 A Career In Comedy And Childrens Television Remembered

May 05, 2025 -

King Charles Refusal To Communicate Harrys Security Battle

May 05, 2025

King Charles Refusal To Communicate Harrys Security Battle

May 05, 2025 -

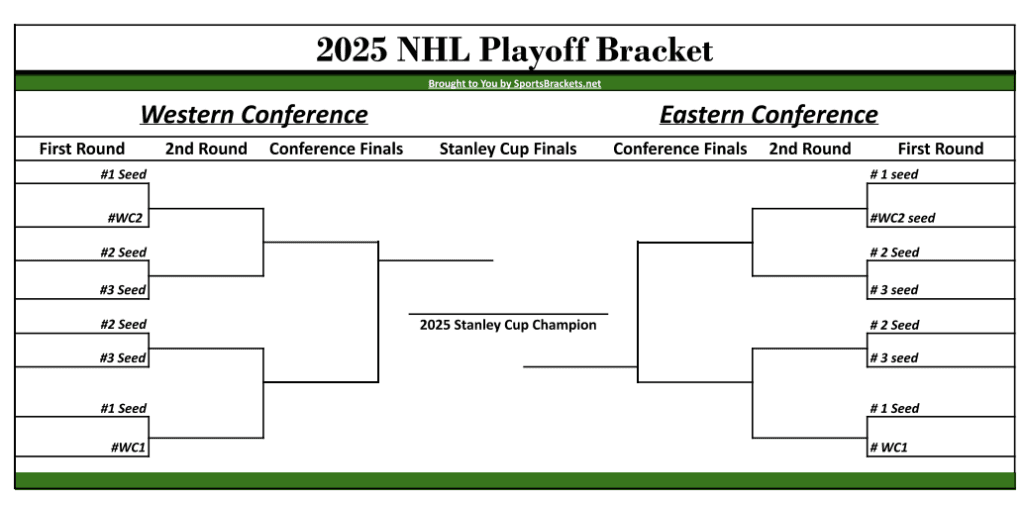

Nhl Standings Crucial Friday Matchups And Playoff Scenarios

May 05, 2025

Nhl Standings Crucial Friday Matchups And Playoff Scenarios

May 05, 2025

Latest Posts

-

Will The Oilers Rebound Against The Canadiens A Morning Coffee Preview

May 05, 2025

Will The Oilers Rebound Against The Canadiens A Morning Coffee Preview

May 05, 2025 -

Oilers Vs Canadiens Morning Coffee Predictions And Bounce Back Potential

May 05, 2025

Oilers Vs Canadiens Morning Coffee Predictions And Bounce Back Potential

May 05, 2025 -

Nhl Highlights Avalanche Defeat Panthers Despite Late Comeback

May 05, 2025

Nhl Highlights Avalanche Defeat Panthers Despite Late Comeback

May 05, 2025 -

Johnston And Rantanen Power Avalanche To Victory Over Panthers

May 05, 2025

Johnston And Rantanen Power Avalanche To Victory Over Panthers

May 05, 2025 -

First Round Nhl Playoffs Key Factors And Predictions

May 05, 2025

First Round Nhl Playoffs Key Factors And Predictions

May 05, 2025