OpenAI's ChatGPT Under FTC Scrutiny: Implications For AI Development

The FTC's Concerns Regarding ChatGPT and AI Development

The FTC's investigation into ChatGPT stems from several key areas of concern regarding the development and deployment of this powerful generative AI tool. These concerns touch upon fundamental ethical and legal issues surrounding data privacy, algorithmic bias, and the potential for misuse.

Data Privacy Violations

ChatGPT's ability to process and generate human-like text raises significant data privacy concerns. The potential for breaches includes:

- Unauthorized data collection: The service may collect and store personal information contained in user inputs without explicit consent.

- Insecure data storage: The storage and handling of user data may not meet sufficient security standards, increasing the risk of data breaches and leaks.

- Lack of user consent for data usage: Users may not be fully aware of how their data is being used to train and improve the model.

- Potential for personal data leaks: Data breaches could expose sensitive personal information, violating user privacy and potentially leading to identity theft or other harms.

These concerns fall within the FTC's consumer protection authority, which covers unfair or deceptive practices under Section 5 of the FTC Act. They also overlap with privacy laws that other authorities enforce, such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in California. Ensuring data privacy in large language models (LLMs) like ChatGPT presents significant challenges due to the sheer volume of data processed and the complexity of the algorithms involved. The FTC is likely scrutinizing OpenAI's data handling practices to determine whether they meet its consumer protection standards.
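One way to reduce the risk of storing personal information from user inputs is to redact obvious identifiers before a prompt is logged. The following is a minimal sketch of that idea, assuming simple regular expressions for email addresses and US-style phone numbers. The function name `redact_pii` and both patterns are illustrative assumptions, not a description of OpenAI's actual practice, and real systems would need much broader detection.

```python
import re

# Illustrative patterns only (assumptions); real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    # Hypothetical user input, redacted before it would be stored.
    print(redact_pii("Contact jane@example.com or 555-123-4567."))
```

Redacting before storage means a later breach or retraining run never sees the raw identifiers, at the cost of missing anything the patterns do not cover.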

Algorithmic Bias and Fairness

Another key concern revolves around algorithmic bias and fairness. ChatGPT, like many AI models, is trained on massive datasets that may reflect existing societal biases. This can lead to:

- Bias in training data leading to discriminatory outputs: The model may generate responses that perpetuate stereotypes or discriminate against certain groups.

- Reinforcement of existing societal biases: The model's outputs can inadvertently reinforce harmful stereotypes and prejudices, potentially leading to discriminatory outcomes.

- Lack of transparency in algorithm development: The lack of transparency in how the algorithm is developed and trained can make it difficult to identify and address biases.

The ethical implications of biased AI are profound. Biased outputs can disproportionately affect marginalized communities, leading to unfair or discriminatory treatment. Mitigating bias in AI models requires careful consideration of the training data, rigorous testing for bias, and ongoing monitoring of the model's performance. The FTC's focus on fairness underscores the importance of addressing these ethical concerns in AI development.
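Testing for bias can start with a simple comparison of outcomes across groups. The sketch below is one possible check, assuming model responses have already been labeled as favorable or not for each demographic group. The function names `positive_rates` and `parity_gap` and the labeling scheme are hypothetical, and a gap in favorable-outcome rates is only one of many fairness measures.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True when a response was judged favorable (a hypothetical label).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in favorable-outcome rate between any two groups.

    Raises ValueError if `records` is empty.
    """
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical labeled outcomes for two groups.
    sample = [("a", True), ("a", True), ("b", True), ("b", False)]
    print(positive_rates(sample))
    print(parity_gap(sample))
```

A gap near zero suggests similar treatment on this one measure. A large gap is a signal to inspect the training data and prompts, not proof of discrimination on its own.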

Misinformation and Misuse of ChatGPT

The ability of ChatGPT to generate human-quality text also raises concerns about misinformation and misuse. The potential risks include:

- Generation of false or misleading information: The model can generate convincingly realistic but entirely false information, which could be easily spread online.

- Use for malicious purposes such as supporting deepfakes or spreading propaganda: The technology could be exploited to write scripts for fake videos or audio recordings produced with other tools, or to spread disinformation at scale.

- Potential for manipulation and social engineering: ChatGPT could be used to manipulate individuals or groups, for example, by creating personalized phishing attacks or spreading targeted propaganda.

Detecting and mitigating the spread of AI-generated misinformation is a major challenge, and AI developers bear significant responsibility for preventing the misuse of their technology. The FTC's investigation likely aims to determine whether OpenAI took sufficient measures to prevent the misuse of ChatGPT.

Implications for the Future of AI Development

The FTC's scrutiny of ChatGPT has significant implications for the future of AI development, impacting regulatory landscapes, industry practices, and the overall focus on responsible AI.

Increased Regulatory Scrutiny

The FTC's investigation signals a likely increase in government regulation of AI. This could involve:

- Stricter data privacy laws: New laws might impose stricter requirements for data collection, storage, and usage in AI systems.

- Ethical guidelines for AI development: Governments may establish guidelines or standards for ethical AI development, addressing issues such as bias, transparency, and accountability.

While increased regulation could slow down the pace of AI innovation in the short term, it could also foster greater trust and accountability in the long run. The potential benefits of government oversight include enhanced user protection, reduced bias, and increased transparency.

Shifting Industry Practices

In response to the FTC's concerns, AI companies will likely adapt their practices. This might include:

- Improved data privacy measures: Companies might implement more robust data security protocols and obtain more explicit user consent for data usage.

- Bias mitigation techniques: Companies might invest in more sophisticated bias detection and mitigation techniques to ensure fairness in their AI models.

- Content moderation and safety measures: Companies might implement stricter content moderation and safety measures to prevent the misuse of their AI technology.

These changes could significantly impact the business models of AI companies, potentially increasing development costs and requiring changes in how they operate.

Focus on Responsible AI Development

The FTC's investigation underscores the growing importance of ethical considerations in AI development. This necessitates:

- Transparency in AI systems: Developers should strive for greater transparency in how their AI systems work, including their training data and algorithms.

- Accountability for AI outputs: Developers should establish mechanisms for accountability in case of errors or harmful outputs from their AI systems.

- User privacy protection: Prioritizing user data privacy and security should be a central tenet of AI development.

Best practices for responsible AI development involve a collaborative effort among developers, policymakers, and the public to ensure that AI technologies are developed and deployed ethically and beneficially.

Conclusion

The FTC's investigation into OpenAI's ChatGPT is a watershed moment for the AI industry. The scrutiny highlights the critical need for responsible AI development, emphasizing data privacy, algorithmic fairness, and the prevention of misuse. The implications are far-reaching, affecting not only OpenAI but the entire AI landscape. Going forward, AI companies must prioritize ethical considerations and transparency to foster public trust and ensure the beneficial development and deployment of AI technologies. For all stakeholders, following how AI regulation evolves and understanding the implications of the FTC's scrutiny will be key to navigating this rapidly changing field.

Featured Posts

-

Trump Administration Orders Penn To Erase Transgender Swimmers Records

May 01, 2025

Trump Administration Orders Penn To Erase Transgender Swimmers Records

May 01, 2025 -

Enexis Laadtarieven Optimaal Opladen In Noord Nederland

May 01, 2025

Enexis Laadtarieven Optimaal Opladen In Noord Nederland

May 01, 2025 -

Michael Sheens Generosity 1 Million Debt Relief Initiative

May 01, 2025

Michael Sheens Generosity 1 Million Debt Relief Initiative

May 01, 2025 -

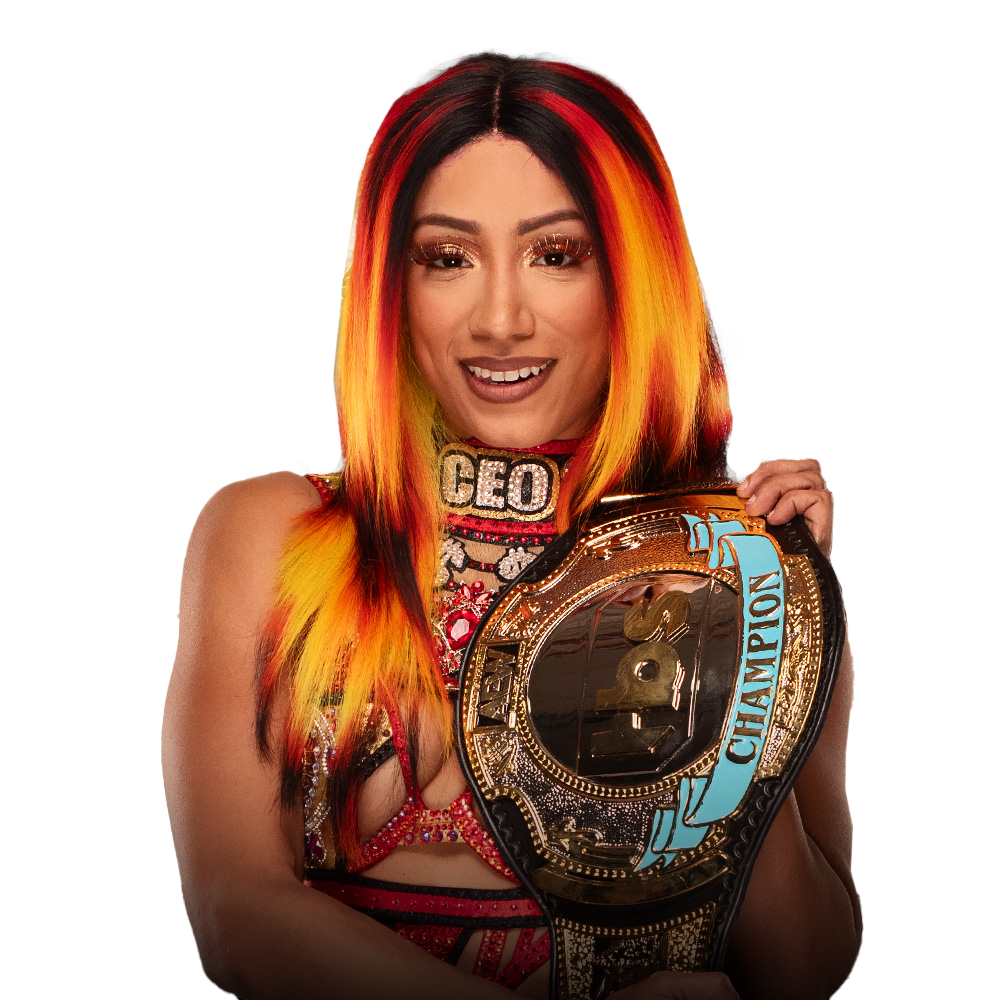

Mercedes Mone Seeks Tbs Championship From Momo Watanabe

May 01, 2025

Mercedes Mone Seeks Tbs Championship From Momo Watanabe

May 01, 2025 -

Amanda Holden Supports Davina Mc Call After Brain Tumour News

May 01, 2025

Amanda Holden Supports Davina Mc Call After Brain Tumour News

May 01, 2025

Latest Posts

-

Kansas City Royals Win Thriller Garcia Homer Witt Rbi Double Secure 4 3 Win Against Cleveland

May 01, 2025

Kansas City Royals Win Thriller Garcia Homer Witt Rbi Double Secure 4 3 Win Against Cleveland

May 01, 2025 -

Qlq Nady Alnsr Bsbb Arqam Jwanka Ma Hy Alasbab

May 01, 2025

Qlq Nady Alnsr Bsbb Arqam Jwanka Ma Hy Alasbab

May 01, 2025 -

Cleveland Guardians Beat New York Yankees 3 2 Bibees Strong Showing After Early Blow

May 01, 2025

Cleveland Guardians Beat New York Yankees 3 2 Bibees Strong Showing After Early Blow

May 01, 2025 -

Witt And Garcia Lead Royals To Victory Over Guardians 4 3 Final

May 01, 2025

Witt And Garcia Lead Royals To Victory Over Guardians 4 3 Final

May 01, 2025 -

Arqam Jwanka Tqlq Nady Alnsr Thlyl Shaml

May 01, 2025

Arqam Jwanka Tqlq Nady Alnsr Thlyl Shaml

May 01, 2025