Revolutionizing Voice Assistant Development: Key Announcements From OpenAI's 2024 Event

Enhanced Natural Language Processing (NLP) Capabilities

OpenAI's 2024 announcements included advances in natural language processing (NLP), the foundation of intelligent voice assistants. The improvements aim to help voice assistants understand the context, nuance, and intent behind user requests more accurately. In practice, this means moving away from simple keyword matching toward a deeper comprehension of human language.

- Improved Accuracy in Speech-to-Text Conversion: OpenAI's new models convert spoken words into text more accurately, even in noisy environments or with diverse accents. This leads to fewer misunderstandings and more reliable interactions.

- Enhanced Sentiment Analysis for More Personalized Responses: Gauging the user's emotional state more accurately allows for more empathetic and personalized responses. Voice assistants can adapt their tone and tailor their support to the user's perceived mood.

- Better Handling of Multilingual Requests: OpenAI has expanded its language support, enabling voice assistants to seamlessly handle requests in multiple languages and dialects. This makes voice technology accessible to a far broader global audience.

- Integration of Advanced Contextual Understanding Models: Advanced contextual understanding models allow the AI to maintain context across multiple turns of conversation, leading to more natural and fluid interactions. This reduces the need for repetitive clarification and improves the overall user experience.
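To make the idea of maintaining context across turns concrete, here is a minimal sketch of a rolling conversation window. It is not OpenAI's implementation; the `ConversationContext` class, its `max_turns` parameter, and the prompt format are all assumptions chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Tuple


@dataclass
class ConversationContext:
    """Hypothetical helper: keeps the most recent turns of a dialogue."""

    max_turns: int = 6  # assumed window size, not a documented value
    turns: Deque[Tuple[str, str]] = field(default_factory=deque)

    def add_turn(self, role: str, text: str) -> None:
        """Record a turn and drop the oldest ones beyond the window."""
        self.turns.append((role, text))
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def as_prompt(self) -> str:
        """Join the remembered turns into one text block for a language model."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


# Usage: the follow-up "And tomorrow?" only makes sense with earlier turns attached.
ctx = ConversationContext(max_turns=4)
ctx.add_turn("user", "What's the weather in Paris today?")
ctx.add_turn("assistant", "It is sunny in Paris today.")
ctx.add_turn("user", "And tomorrow?")
print(ctx.as_prompt())
```

A fixed window is the simplest possible policy; a production assistant would more likely summarize or selectively retain older turns rather than discard them outright.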

Breakthroughs in Speech Recognition Technology

Beyond NLP, OpenAI unveiled breakthroughs in speech recognition technology itself. These advancements focus on enhancing the accuracy, speed, and robustness of speech recognition systems, making voice assistants even more reliable and responsive.

- Reduced Latency for Faster Responses: OpenAI has significantly reduced the latency of its speech recognition models, so responses arrive almost instantaneously. Interactions feel more natural as a result.

- Improved Accuracy in Noisy Environments: The new models exhibit superior performance in noisy environments, accurately transcribing speech even amidst background distractions. This is a crucial improvement for real-world usability.

- Support for a Wider Range of Accents and Dialects: OpenAI's commitment to inclusivity is evident in its expanded support for accents and dialects. This makes voice assistants accessible to a more diverse population.

- Development of More Efficient Algorithms: The underlying algorithms have been optimized for greater efficiency, enabling faster processing and reduced computational requirements. This is particularly important for resource-constrained devices.
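Latency claims like the one above are easiest to evaluate when measured directly. The sketch below times an arbitrary transcription function over a set of audio clips. The `measure_latency_ms` helper and the `fake_transcribe` stand-in are hypothetical; a real test would pass in a function that calls an actual speech recognition model.

```python
import time
from statistics import median
from typing import Callable, List


def measure_latency_ms(
    transcribe: Callable[[bytes], str], clips: List[bytes]
) -> List[float]:
    """Return the wall-clock time in milliseconds of each transcribe() call."""
    latencies = []
    for clip in clips:
        start = time.perf_counter()
        transcribe(clip)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def fake_transcribe(audio: bytes) -> str:
    """Stand-in for a real speech-to-text call (assumption for this sketch)."""
    return f"<{len(audio)} bytes of audio>"


# Usage: report the median, which is less sensitive to outliers than the mean.
clips = [b"\x00" * 1600, b"\x00" * 3200, b"\x00" * 4800]
results = measure_latency_ms(fake_transcribe, clips)
print(f"median latency: {median(results):.3f} ms over {len(results)} clips")
```

Measuring on the target device matters here, since the efficiency gains are described as most relevant to resource-constrained hardware.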

New Developer Tools and APIs for Voice Assistant Creation

OpenAI didn't just showcase advancements in AI; it also presented new and improved developer tools and APIs that simplify the process of building voice assistants. These tools broaden access to cutting-edge voice technology, allowing a wider range of developers to build with it.

- Easier Integration with Existing Platforms: The new APIs offer seamless integration with popular platforms and frameworks, making it easier for developers to incorporate voice capabilities into their existing applications and services.

- Simplified Model Training and Deployment: OpenAI has streamlined the model training and deployment process, reducing the technical expertise and time required to bring voice assistants to market.

- Access to Pre-trained Models for Faster Development: Developers can leverage pre-trained models to accelerate development, saving valuable time and resources. This lowers the barrier to entry for smaller teams and startups.

- New APIs for Custom Voice Assistant Creation: The introduction of new, more versatile APIs allows developers to create highly customized voice assistants tailored to specific needs and use cases.

Ethical Considerations and Responsible AI in Voice Assistant Development

OpenAI emphasized the importance of ethical considerations and responsible AI in voice assistant development. The company highlighted several initiatives aimed at mitigating bias, ensuring privacy, and promoting transparency in AI voice assistant technology.

- Mitigation of Biases in Training Data: OpenAI is actively working to mitigate biases in the training data used to develop its models, ensuring fairness and inclusivity in voice assistant interactions.

- Improved Data Security and Privacy Measures: Robust data security and privacy measures are in place to protect user data and maintain confidentiality. This is paramount for building user trust.

- Guidelines for Ethical Voice Assistant Design: OpenAI has developed and shared guidelines for ethical voice assistant design, promoting responsible development practices within the community.

- Focus on User Control and Transparency: OpenAI is committed to providing users with greater control over their data and interactions, fostering transparency in how voice assistants function.

Conclusion: The Future of Voice Assistant Development with OpenAI

OpenAI's 2024 event showcased notable advancements in AI voice assistant technology that are likely to reshape voice assistant development. The improvements in NLP, speech recognition, and developer tools, coupled with a strong emphasis on ethical considerations, pave the way for voice assistants that are more intelligent, helpful, and trustworthy. Explore the tools and resources unveiled at the event and start building the next generation of AI voice assistants today!

Featured Posts

-

Brian Brobbey Physical Prowess Poses Europa League Threat

May 10, 2025

Brian Brobbey Physical Prowess Poses Europa League Threat

May 10, 2025 -

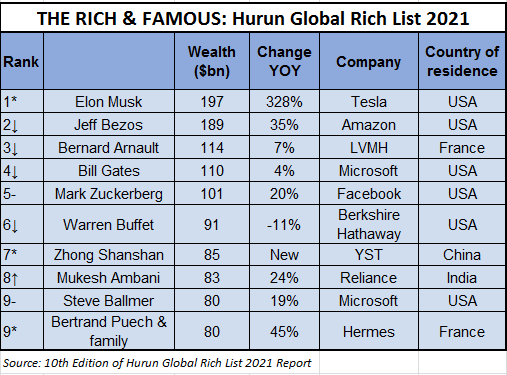

2025 Hurun Global Rich List Elon Musk Retains Top Spot Despite 100 Billion Loss

May 10, 2025

2025 Hurun Global Rich List Elon Musk Retains Top Spot Despite 100 Billion Loss

May 10, 2025 -

Nyt Strands Answers Saturday March 15 2024 Game 377

May 10, 2025

Nyt Strands Answers Saturday March 15 2024 Game 377

May 10, 2025 -

Beyond The Monkey Two More Exciting Stephen King Films Coming In 2024

May 10, 2025

Beyond The Monkey Two More Exciting Stephen King Films Coming In 2024

May 10, 2025 -

Arrestan A Universitaria Transgenero Por Usar Bano Femenino El Caso Que Genera Debate

May 10, 2025

Arrestan A Universitaria Transgenero Por Usar Bano Femenino El Caso Que Genera Debate

May 10, 2025