Revolutionizing Voice Assistant Development: OpenAI's 2024 Announcements


Enhanced Natural Language Processing (NLP) Capabilities

OpenAI's advances in NLP are improving the core functions of voice assistants: they understand requests more accurately and respond in more natural-sounding speech. These gains are opening a new era of human-computer interaction.

Improved Speech-to-Text and Text-to-Speech

OpenAI's latest models are delivering substantial improvements in both speech recognition and speech synthesis.

- Reduced latency in speech recognition: Users experience quicker responses, leading to smoother and more fluid conversations.

- More nuanced understanding of accents and dialects: Voice assistants are becoming more inclusive, accurately interpreting a wider range of accents and dialects, improving accessibility for a global user base.

- Improved text-to-speech synthesis with emotional inflection: Synthetic voices are becoming more expressive and human-like. They convey emotion more effectively and make interactions more engaging. This relies on models that infer cues such as tone and emphasis from the text being spoken.
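
As a toy illustration of turning emotional cues in text into speech parameters, the sketch below uses a hand-written keyword list to pick rate and pitch values for an SSML prosody element. The keyword list is an assumption made only for this example. Production TTS models learn prosody from data and do not work this way. The lexicon, the emotion names, and the functions detect_emotion and to_ssml are hypothetical. The output is also a simplified SSML fragment: a fully conforming SSML document also declares version and namespace attributes on the speak element.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical cue lexicon. Real TTS systems learn prosody from data
# rather than from a hand-written keyword list like this one.
EMOTION_CUES = {
    "excited": {"great", "awesome", "congratulations", "amazing"},
    "apologetic": {"sorry", "unfortunately", "apologize"},
}

# Prosody settings per emotion, using standard SSML <prosody> attribute values.
PROSODY = {
    "excited": {"rate": "fast", "pitch": "high"},
    "apologetic": {"rate": "slow", "pitch": "low"},
    "neutral": {"rate": "medium", "pitch": "medium"},
}


def detect_emotion(text: str) -> str:
    """Return the first emotion whose cue words appear in the text, else 'neutral'."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    for emotion, cues in EMOTION_CUES.items():
        if words & cues:
            return emotion
    return "neutral"


def to_ssml(text: str) -> str:
    """Wrap (XML-escaped) text in a <prosody> element chosen from its detected emotion."""
    p = PROSODY[detect_emotion(text)]
    return (
        f'<speak><prosody rate="{p["rate"]}" pitch="{p["pitch"]}">'
        f"{escape(text)}</prosody></speak>"
    )


print(to_ssml("Congratulations, you did it!"))
```

Because "congratulations" is in the excited cue list, the example sentence is wrapped in fast, high-pitched prosody. Text with no matching cue falls back to neutral settings.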

OpenAI's Whisper speech recognition model (first released in 2022), for example, has improved recognition accuracy, particularly in noisy environments and across a wide range of accents. Speech-to-text gains like these are crucial for accurate voice command interpretation and transcription services. Similarly, advances in text-to-speech are making voice assistants more engaging and less robotic.
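
Speech recognition accuracy is conventionally reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words. The sketch below computes WER with a word-level edit distance. It is a minimal version that only lowercases and splits on whitespace. Published evaluations usually apply more thorough text normalization first. The function name word_error_rate is illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # At the start of each outer iteration, prev[j] is the edit distance between
    # the reference words seen so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or match when cost == 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("turn on the lights", "turn on lights"))  # one deletion out of four words
```

A recognizer that drops "the" from "turn on the lights" scores a WER of 0.25. Lower is better, and 0.0 means a perfect transcript.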

Contextual Understanding and Memory

One of the most significant advancements is the improved ability of voice assistants to understand context and maintain memory. This means they can now engage in more complex and natural conversations.

- Improved ability to maintain context across multiple turns in a conversation: The assistant remembers previous parts of the conversation, allowing for more fluid and relevant responses.

- Remembering past interactions within a session: This allows for a personalized experience tailored to the user's ongoing needs and preferences during a single session.

- Personalized responses based on user history: The voice assistant learns user preferences and adapts its responses accordingly, providing a more tailored and helpful experience over time.
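
Within-session memory is often implemented by resending recent conversation turns along with each new request. The sketch below keeps a sliding window of the last few exchanges and assembles them into role/content messages, the shape used by chat-style model APIs. The class name ConversationMemory and the max_turns window are hypothetical choices made for illustration. The sketch covers only memory within a session. Personalization from long-term user history would need persistent storage, which is not shown.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Hypothetical sliding-window memory for one conversation session."""

    system_prompt: str
    max_turns: int = 3  # illustrative window size
    _turns: deque = field(default_factory=deque, init=False, repr=False)

    def add_turn(self, user_text: str, assistant_text: str) -> None:
        """Store one user/assistant exchange, dropping the oldest beyond max_turns."""
        self._turns.append((user_text, assistant_text))
        while len(self._turns) > self.max_turns:
            self._turns.popleft()

    def build_messages(self, new_user_text: str) -> list[dict]:
        """Return system prompt + remembered turns + the new request, oldest first."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for user_text, assistant_text in self._turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": new_user_text})
        return messages


memory = ConversationMemory("You are a helpful voice assistant.", max_turns=2)
memory.add_turn("Set a timer for 10 minutes", "Timer set for 10 minutes.")
memory.add_turn("Add five more", "Timer extended to 15 minutes.")
print(memory.build_messages("How long is left?"))
```

With the earlier turns included, a follow-up such as "How long is left?" can be resolved against the timer mentioned two requests ago.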

OpenAI's models rely on transformer architectures to achieve this contextual awareness. Transformers are well suited to sequential data such as a conversation because their self-attention mechanism lets each new word draw on everything that came before it within the model's context window.
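
The core of that mechanism is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. Here it is combined with a causal mask, so each position can only look at itself and earlier positions. The sketch below implements it in plain Python. It is a deliberate simplification: a real model computes queries, keys, and values with learned projection matrices and stacks many heads and layers. Here the caller passes the vectors in directly. The function name causal_self_attention is hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    Each argument is a list of equal-length float vectors, one per position.
    Position t attends only to positions 0..t, the way a decoder reads a
    conversation left to right. Returns (outputs, attention_weights).
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for t, q in enumerate(queries):
        # Scores only for visible positions 0..t; later positions are masked out.
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d_k)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w * values[s][j] for s, w in enumerate(weights))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
print(weights)
```

Each row of weights sums to 1 and grows by one entry per position. This reflects the causal constraint that a token can use earlier context but never later context.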

Advanced Personalization and Customization

OpenAI is pushing the boundaries of personalization, making voice assistants truly adapt to individual users.

User-Specific Voice Profiles

Voice assistants can now offer an experience tailored to each individual user.

- Ability to adapt to individual speech patterns, preferences, and communication styles: The system learns the nuances of a user's voice and adapts to their communication style, making interactions feel more natural and personalized.

- Custom voice creation options: Users may have greater control over the voice assistant's personality, tone, and even accent, allowing for a customized and unique experience.

- Integration with other personal data sources (with user consent): This integration allows for even deeper personalization, but ethical considerations and data privacy remain paramount.

This level of personalization significantly enhances user experience and satisfaction, making the interaction feel more intuitive and less like interacting with a generic system. However, it's crucial to ensure ethical data handling and transparent user consent practices.
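
One way to make consent an explicit part of the design is to merge an external data source into the personalization context only after the user has opted in to it, and to stop using it as soon as consent is revoked. The sketch below shows that pattern. The UserProfile class, its fields, and the source names used in the example ("calendar", "location") are hypothetical. A real system would also need durable consent records, data minimization, and deletion handling.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical per-user profile: explicit preferences plus external data
    sources that are used only while the user has consented to them."""

    user_id: str
    preferences: dict = field(default_factory=dict)
    consented_sources: set = field(default_factory=set)

    def grant_consent(self, source: str) -> None:
        self.consented_sources.add(source)

    def revoke_consent(self, source: str) -> None:
        self.consented_sources.discard(source)

    def personalization_context(self, external_data: dict) -> dict:
        """Combine stored preferences with external data, keeping only
        the sources the user has opted into."""
        context = dict(self.preferences)
        for source, data in external_data.items():
            if source in self.consented_sources:
                context[source] = data
        return context


profile = UserProfile("user-123", preferences={"voice": "calm", "units": "metric"})
external = {"calendar": ["09:00 stand-up"], "location": "home"}
profile.grant_consent("calendar")
print(profile.personalization_context(external))  # location is excluded: no consent
```

Because consent is checked every time the context is built, revoking it takes effect on the very next request.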

Adaptive Learning and Behavior

Voice assistants are evolving beyond simple command execution; they're becoming proactive and adaptive.

- Learning user habits and preferences over time: The system observes user behavior and anticipates their needs based on past interactions and established patterns.

- Anticipating user needs: Instead of waiting for a command, the assistant can act on what it expects the user to want, making it a genuinely helpful tool rather than a purely reactive one.

- Proactive suggestions and reminders: This proactive assistance enhances efficiency and streamlines daily tasks.
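
A very simple way to learn habits is frequency counting: record which action the user takes at each hour of the day, then offer the most common one proactively once it has occurred often enough. The sketch below does exactly that. The HabitModel class, the hour-of-day bucketing, and the min_count threshold are illustrative assumptions. Production systems typically use richer signals (day of week, location, device) and learned models.

```python
from collections import Counter, defaultdict
from typing import Optional


class HabitModel:
    """Hypothetical habit tracker: counts actions per hour of day and suggests
    the most frequent one once it has been seen at least min_count times."""

    def __init__(self, min_count: int = 3):
        self.min_count = min_count
        self._counts = defaultdict(Counter)  # hour (0-23) -> Counter of actions

    def observe(self, hour: int, action: str) -> None:
        """Record that the user performed an action during the given hour."""
        self._counts[hour][action] += 1

    def suggest(self, hour: int) -> Optional[str]:
        """Return the habitual action for this hour, or None if none is frequent enough."""
        counts = self._counts.get(hour)  # .get avoids creating empty buckets
        if not counts:
            return None
        action, count = counts.most_common(1)[0]
        return action if count >= self.min_count else None


habits = HabitModel(min_count=3)
for _ in range(3):
    habits.observe(7, "read the news")
habits.observe(7, "play music")
print(habits.suggest(7))
```

The threshold keeps the assistant from turning a one-off request into an unwanted daily suggestion.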

Reinforcement learning and other machine learning techniques are key to enabling this adaptive behavior. The voice assistant learns from user feedback and interactions, continually improving its ability to anticipate needs and provide helpful suggestions. This leads to increased user engagement and productivity.
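
A minimal reinforcement-learning formulation of "learning from user feedback" is a multi-armed bandit. Each candidate suggestion is an arm, and a user accepting or dismissing it is the reward. The epsilon-greedy sketch below mostly offers the suggestion with the best observed acceptance rate and occasionally explores others. This is one simple technique chosen for illustration, not a description of how any particular assistant works. The SuggestionBandit class and the suggestion names are hypothetical.

```python
import random


class SuggestionBandit:
    """Epsilon-greedy bandit over candidate suggestions.
    Reward convention (an assumption): 1.0 = accepted, 0.0 = dismissed."""

    def __init__(self, suggestions, epsilon: float = 0.1, seed=None):
        self.suggestions = list(suggestions)
        self.epsilon = epsilon
        self.counts = {s: 0 for s in self.suggestions}
        self.values = {s: 0.0 for s in self.suggestions}  # running mean reward
        self._rng = random.Random(seed)

    def choose(self) -> str:
        # Explore with probability epsilon; otherwise exploit the best estimate
        # (ties go to the earliest suggestion in the list).
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.suggestions)
        return max(self.suggestions, key=lambda s: self.values[s])

    def update(self, suggestion: str, reward: float) -> None:
        # Incremental mean update: V <- V + (r - V) / n
        self.counts[suggestion] += 1
        n = self.counts[suggestion]
        self.values[suggestion] += (reward - self.values[suggestion]) / n


bandit = SuggestionBandit(["weather briefing", "traffic update", "news summary"], seed=0)
choice = bandit.choose()
bandit.update(choice, 1.0)  # the user accepted the suggestion
```

The epsilon parameter trades off exploiting what already works against occasionally trying alternatives, so the assistant can notice when a user's preferences change.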

Expanding Integration and Interoperability

OpenAI's advancements are fostering seamless integration across multiple platforms and devices.

Seamless Integration with Smart Home Devices

OpenAI's technology is making voice assistants the central control hub for the smart home.

- Improved compatibility with a wider range of smart home devices: This broader compatibility simplifies smart home management.

- Enhanced control and automation capabilities: Users can control multiple devices with voice commands, automating tasks and improving home efficiency.

- Unified voice control across multiple devices: This streamlined control eliminates the need for multiple apps or interfaces, simplifying user interaction.

Integration with smart home platforms such as Google Home, Amazon Alexa, and Apple HomeKit further expands the capabilities and accessibility of voice-controlled devices.
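
Unified voice control can be sketched as a single command parser that applies one utterance to every matching device, whatever ecosystem the device comes from. Everything in the sketch below is hypothetical: the Device and SmartHomeHub classes, the in-memory device list, and the regular-expression grammar. The grammar only understands commands of the form "turn on/off [the] [room] lights/plugs" with single-word room names. A real assistant would use a language model or NLU service for intent parsing and each platform's own API to switch the devices. Those APIs are not shown.

```python
import re
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    room: str   # stored lowercase, e.g. "kitchen"
    kind: str   # e.g. "light", "plug"
    on: bool = False


class SmartHomeHub:
    """Hypothetical hub that maps one spoken command onto all matching devices."""

    # Groups: 1 = on/off, 2 = optional single-word room, 3 = device kind.
    COMMAND = re.compile(r"turn (on|off) (?:the |all )?(?:(\w+) )?(lights?|plugs?)", re.I)

    def __init__(self, devices):
        self.devices = list(devices)

    def handle(self, utterance: str) -> list[str]:
        """Apply the command and return the names of the devices it changed."""
        m = self.COMMAND.search(utterance)
        if not m:
            return []
        turn_on = m.group(1).lower() == "on"
        room = m.group(2).lower() if m.group(2) else None
        kind = m.group(3).lower().rstrip("s")  # "lights" -> "light"
        changed = []
        for device in self.devices:
            if device.kind == kind and (room is None or device.room == room):
                device.on = turn_on
                changed.append(device.name)
        return changed


hub = SmartHomeHub([
    Device("Kitchen ceiling", "kitchen", "light"),
    Device("Desk lamp", "office", "light"),
    Device("Coffee maker", "kitchen", "plug"),
])
print(hub.handle("Turn on the lights"))  # both lights, in any room
```

When the command names no room, it applies to every matching device in the house, which is the "one command, many devices" behavior described above.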

Cross-Platform Compatibility and Accessibility

OpenAI's focus extends to making voice assistant technology accessible to everyone.

- Support for various operating systems and devices: Users can access voice assistant functionalities regardless of their preferred devices or operating systems.

- Improved accessibility features for users with disabilities (e.g., compatibility with screen readers): Voice assistants become more inclusive, providing equal access to technology for individuals with disabilities.

- Integration with other apps and services: Seamless interaction with other apps expands functionality and creates a more cohesive digital experience.

Cross-platform compatibility and accessibility features are vital for creating a truly inclusive and user-friendly experience. The wider adoption of these features ensures that voice assistant technology benefits a broader range of users.

Conclusion

OpenAI's 2024 announcements represent a monumental shift in voice assistant development. The advancements in NLP, personalization, and integration promise a future where voice assistants are not just tools, but intuitive, intelligent partners in our daily lives. These breakthroughs are redefining the possibilities of voice assistant technology, leading to more efficient, engaging, and personalized experiences. To stay ahead in this rapidly evolving field, explore OpenAI's resources and learn how you can leverage these advancements to revolutionize your own voice assistant development projects. The future of voice assistant development is bright, and OpenAI is leading the charge.

Featured Posts

-

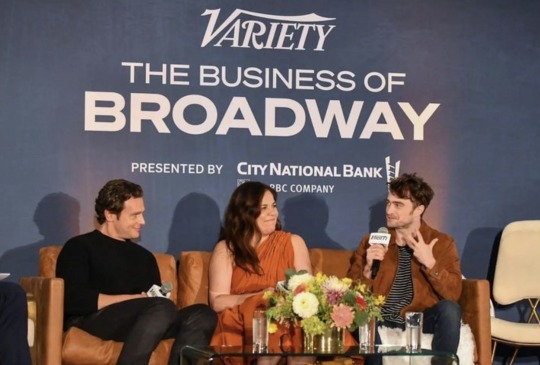

Jonathan Groffs Just In Time A Night Of Support From Famous Friends

May 23, 2025

Jonathan Groffs Just In Time A Night Of Support From Famous Friends

May 23, 2025 -

Roger Daltreys Hearing And Sight The Impact Of Age On The Who Legend

May 23, 2025

Roger Daltreys Hearing And Sight The Impact Of Age On The Who Legend

May 23, 2025 -

Ooredoo And Qtspbf A Winning Partnership Continues

May 23, 2025

Ooredoo And Qtspbf A Winning Partnership Continues

May 23, 2025 -

Jonathan Groff Eyes Tony Award Glory With Just In Time

May 23, 2025

Jonathan Groff Eyes Tony Award Glory With Just In Time

May 23, 2025 -

Colours Of Time Cedric Klapisch Film Sold By Studiocanal At Cannes

May 23, 2025

Colours Of Time Cedric Klapisch Film Sold By Studiocanal At Cannes

May 23, 2025

Latest Posts

-

Jonathan Groff Eyes Tony Award Glory With Just In Time

May 23, 2025

Jonathan Groff Eyes Tony Award Glory With Just In Time

May 23, 2025 -

Is Jonathan Groffs Just In Time Performance Tony Worthy

May 23, 2025

Is Jonathan Groffs Just In Time Performance Tony Worthy

May 23, 2025 -

Jonathan Groff Tony Award Nomination Potential For Just In Time

May 23, 2025

Jonathan Groff Tony Award Nomination Potential For Just In Time

May 23, 2025 -

Jonathan Groff And Just In Time A Tony Awards Prediction

May 23, 2025

Jonathan Groff And Just In Time A Tony Awards Prediction

May 23, 2025 -

Could Jonathan Groff Make Tony Awards History With Just In Time

May 23, 2025

Could Jonathan Groff Make Tony Awards History With Just In Time

May 23, 2025