The Dangers Of AI Therapy: Surveillance And Control In A Police State

Imagine a future where your thoughts and emotions, revealed through AI-powered therapy, are used not for healing, but for control. This isn't science fiction; the potential for AI therapy to become a tool of surveillance and oppression in a police state is a chilling reality we must confront. This article explores the frightening implications of AI therapy surveillance and the urgent need for ethical safeguards.

<h2>Data Privacy Concerns in AI Therapy</h2>

The allure of AI-powered mental health solutions is undeniable. Apps and platforms promise personalized support, readily available resources, and data-driven insights into mental well-being. However, this convenience comes at a cost: our personal data. The data collected through AI therapy apps and platforms is highly sensitive, and its vulnerability is what makes AI therapy surveillance possible.

- Lack of robust data encryption and security protocols: Many AI therapy platforms lack the robust encryption and security measures needed to protect sensitive patient information. This leaves personal data vulnerable to hacking and data breaches.

- Potential for data breaches and unauthorized access: A data breach could expose highly sensitive information like emotional states, personal struggles, and treatment plans, leading to significant harm and privacy violations.

- Unclear terms of service regarding data ownership and usage: Often, terms of service are opaque, leaving users unclear about how their data will be used, stored, and potentially shared. This lack of transparency is a major impediment to informed consent.

- The potential for data to be sold or used for targeted advertising without user consent: Data collected through AI therapy could be incredibly valuable for targeted advertising, creating a conflict of interest for companies handling sensitive mental health data.

- The lack of transparency in data processing methods: Users often lack visibility into how their data is processed by AI algorithms, raising concerns about potential biases and misuse.

These issues highlight the critical need for stronger data security in AI therapy and for robust data protection legislation that covers AI. The risks are too great to ignore.
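One way a platform could reduce the exposure described above is to keep real identifiers out of stored session records. The following is a minimal sketch, not a description of how any existing AI therapy product works: it replaces a user identifier with a keyed hash (HMAC-SHA256) using only the Python standard library. The function name `pseudonymize` and the sample identifier are hypothetical, and pseudonymization is a complement to encryption, not a substitute for it.

```python
import hashlib
import hmac
import secrets


def pseudonymize(user_id: str, key: bytes) -> str:
    """Return a keyed hash of user_id (hypothetical helper).

    Without the secret key, the token cannot be recomputed from a
    guessed identifier, so leaked records are harder to link to people.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption: the key is generated once and stored separately from the data.
key = secrets.token_bytes(32)

# "user-123" is a made-up identifier used only for illustration.
token = pseudonymize("user-123", key)
```

The same identifier always maps to the same token under one key, so records can still be grouped per user, while anyone who obtains the records without the key cannot recompute the mapping.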

<h2>The Potential for Governmental Surveillance and Control</h2>

Governmental surveillance using AI therapy data is perhaps the most alarming risk of all. The ability of AI algorithms to analyze vast quantities of personal data opens the door to unprecedented levels of mass surveillance and social control.

- AI algorithms identifying individuals deemed "at risk" based on emotional patterns: Algorithms might flag individuals exhibiting certain emotional patterns as potentially "unstable" or "dangerous," leading to preemptive surveillance and potential human rights abuses.

- Preemptive arrests or interventions based on AI analysis of therapy sessions: This scenario raises serious questions about due process and the potential for wrongful accusations and imprisonment based on algorithmic assessments.

- Use of AI-generated profiles to monitor and control dissent or opposition: Governments could use AI-generated profiles to identify and suppress dissent by monitoring individuals' emotional states and identifying potential threats to authority.

- The chilling effect on freedom of speech and expression when people fear their thoughts are monitored: The constant fear of surveillance can stifle open communication and self-expression, leading to a climate of fear and self-censorship.

- Integration of AI therapy data with other surveillance systems: AI therapy data could easily be integrated with existing surveillance networks, creating a comprehensive system of mass surveillance with far-reaching implications.

The potential for an AI surveillance state demands urgent attention to the ethical implications of AI under authoritarianism and the development of safeguards to prevent its misuse.

<h3>Bias and Discrimination in AI Therapy Algorithms</h3>

The algorithms powering AI therapy systems are trained on data, and if this data reflects existing societal biases, the AI is likely to perpetuate and even amplify those biases. Such algorithmic discrimination in therapy poses a serious threat to fairness and equity in mental health care.

- Bias in training data perpetuating existing societal inequalities: If the training data overrepresents certain demographics or viewpoints, the resulting AI system will likely be biased against underrepresented groups.

- AI algorithms misinterpreting or mischaracterizing certain emotional states or behaviors: Cultural differences in expressing emotions can lead to misinterpretations by algorithms trained on limited datasets.

- Discriminatory targeting of specific demographics based on AI analysis: Biased algorithms could lead to disproportionate targeting of certain groups for surveillance or intervention, exacerbating existing inequalities.

- Lack of oversight and accountability in algorithm development and deployment: The lack of transparency and accountability in the development and deployment of these algorithms makes it difficult to identify and address biases.

Addressing AI bias in mental health care requires careful consideration of the data used to train algorithms and ongoing monitoring for discriminatory outcomes.
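Monitoring for discriminatory outcomes can start with something as simple as comparing how often a system flags people in different groups. The sketch below is illustrative only: the group labels, the `(group, flagged)` record layout, and the function names are assumptions, and the sample records are made up rather than drawn from any real system.

```python
from collections import defaultdict


def flag_rates_by_group(records):
    """Return the share of flagged records for each group.

    records: iterable of (group, flagged) pairs (hypothetical layout).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest flag rate; 1.0 means equal rates."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return 1.0 if highest == 0 else lowest / highest


# Made-up records for illustration: group "B" is flagged twice as often as "A".
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = flag_rates_by_group(sample)
ratio = disparity_ratio(rates)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is the kind of signal that should trigger a closer human review of the training data and the algorithm's behavior.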

<h2>The Erosion of Trust and the Doctor-Patient Relationship</h2>

The potential for AI surveillance fundamentally undermines the sanctity of the therapeutic relationship. The very foundation of effective therapy is trust – trust between patient and therapist. AI therapy surveillance erodes this trust.

- Patients less likely to disclose sensitive information if they fear surveillance: Knowing their thoughts and feelings are being monitored will undoubtedly deter patients from fully engaging in therapy.

- Potential for therapists to be influenced by AI algorithms and data analysis: Therapists may become unduly influenced by AI recommendations, potentially compromising their clinical judgment.

- Weakening of the patient’s autonomy and self-determination: AI-driven interventions can undermine a patient's autonomy and ability to make informed decisions about their own care.

- Ethical dilemmas for therapists regarding data sharing and patient confidentiality: Therapists face difficult ethical dilemmas when navigating the complexities of data sharing and patient confidentiality in the context of AI-powered therapy.

Protecting patient confidentiality in AI systems and fostering trust in AI healthcare are paramount to ensuring ethical and effective mental health care. The ethical implications of AI therapy must be at the forefront of all development and deployment.

<h2>Conclusion</h2>

The potential for AI therapy to be weaponized for surveillance and control in a police state is a serious threat to individual liberty and privacy. The lack of robust data protection, potential for algorithmic bias, and erosion of trust in the therapeutic relationship raise profound ethical concerns. We must advocate for strong regulations, transparency in data handling, and ethical guidelines to prevent AI therapy from becoming a tool of oppression. We need a proactive approach to ensure the ethical development and deployment of AI therapy, safeguarding individual rights and preventing the creation of an AI-powered surveillance state. Let's work together to prevent the misuse of AI therapy for surveillance and protect our fundamental freedoms.

Featured Posts

-

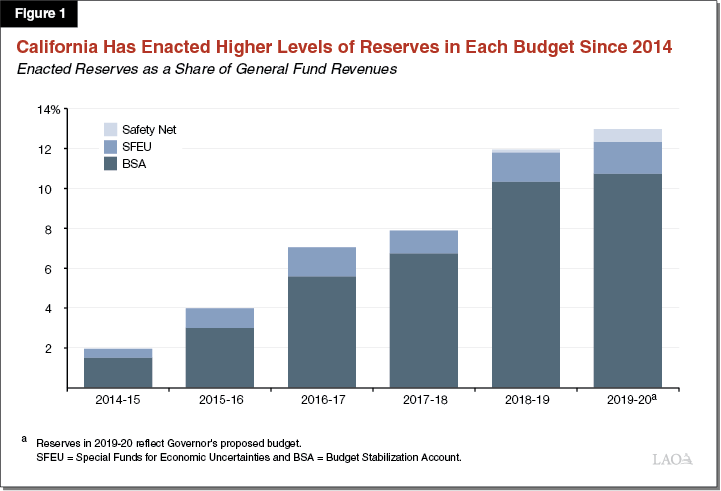

Trumps Trade Policies And Californias Budget A 16 Billion Deficit

May 16, 2025

Trumps Trade Policies And Californias Budget A 16 Billion Deficit

May 16, 2025 -

Bmw And Porsches China Challenges A Broader Industry Issue

May 16, 2025

Bmw And Porsches China Challenges A Broader Industry Issue

May 16, 2025 -

Los Angeles Wildfires And The Growing Market For Disaster Gambling

May 16, 2025

Los Angeles Wildfires And The Growing Market For Disaster Gambling

May 16, 2025 -

En Directo Venezia Napoles

May 16, 2025

En Directo Venezia Napoles

May 16, 2025 -

Anthony Edwards Baby Mama Reacts To Reported Lack Of Parental Involvement

May 16, 2025

Anthony Edwards Baby Mama Reacts To Reported Lack Of Parental Involvement

May 16, 2025