The Limitations of AI Learning: A Guide to Responsible Implementation

Data Dependency and Bias in AI Learning

AI models are fundamentally data-driven. The quality, quantity, and characteristics of the data used to train them largely determine their performance and reliability. Three related limitations follow: biased data, scarce data, and noisy data.

The Problem of Biased Datasets

AI algorithms learn patterns from data. If that data reflects existing societal biases, the resulting system is likely to reproduce those biases and can even amplify them. This is a central concern for responsible AI.

- Facial recognition systems: Systems trained mainly on images of lighter-skinned faces have shown markedly lower accuracy for people with darker skin tones, a clear example of how a skewed dataset degrades performance for underrepresented groups.

- Loan application processing: AI systems trained on historical loan data that reflects past lending biases can unfairly disadvantage certain demographic groups, carrying those inequalities forward.

- Solutions: Addressing biased datasets requires several complementary measures: data augmentation to increase the representation of underrepresented groups, careful data curation to identify and remove biased samples, and algorithmic fairness techniques that reduce bias in the model's decision-making. Together, these are how AI ethics principles are put into practice.
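As one concrete instance of an algorithmic fairness technique, the sketch below implements reweighing, the preprocessing method of Kamiran and Calders: each training example gets a weight so that, in the weighted data, the label is statistically independent of group membership. The toy loan records and the `(group, label)` layout are hypothetical; this is an illustration under those assumptions, not a complete fairness pipeline.

```python
from collections import Counter


def reweigh(records):
    """Return one weight per (group, label) record.

    weight = P(group) * P(label) / P(group, label), so that in the
    weighted data the label no longer depends on group membership.
    """
    n = len(records)
    group_counts = Counter(g for g, _ in records)
    label_counts = Counter(y for _, y in records)
    joint_counts = Counter(records)
    weights = []
    for g, y in records:
        # Count this (group, label) pair would have if label were independent of group.
        expected = group_counts[g] * label_counts[y] / n
        observed = joint_counts[(g, y)]
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(records, weights, group):
    """Share of weighted examples in `group` that carry the positive label 1."""
    num = sum(w for (g, y), w in zip(records, weights) if g == group and y == 1)
    den = sum(w for (g, _), w in zip(records, weights) if g == group)
    return num / den


# Hypothetical historical approvals: group A approved 3 of 4, group B 1 of 4.
records = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
           ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
weights = reweigh(records)
print(weights)
print(weighted_positive_rate(records, weights, "A"),
      weighted_positive_rate(records, weights, "B"))
```

After reweighing, both groups have a weighted approval rate of 0.5 (up to floating-point rounding). The weights would then be passed to a learner that accepts per-sample weights, such as the `sample_weight` argument of many scikit-learn estimators' `fit` methods.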

Data Scarcity and its Impact

Insufficient data is another major hurdle: an AI model's accuracy and ability to generalize depend heavily on how much good-quality training data is available.

- Rare diseases: Building AI to diagnose and treat rare diseases is difficult because, by definition, few patient cases exist to learn from.

- Strategies: To address data scarcity, researchers use techniques such as data synthesis (creating artificial data), transfer learning (adapting models trained on large datasets to smaller ones), and federated learning (training models across decentralized data sources without sharing the raw data).
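Federated learning is the least intuitive of these strategies, so here is a minimal sketch of its aggregation step in the style of federated averaging (FedAvg): each client trains locally and sends only its model parameters, which the server combines in an average weighted by local dataset size. The parameter vectors, client sizes, and function name are hypothetical; real systems add many training rounds, client sampling, and secure aggregation.

```python
def federated_average(client_params, client_sizes):
    """Combine client parameter vectors into one global vector.

    Each client's parameters are weighted by its number of local training
    examples; raw data never leaves the client, only these vectors do.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, size in zip(client_params, client_sizes):
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter shape")
        for i, p in enumerate(params):
            global_params[i] += p * size / total
    return global_params


# Two hypothetical hospitals with 100 and 300 local records.
print(federated_average([[1.0, 2.0], [3.0, 0.0]], [100, 300]))  # prints [2.5, 0.5]
```

Weighting by dataset size means a site with more records pulls the shared model further toward its local solution, which is the usual FedAvg design choice.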

Data Quality and Noise

Inaccurate, incomplete, or noisy data severely hampers AI learning and leads to unreliable predictions. High-quality data is a prerequisite for trustworthy AI.

- Incorrect data labeling: Errors in training labels teach the model wrong associations, which then surface as inaccurate predictions. Recording how labels were produced and checked also supports AI transparency.

- Data auditing and cleaning: Regular audits and rigorous cleaning are crucial to maintain data quality and limit the impact of noise. A clean, well-documented dataset also makes a model's behavior easier to trace and explain.
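A basic audit of the kind described above can be sketched in a few lines. This version assumes a hypothetical row format of `(features, label)` with `None` marking a missing value, and reports three common problems: rows with missing values, exact duplicate rows, and identical feature vectors carrying different labels (a frequent symptom of labeling errors).

```python
from collections import defaultdict


def audit(rows):
    """Report basic data-quality problems in (features, label) rows.

    `features` is a tuple; None marks a missing value.
    """
    missing = sum(1 for features, label in rows
                  if label is None or any(v is None for v in features))
    seen = set()
    duplicates = 0
    labels_by_features = defaultdict(set)
    for features, label in rows:
        if (features, label) in seen:
            duplicates += 1
        seen.add((features, label))
        if label is not None:
            labels_by_features[features].add(label)
    # Same inputs, different labels: at least one of them is likely wrong.
    conflicts = [f for f, labels in labels_by_features.items() if len(labels) > 1]
    return {"missing": missing, "duplicates": duplicates,
            "conflicting_labels": conflicts}


rows = [
    ((5.1, 3.5), "cat"),
    ((5.1, 3.5), "cat"),   # exact duplicate
    ((6.0, None), "dog"),  # missing feature value
    ((4.9, 3.0), "cat"),
    ((4.9, 3.0), "dog"),   # conflicting label
]
print(audit(rows))
# prints {'missing': 1, 'duplicates': 1, 'conflicting_labels': [(4.9, 3.0)]}
```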

Explainability and Transparency Challenges in AI

Many powerful AI models, particularly deep neural networks, operate as "black boxes." Their decision-making processes are opaque and difficult to understand, posing significant challenges for AI implementation.

The "Black Box" Problem

The lack of transparency in complex AI models hinders trust and accountability. Understanding why an AI system arrived at a particular decision is vital, especially in high-stakes applications.

- Erosion of trust: Opaque AI systems can erode public trust and hinder widespread adoption.

- Explainable AI (XAI): XAI techniques aim to improve the interpretability of AI models, offering insights into their internal workings and decision-making processes. This is vital for responsible AI implementation.
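Permutation feature importance is one simple, model-agnostic XAI technique (chosen here as an example; the text does not name a specific method): shuffle one input feature and measure how much the model's accuracy drops. A large drop suggests the model relies on that feature. The `model` below is a hypothetical stand-in for any black-box predictor.

```python
import random


def accuracy(model, X, y):
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def model(x):
    """Hypothetical black box: it only ever looks at feature 0."""
    return 1 if x[0] > 0.5 else 0


X = [[float(i % 2), i / 10] for i in range(10)]
y = [model(x) for x in X]
print(permutation_importance(model, X, y))  # feature 1 scores exactly 0.0
```

Because it only needs predictions, this approach works on any model, but it explains global reliance on features rather than why a single decision was made.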

Difficulty in Debugging and Error Detection

Identifying and correcting errors in complex AI systems can be extremely difficult, because a faulty output rarely traces back to a single line of code or training example. This is a major practical limitation.

- High-stakes applications: In areas like healthcare and autonomous driving, errors can have severe consequences.

- Robust testing and validation: Rigorous testing and validation procedures are needed to find and mitigate errors before deployment, and they are a prerequisite for reliable, safe AI.
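One piece of such a validation procedure can be sketched as a pre-deployment gate: compute accuracy on held-out data separately for each slice (for example, a demographic group or a driving condition) and block release if any slice falls below a threshold. The slice names, the 0.9 threshold, and the function names are hypothetical choices.

```python
from collections import defaultdict


def slice_accuracies(predictions, labels, slices):
    """Accuracy computed separately for each slice value."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label, s in zip(predictions, labels, slices):
        total[s] += 1
        correct[s] += int(pred == label)
    return {s: correct[s] / total[s] for s in total}


def release_gate(predictions, labels, slices, min_accuracy=0.9):
    """Return (ok, failing_slices); ok is False if any slice is below min_accuracy."""
    acc = slice_accuracies(predictions, labels, slices)
    failing = sorted(s for s, a in acc.items() if a < min_accuracy)
    return (not failing, failing)


preds = [1, 0, 1, 1, 1, 0, 0, 0]
labels = [1, 0, 1, 1, 1, 1, 1, 0]
slices = ["day"] * 4 + ["night"] * 4
print(release_gate(preds, labels, slices))  # prints (False, ['night'])
```

Overall accuracy here is 0.75, which could look acceptable on its own; checking per slice reveals that the model fails on night-time inputs specifically.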

Generalization and Adaptability Limitations

AI models are trained on specific datasets and may struggle to generalize to new, unseen data or to adapt when their environment changes.

Overfitting and Underfitting

- Overfitting: A model that overfits performs well on the training data but poorly on new, unseen data.

- Underfitting: A model that underfits is too simplistic to capture the complexities of the data.

- Mitigation: Careful model selection, regularization techniques, and cross-validation help mitigate these issues.
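The sketch below combines two of these mitigations: it fits a one-feature ridge regression (L2-regularized least squares with no intercept, chosen for brevity) and uses k-fold cross-validation to pick the regularization strength `lam`. The data and candidate `lam` values are hypothetical, and the folds are contiguous for simplicity; in practice data is usually shuffled before splitting.

```python
def fit_ridge_1d(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * w^2; closed form for a single weight."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cross_val_error(xs, ys, lam, k=5):
    """Average validation MSE over k contiguous folds."""
    n = len(xs)
    fold_errors = []
    for f in range(k):
        lo, hi = f * n // k, (f + 1) * n // k
        # Train on everything outside the fold, evaluate on the held-out fold.
        w = fit_ridge_1d(xs[:lo] + xs[hi:], ys[:lo] + ys[hi:], lam)
        fold_errors.append(mse(w, xs[lo:hi], ys[lo:hi]))
    return sum(fold_errors) / k


def choose_lambda(xs, ys, candidates, k=5):
    return min(candidates, key=lambda lam: cross_val_error(xs, ys, lam, k))


xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]  # noise-free toy data
print(choose_lambda(xs, ys, [0.0, 1.0, 10.0]))  # prints 0.0
```

On noise-free data the unregularized fit wins, as expected. On small, noisy datasets a larger `lam` often gives lower cross-validated error, because shrinking the weight keeps the model from fitting the noise.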

Limited Adaptability to New Environments

AI models often lack the ability to adapt to dynamic environments and unforeseen circumstances.

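- Reinforcement learning and continual learning: Reinforcement learning trains an agent through trial-and-error interaction with its environment, while continual learning aims to let a model keep learning from new data without forgetting what it learned earlier. Both approaches aim to make AI systems more adaptable and robust.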
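To make the reinforcement learning idea concrete, here is a minimal tabular Q-learning sketch on a hypothetical four-state corridor: the agent learns from rewards received while acting in its environment rather than from a fixed labeled dataset. The environment, reward, and hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions; continual learning is not shown.

```python
import random

N_STATES, GOAL = 4, 3  # states 0..3; reaching state 3 ends an episode
LEFT, RIGHT = 0, 1


def step(state, action):
    """Hypothetical corridor: move left or right; reward 1 only at the goal."""
    nxt = min(state + 1, GOAL) if action == RIGHT else max(state - 1, 0)
    return nxt, (1.0 if nxt == GOAL else 0.0)


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice([LEFT, RIGHT])  # explore
            else:
                # Exploit the current estimates, breaking ties randomly.
                action = max((LEFT, RIGHT), key=lambda a: (q[state][a], rng.random()))
            nxt, reward = step(state, action)
            # Q-learning update: move toward reward + discounted best next value.
            target = reward if nxt == GOAL else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if state == GOAL:
                break
    return q


q = q_learning()
policy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(GOAL)]
print(policy)  # prints ['right', 'right', 'right']
```

If the environment changed (say, the goal moved), the same update rule would keep revising `q` from new experience, which is the sense in which reinforcement learning supports adaptation.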

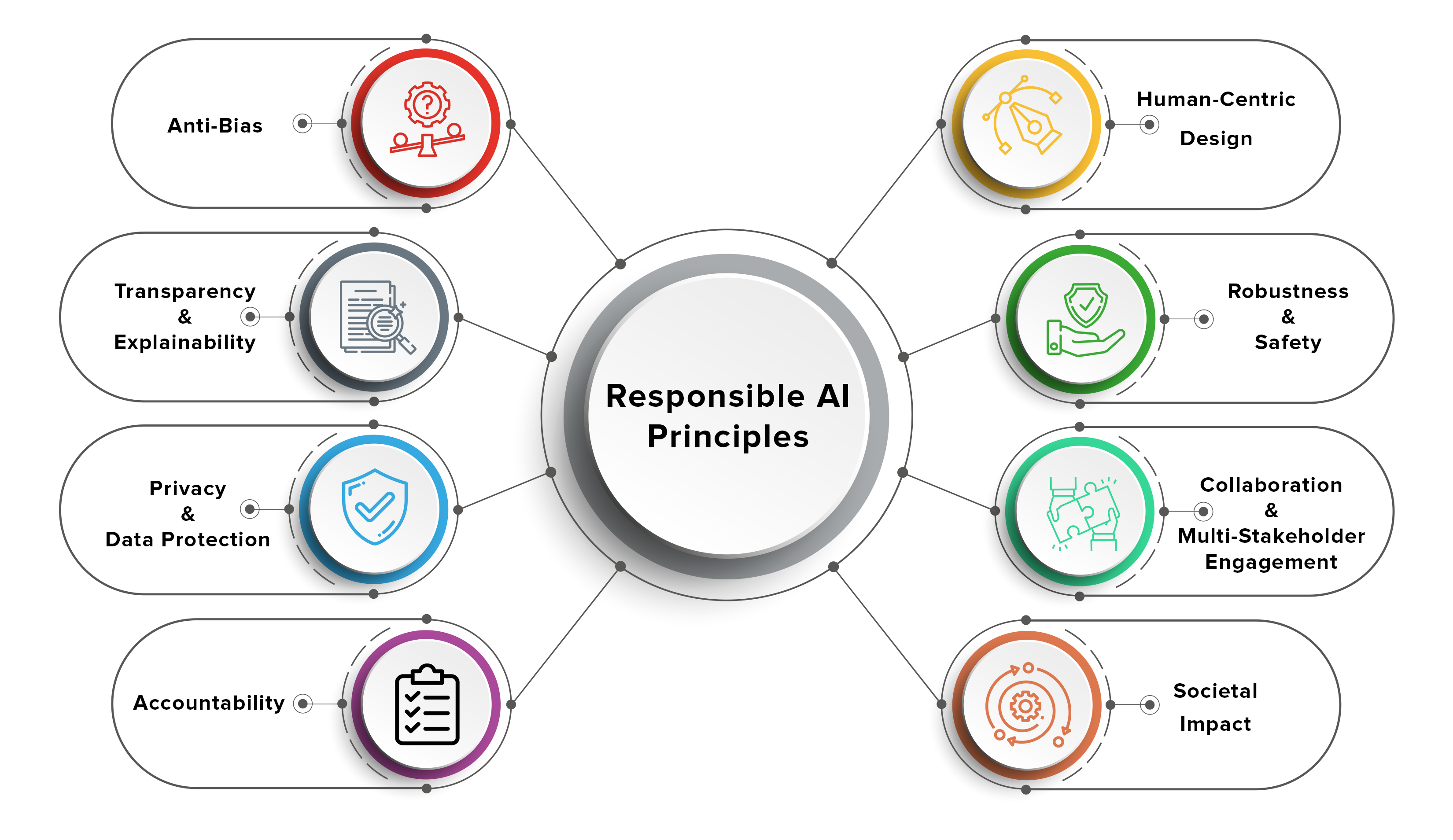

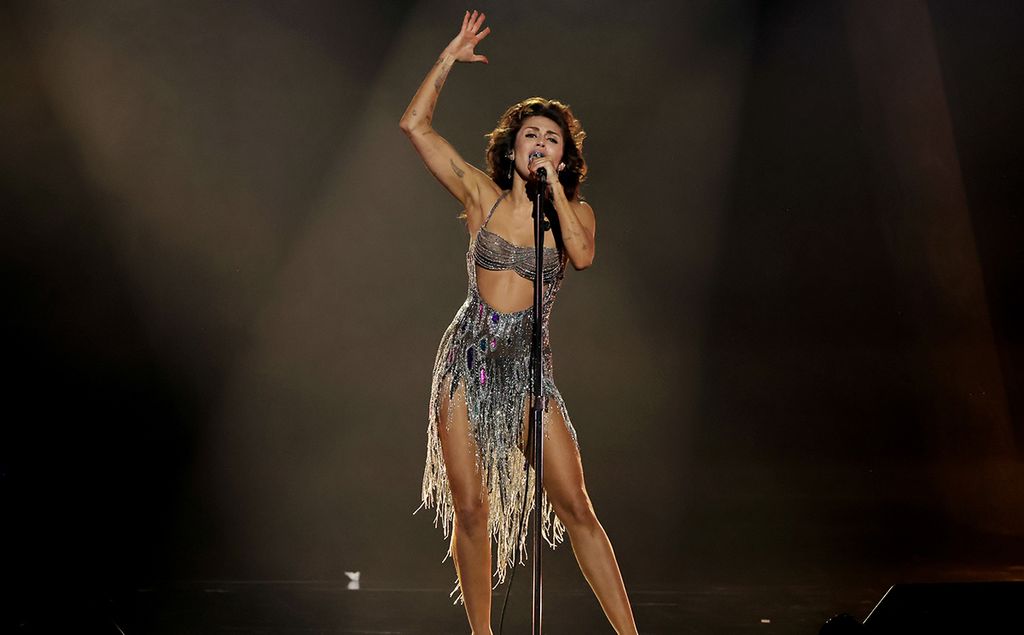

Ethical Considerations in AI Implementation

The deployment of AI systems raises several ethical concerns, particularly around bias, privacy, and security, which underlines the importance of responsible AI.

Algorithmic Bias and Discrimination

As discussed previously, biased data leads to biased algorithms, resulting in unfair or discriminatory outcomes.

- Ethical guidelines and regulations: Establishing clear ethical guidelines and regulations is essential to prevent and mitigate algorithmic bias.

Privacy and Security Concerns

AI systems frequently handle sensitive personal data, raising significant privacy and security concerns.

- Data anonymization, encryption, and robust security measures: Removing or masking identifiers, encrypting stored and transmitted data, and enforcing strict access controls help protect sensitive data and prevent unauthorized access.
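As a small example of the data-protection side, the sketch below pseudonymizes a direct identifier with a keyed hash (HMAC-SHA-256 from Python's standard library) before records reach a training pipeline. This is pseudonymization rather than full anonymization: anyone holding the key can re-link records, and the remaining fields may still identify a person. The record schema (`patient_id`, `name`, `age`) and the key handling are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (same input gives same token)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, key: bytes) -> dict:
    """Hypothetical schema: 'patient_id' is pseudonymized and 'name' is dropped."""
    cleaned = {k: v for k, v in record.items() if k not in ("name", "patient_id")}
    cleaned["patient_token"] = pseudonymize(record["patient_id"], key)
    return cleaned


# In practice the key would come from a secrets manager, not source code.
KEY = b"example-key-do-not-use"
record = {"patient_id": "P-1042", "name": "Jane Doe", "age": 57}
print(strip_identifiers(record, KEY))
```

A keyed hash is used instead of a plain hash because short identifiers can be recovered from an unkeyed hash by simply hashing every possible ID.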

Conclusion

This guide has explored key limitations of AI learning: data dependency, explainability challenges, generalization issues, and ethical concerns. Understanding these limitations is essential for responsible AI implementation. By acknowledging these constraints and addressing them proactively through careful data management, algorithmic transparency, robust testing, and a strong ethical framework, organizations can benefit from AI while limiting its risks. Responsible implementation is what allows AI to deliver on its potential without causing avoidable harm.

Featured Posts

-

Bernard Kerik 9 11 Nyc Police Commissioner Dead At 69

May 31, 2025

Bernard Kerik 9 11 Nyc Police Commissioner Dead At 69

May 31, 2025 -

March 26th Remembering The Francis Scott Key Bridge Collapse In Baltimore

May 31, 2025

March 26th Remembering The Francis Scott Key Bridge Collapse In Baltimore

May 31, 2025 -

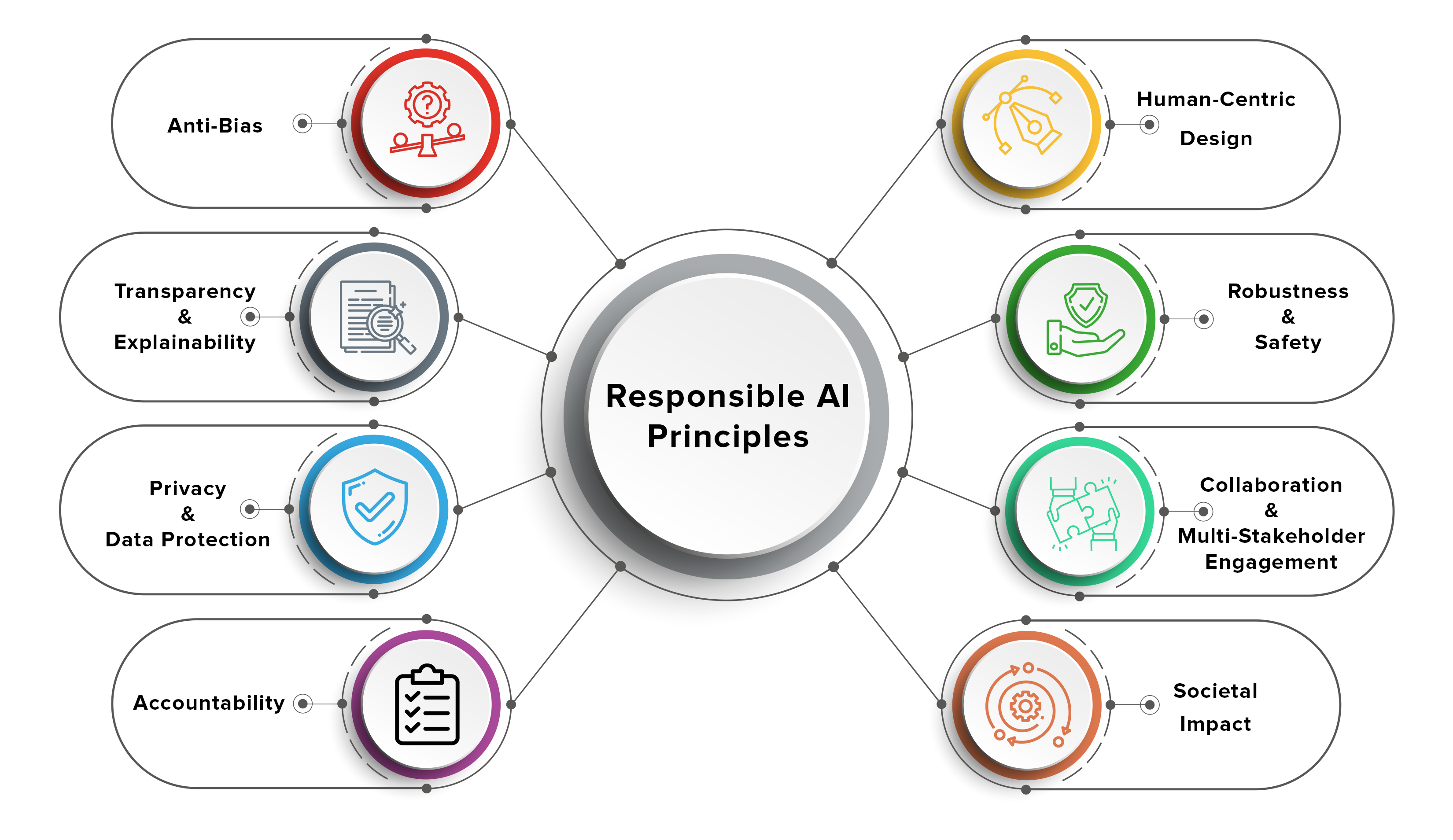

Pengaruh Budaya Pada Busana Miley Cyrus Sebuah Analisis

May 31, 2025

Pengaruh Budaya Pada Busana Miley Cyrus Sebuah Analisis

May 31, 2025 -

Munichs Bmw Open 2025 Zverev Battles Griekspoor In Quarter Finals

May 31, 2025

Munichs Bmw Open 2025 Zverev Battles Griekspoor In Quarter Finals

May 31, 2025 -

Solve The Nyt Mini Crossword March 16 2025 Answers And Hints

May 31, 2025

Solve The Nyt Mini Crossword March 16 2025 Answers And Hints

May 31, 2025