Voice Assistant Creation: OpenAI's 2024 Developer Event Highlights

Enhanced Natural Language Processing (NLP) for Voice Assistants

The core of any successful voice assistant lies in its ability to understand and respond to human speech. OpenAI's 2024 event highlighted significant leaps forward in Natural Language Processing (NLP) specifically tailored for voice assistant development.

Improved Speech-to-Text Accuracy

Converting spoken words into text accurately is paramount for voice assistant functionality. OpenAI showcased impressive improvements in this area:

- Reduced error rates: The new models demonstrated a significant reduction in transcription errors, leading to more accurate voice command interpretation. OpenAI did not release specific figures; early tests suggest a potential 15-20% improvement over previous versions, but that estimate remains unofficial.

- Improved handling of accents and dialects: The enhanced models exhibit better comprehension of diverse accents and dialects, making voice assistants more accessible to a global audience. This is a crucial step towards inclusivity in voice technology.

- Better noise cancellation capabilities: OpenAI's advancements filter out background noise more effectively, ensuring accurate transcription even in noisy environments. This significantly improves the reliability of voice assistants in real-world scenarios.

These advancements are largely attributed to improvements in the Whisper speech recognition model and the introduction of new, more robust training datasets.
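Transcription accuracy of this kind is commonly measured as word error rate (WER): the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. The sketch below shows that calculation and how a relative improvement between two versions would be derived. It is an illustration only; the function names and sample sentences are assumptions, not something presented at the event.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_improvement(old_wer: float, new_wer: float) -> float:
    """Fraction by which the error rate dropped relative to the old value."""
    if old_wer <= 0:
        raise ValueError("old_wer must be positive")
    return (old_wer - new_wer) / old_wer


# Hypothetical sample: one dropped word and one substituted word out of five.
wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
```

Under this definition, a model whose WER falls from 0.10 to 0.08 has improved by 20% in relative terms, which is one plausible reading of a percentage improvement claim.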

Contextual Understanding and Dialogue Management

Beyond accurate transcription, understanding context is key for natural, engaging conversations. OpenAI's innovations in contextual understanding and dialogue management are transformative:

- Improved memory: Voice assistants can now retain more information from previous interactions, such as user preferences and past requests, leading to more coherent and personalized conversations.

- Ability to handle complex requests: The new models are better at interpreting nuanced and multi-part requests, processing multiple commands within a single utterance.

- Better understanding of user intent: OpenAI's advancements allow voice assistants to better decipher the underlying intent behind user requests, even when phrased differently. This leads to more relevant and helpful responses.

OpenAI released new APIs and tools, including updated documentation and improved developer support, to help integrate these advancements into custom voice assistant projects.
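To make the idea of conversational memory concrete, here is a minimal sketch of one common approach: keep a bounded window of recent turns plus a small store of long-lived preferences, and assemble both into the context for the next request. The class and method names are hypothetical and do not correspond to any OpenAI API.

```python
from collections import deque


class ConversationMemory:
    """Keeps recent turns in a bounded window plus long-lived preferences."""

    def __init__(self, max_turns: int = 6) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self.turns = deque(maxlen=max_turns)
        self.preferences = {}

    def remember_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def set_preference(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def build_context(self) -> str:
        """Return preferences first, then the recent turns in order."""
        lines = [f"preference {key}: {value}"
                 for key, value in sorted(self.preferences.items())]
        lines += [f"{speaker}: {text}" for speaker, text in self.turns]
        return "\n".join(lines)


memory = ConversationMemory(max_turns=2)
memory.set_preference("units", "metric")
memory.remember_turn("user", "What is the weather today?")
memory.remember_turn("assistant", "It is 18 degrees and sunny.")
memory.remember_turn("user", "And tomorrow?")
context = memory.build_context()
```

With a window of two turns, the first question is dropped while the preference is kept, so the follow-up "And tomorrow?" is still interpreted with the stored units and the assistant's last answer in view.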

New Tools and APIs for Voice Assistant Development

OpenAI didn't just improve the underlying technology; they also made voice assistant development significantly easier.

Simplified Development Workflow

The event unveiled a suite of new tools and APIs designed to streamline the development process:

- Drag-and-drop interfaces: Visual development environments simplify the creation of complex voice interactions, making it accessible even to developers without extensive experience in NLP.

- Pre-built modules: Reusable components expedite development by providing ready-made solutions for common voice assistant tasks, such as natural language understanding and text-to-speech.

- Simplified code integration: Streamlined APIs and SDKs facilitate easy integration with existing applications and platforms, lowering the barrier to entry for developers.

These tools significantly reduce development time and resources, accelerating the pace of innovation in the voice assistant space.
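The appeal of reusable modules is easiest to see in code. The sketch below chains three stand-in stages (text normalization, intent detection, reply rendering) into a single callable. Every function here is a hypothetical placeholder written for illustration, not one of the modules announced at the event; a real assistant would swap in speech-to-text, language understanding, and text-to-speech components.

```python
from typing import Callable

Stage = Callable[[str], str]


def build_pipeline(*stages: Stage) -> Stage:
    """Chain stages so each one receives the previous stage's output."""
    def run(value: str) -> str:
        for stage in stages:
            value = stage(value)
        return value
    return run


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace so later stages see uniform input.
    return " ".join(text.lower().split())


def detect_intent(text: str) -> str:
    # Toy keyword matcher standing in for a language-understanding module.
    if "light" in text and "on" in text.split():
        return "lights_on"
    return "unknown"


def render_reply(intent: str) -> str:
    replies = {"lights_on": "Turning the lights on."}
    return replies.get(intent, "Sorry, I did not catch that.")


assistant = build_pipeline(normalize, detect_intent, render_reply)
reply = assistant("  Turn ON the Lights ")
```

Because each stage shares the same signature, a component can be replaced without touching the others, which is the property that makes pre-built modules practical.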

Customizable Voice Models and Personalities

OpenAI is empowering developers to create truly unique voice assistants:

- Options for different voice tones, accents, and speaking styles: Developers can now tailor the voice of their assistant to match their brand or target audience, offering greater personalization.

- Integration with custom TTS (Text-to-Speech) engines: This allows for even greater control over the voice assistant's auditory characteristics, ensuring a bespoke user experience.

This capability has profound implications for brand identity and user engagement, enabling companies to create more distinctive and memorable voice assistant experiences.

Addressing Ethical Considerations in Voice Assistant Creation

OpenAI emphasized the crucial role of ethical considerations in voice assistant development.

Bias Mitigation and Fairness

Addressing potential biases in voice recognition and language models is paramount:

- Techniques for detecting and mitigating bias: OpenAI showcased ongoing work to identify and eliminate biases in their models, ensuring fair and equitable access to voice technology for all users, regardless of background or accent.

- Promoting inclusivity in voice assistant design: The company committed to building diverse and representative datasets, improving the accuracy and fairness of voice assistants across different demographics.

OpenAI's commitment to responsible AI development is reflected in updated guidelines and best practices shared during the event.
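One simple way to detect this kind of bias is to compare transcription error rates across speaker groups and look at the gap between the best and worst served group. The sketch below assumes each evaluation sample has already been scored and carries a group label, a count of word errors, and a count of reference words. The group names and numbers are made up for illustration, and this is not a description of OpenAI's own method.

```python
from collections import defaultdict


def error_rate_by_group(samples):
    """Aggregate word errors per group and return each group's error rate.

    Each sample is a (group, word_errors, reference_words) tuple.
    """
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, word_errors, reference_words in samples:
        errors[group] += word_errors
        words[group] += reference_words
    return {group: errors[group] / words[group]
            for group in words if words[group] > 0}


def largest_gap(rates):
    """Difference between the worst and best group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation results for two accent groups.
samples = [
    ("accent_a", 2, 50),
    ("accent_a", 3, 50),
    ("accent_b", 12, 100),
]
rates = error_rate_by_group(samples)
gap = largest_gap(rates)
```

A large gap signals that one group is served noticeably worse and that the training or evaluation data for that group deserves a closer look.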

Privacy and Data Security

Protecting user privacy and data security is critical:

- Data encryption: Robust encryption methods safeguard user data throughout the lifecycle of voice assistant interactions.

- Anonymization techniques: Data anonymization methods protect user identity while still allowing for valuable data analysis to improve voice assistant performance.

- User consent mechanisms: Clear and transparent consent processes empower users to control how their data is collected and used.

OpenAI reiterated its commitment to compliance with relevant data privacy regulations, highlighting its dedication to building trustworthy and responsible voice technology.
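The consent and anonymization points above can be sketched with the Python standard library. The example gates analytics on user consent, replaces the user ID with a keyed hash, and masks email addresses and phone numbers in the transcript. Note the assumptions: a keyed hash is pseudonymization rather than full anonymization, the regular expressions are deliberately simple and will miss many formats, and all function names are hypothetical.

```python
import hashlib
import hmac
import re

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def redact_transcript(text: str) -> str:
    """Mask email addresses and phone-like digit runs in a transcript."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)


def prepare_for_analytics(user_id, transcript, consented, secret_key):
    """Return a privacy-reduced record, or None when consent is missing."""
    if not consented:
        return None
    return {
        "user": pseudonymize_user_id(user_id, secret_key),
        "transcript": redact_transcript(transcript),
    }


record = prepare_for_analytics(
    "user-42",
    "email me at ana@example.com or call +1 555-123-4567",
    consented=True,
    secret_key=b"replace-with-a-managed-secret",  # placeholder key
)
```

Checking consent before any processing keeps the default safe: without an explicit opt-in, nothing is stored.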

Conclusion

OpenAI's 2024 developer event significantly advanced the field of voice assistant creation, offering developers powerful new tools and addressing crucial ethical considerations. The advancements in NLP, simplified development workflows, and a focus on responsible AI development pave the way for a future where voice assistants are more accurate, personalized, and ethically sound. Ready to explore the possibilities of voice assistant creation? Dive into OpenAI's new resources and start building your next-generation voice-activated application today!

Featured Posts

-

Understanding Rotorua Gateway To New Zealands Indigenous Culture

May 12, 2025

Understanding Rotorua Gateway To New Zealands Indigenous Culture

May 12, 2025 -

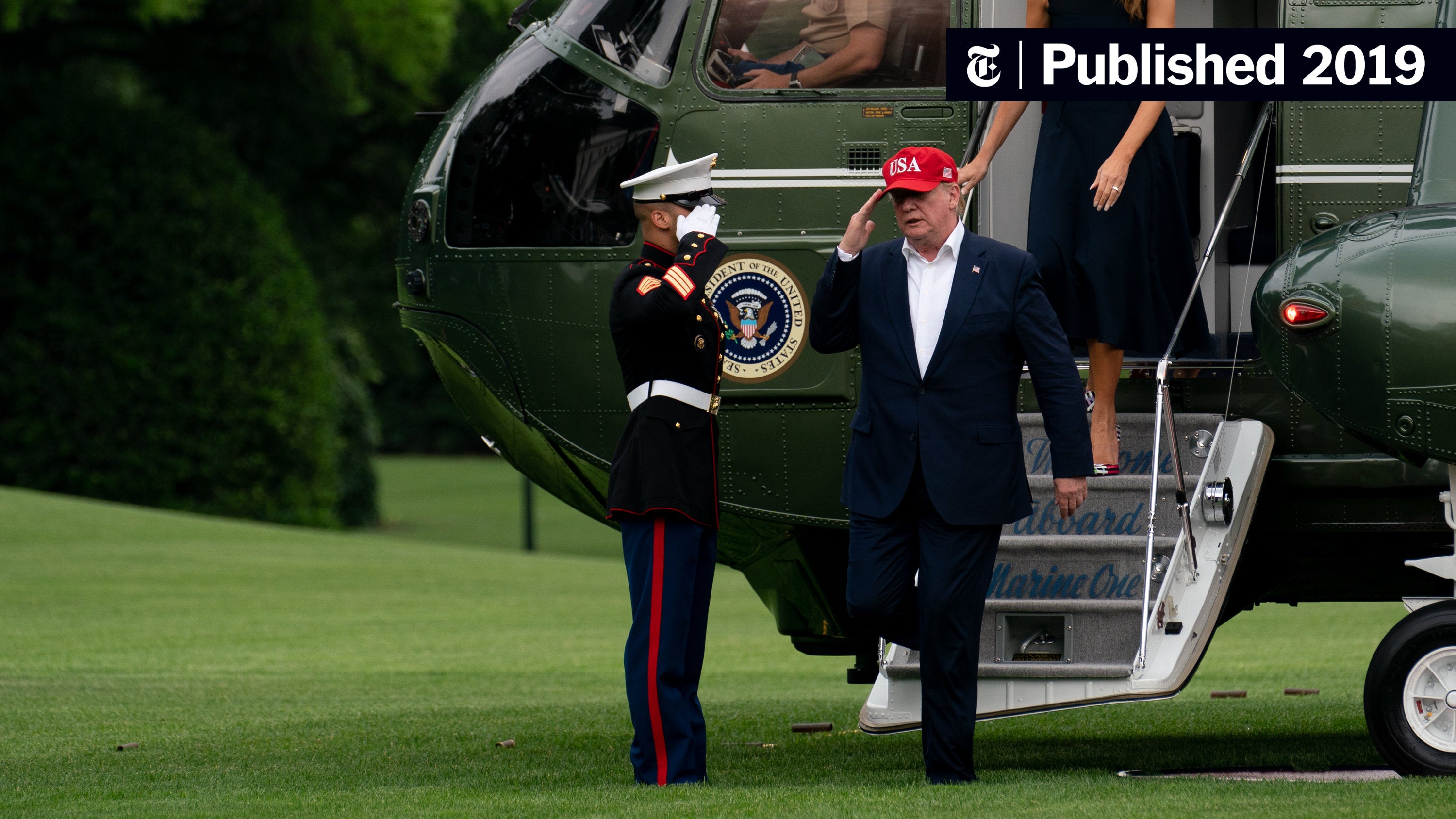

Trump Team Targets Tariff Cuts And Rare Earth Access In China Negotiations

May 12, 2025

Trump Team Targets Tariff Cuts And Rare Earth Access In China Negotiations

May 12, 2025 -

Adam Sandlers Massive Net Worth A Look At His Successful Comedy Career

May 12, 2025

Adam Sandlers Massive Net Worth A Look At His Successful Comedy Career

May 12, 2025 -

Rory Mc Ilroy And Shane Lowry Face Uphill Battle In Zurich Classic

May 12, 2025

Rory Mc Ilroy And Shane Lowry Face Uphill Battle In Zurich Classic

May 12, 2025 -

John Wick Chapter 5 Production News And Release Date Speculation

May 12, 2025

John Wick Chapter 5 Production News And Release Date Speculation

May 12, 2025

Latest Posts

-

La Dispute Entre Chantal Ladesou Et Ines Reg Retour Sur Leurs Clashs

May 12, 2025

La Dispute Entre Chantal Ladesou Et Ines Reg Retour Sur Leurs Clashs

May 12, 2025 -

The Chaplin Effect How Ipswich Town Achieves Victory

May 12, 2025

The Chaplin Effect How Ipswich Town Achieves Victory

May 12, 2025 -

Chantal Ladesou Critique Ouvertement Ines Reg Elle Aime Le Conflit

May 12, 2025

Chantal Ladesou Critique Ouvertement Ines Reg Elle Aime Le Conflit

May 12, 2025 -

Winning With Chaplin Insights Into Ipswich Towns Success

May 12, 2025

Winning With Chaplin Insights Into Ipswich Towns Success

May 12, 2025 -

Ou Chantal Ladesou Se Ressource Proximite Familiale Et Serenite

May 12, 2025

Ou Chantal Ladesou Se Ressource Proximite Familiale Et Serenite

May 12, 2025