Web Content And Google's AI: Training Data Despite Opt-Outs

Table of Contents

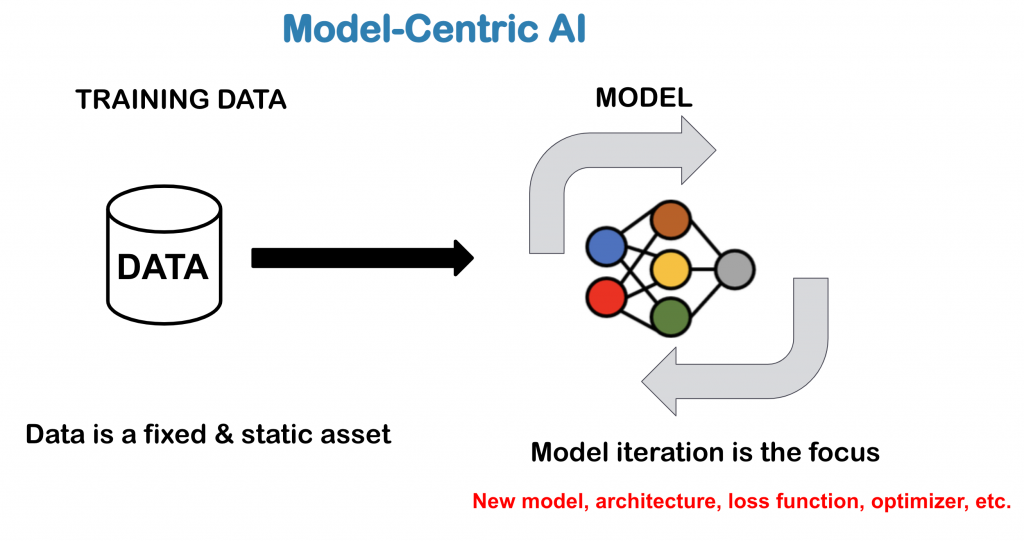

How Google's AI Crawls and Indexes Your Web Content

Google's AI capabilities depend on its ability to process enormous quantities of data. This data is gathered primarily through Googlebot, a web crawler that systematically explores the internet and indexes billions of web pages. Googlebot follows links, reads text, analyzes images, and extracts metadata, effectively building a massive digital library. This process does not serve search alone: the information collected also forms a crucial part of the training data for Google's diverse AI models, which power everything from search algorithms to image recognition and natural language processing.

- Googlebot's functions: Crawling, indexing, analyzing content structure, identifying keywords, extracting metadata.

- Scale of data collection: Googlebot crawls billions of pages daily, constantly updating its index.

- Types of content collected: Text, images, videos, audio, structured data (schema.org), and metadata (title tags, meta descriptions).
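To make the crawl-and-extract step concrete, here is a minimal Python sketch of what a crawler does with a single page: read the title and meta description, and collect links to visit next. It uses only the standard library's `html.parser`. The `PageScanner` class and the sample HTML are hypothetical illustrations; Googlebot's actual implementation is not public.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageScanner(HTMLParser):
    """Hypothetical helper: collects the title, meta description, and links of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.description = None
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag == "a" and attrs.get("href"):
            # Resolve relative links so a crawler could queue them for later visits.
            self.links.append(urljoin(self.base_url, attrs["href"]))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text inside <title> is kept as the page title.
        if self._in_title:
            self.title += data


html_doc = """<html><head><title>Example Post</title>
<meta name="description" content="A short summary."></head>
<body><a href="/about">About</a> <a href="https://other.example/">Elsewhere</a></body></html>"""

scanner = PageScanner("https://example.com/blog/post")
scanner.feed(html_doc)
print(scanner.title)        # Example Post
print(scanner.description)  # A short summary.
print(scanner.links)        # ['https://example.com/about', 'https://other.example/']
```

A real crawler repeats this for every queued link, which is how a single page leads to the rest of the web.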

This vast dataset, continuously refined and expanded, is the lifeblood of Google's AI. While users benefit from improved search results and AI-powered services, it is important to understand how this data is used and what unintended consequences may follow.

The Effectiveness (or Lack Thereof) of Opt-Out Mechanisms

Website owners often employ methods to limit web scraping, hoping to control how their content is used. These include:

- robots.txt: This file lets website owners tell crawlers which parts of their site not to crawl.

- Meta tags (noindex, nofollow): A noindex tag asks search engines not to index a page, and a nofollow tag asks them not to follow the links on it.
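The following minimal Python sketch shows how a crawler that chooses to comply might read these tags. It treats `<meta name="robots">` as applying to every crawler and a tag named after a specific crawler (here `googlebot`) as applying only to that crawler, and it follows Google's documented rule that `none` is shorthand for `noindex, nofollow`. The class and function names are hypothetical. Note that the crawler has to download the page before it can see the tag at all.

```python
from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Hypothetical helper: gathers directives from robots-style <meta> tags."""

    def __init__(self, crawler_name="googlebot"):
        super().__init__()
        self.crawler_name = crawler_name.lower()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        # "robots" applies to all crawlers; a crawler-specific name targets only that crawler.
        if name in ("robots", self.crawler_name):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def page_policy(html, crawler_name="googlebot"):
    """Return what a compliant crawler would allow itself to do with this page."""
    reader = RobotsMetaReader(crawler_name)
    reader.feed(html)
    blocked_all = "none" in reader.directives  # "none" means noindex + nofollow
    return {
        "may_index": not blocked_all and "noindex" not in reader.directives,
        "may_follow_links": not blocked_all and "nofollow" not in reader.directives,
    }


page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body>...</body></html>'
print(page_policy(page))  # {'may_index': False, 'may_follow_links': False}
```

The result is only a request to the crawler: the check runs on the crawler's side, after the content has already been fetched.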

However, these methods might not be entirely effective against sophisticated AI training data collection.

- Limitations of robots.txt: The file is advisory rather than enforced. It only governs bots that choose to honor it, and many data scrapers ignore it entirely.

- Circumvention techniques: Because neither robots.txt nor meta tags is technically enforced, sophisticated scrapers can simply ignore them and extract the data regardless of the stated restrictions.

- Difficulty in controlling data: Once your content is publicly accessible, controlling how it is used becomes very difficult, and scraped data is often reused without your consent.
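The point that robots.txt binds only compliant bots can be illustrated with Python's standard `urllib.robotparser` module. In this minimal sketch, the site pages, the `/members/` path, and the bot names are hypothetical. The only difference between the "polite" and the "rude" crawler is whether it bothers to call `can_fetch` before collecting a page; nothing on the server side enforces the rule.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that asks every crawler to stay out of /members/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /members/
"""

# Hypothetical site content, keyed by URL path.
PAGES = {
    "/blog/post": "public article",
    "/members/profile": "member contact details",
}


def crawl(user_agent, respect_robots):
    """Return the pages a crawler collects; honoring robots.txt is the crawler's choice."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    collected = {}
    for path, body in PAGES.items():
        url = "https://example.com" + path
        # A compliant crawler asks the parser first. Nothing on the server enforces this check.
        if respect_robots and not parser.can_fetch(user_agent, url):
            continue
        collected[path] = body
    return collected


print(sorted(crawl("PoliteBot", respect_robots=True)))   # ['/blog/post']
print(sorted(crawl("RudeBot", respect_robots=False)))    # ['/blog/post', '/members/profile']
```

The rude crawler ends up with the member page even though the robots.txt file is identical in both runs.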

Ethical and Privacy Implications of Using Web Content for AI Training

The use of web content for AI training raises significant ethical and privacy concerns:

- Ethical concerns: Using vast amounts of user data without explicit consent raises questions about fairness and transparency, and the lack of clear opt-out mechanisms is a major ethical red flag.

- Privacy violations: Personal information embedded within web content (e.g., contact details, user comments) could be inadvertently used for purposes beyond the original intent, leading to potential privacy breaches.

Specific challenges include:

- Data anonymization challenges: Completely anonymizing data scraped from the web is often impossible.

- Potential for bias amplification: AI models trained on biased data will likely perpetuate and amplify those biases.

- Legal frameworks: The legal landscape surrounding data usage for AI training is still evolving, lacking clear guidelines in many jurisdictions.

Strategies for Minimizing Your Contribution to Google's AI Training Data

While completely preventing your content from being used is difficult, you can take proactive steps to reduce the likelihood that it ends up in training data:

- Strengthening robots.txt: Write specific, comprehensive directives, including separate rules for individual crawlers, to restrict access to sensitive areas.

- Strategic use of noindex meta tags: Add these tags to pages you absolutely do not want indexed.

- Advanced security measures: Use protections such as rate limiting, bot detection, or login requirements for sensitive content to make data scraping more difficult.

- Exploring alternative hosting options: Consider hosting solutions that provide enhanced privacy and data protection features.
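A more specific robots.txt can give each crawler its own group of rules. Google documents a `Google-Extended` user-agent token that site owners can disallow to opt out of having their content used for some of Google's generative AI models; confirm in Google's current crawler documentation exactly what it covers, because it does not stop ordinary Googlebot crawling for Search. The Python sketch below assumes hypothetical `/private/` and `/drafts/` paths and checks how the groups resolve with the standard `urllib.robotparser` module. That parser applies the first matching rule in a group, while Google uses the most specific match, so the example avoids overlapping Allow and Disallow rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a separate group of rules per crawler.
ROBOTS_TXT = """\
# Opt out of use for Google's generative AI models (Google-Extended token)
User-agent: Google-Extended
Disallow: /

# Keep regular search crawling, but not for sensitive areas
User-agent: Googlebot
Disallow: /private/
Disallow: /drafts/

# Every other crawler
User-agent: *
Disallow: /private/
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://example.com/blog/post"),
    ("Googlebot", "https://example.com/private/notes.html"),
    ("Google-Extended", "https://example.com/blog/post"),
    ("SomeOtherBot", "https://example.com/drafts/idea.html"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:15} {verdict:8} {url}")
```

Here Googlebot can still fetch `/blog/post`, while the other three checks come back blocked. As with any robots.txt rule, this only affects crawlers that read and respect the file.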

Conclusion: Reclaiming Control Over Your Web Content and Google's AI

In summary, Google's AI training relies heavily on web content, and current opt-out mechanisms have limited effectiveness. The ethical and privacy implications are substantial. The strategies outlined above cannot guarantee that your content stays out of training data, but they can reduce your exposure. For every website owner, understanding how web content can end up in Google's AI training data despite opt-outs is the first step toward taking back control of it.

Featured Posts

-

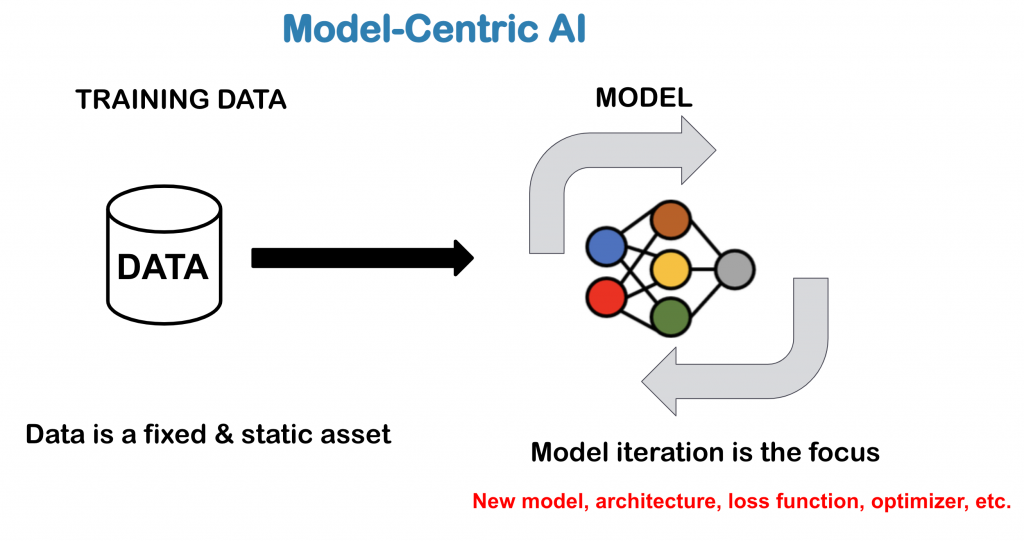

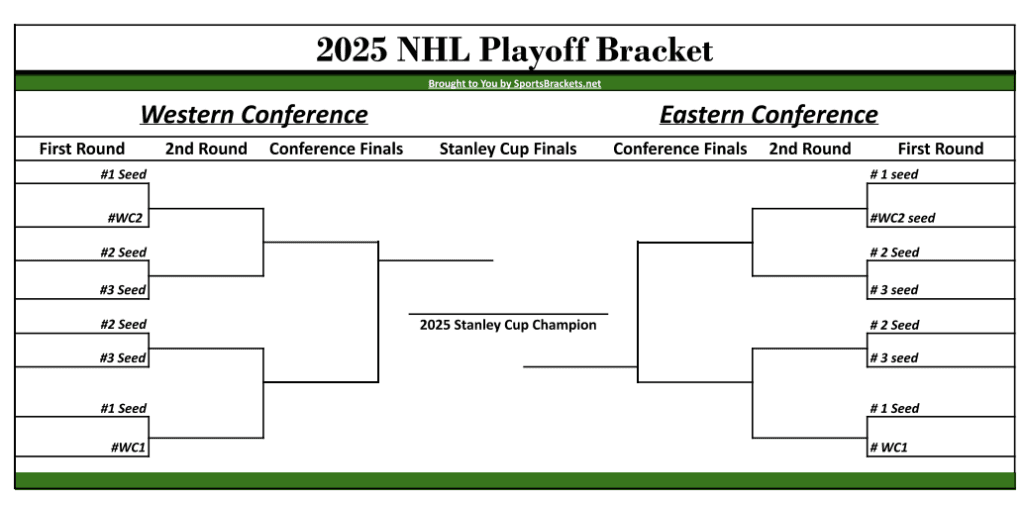

Charles Barkleys Nhl Playoff Predictions Oilers And Leafs Stand Out

May 05, 2025

Charles Barkleys Nhl Playoff Predictions Oilers And Leafs Stand Out

May 05, 2025 -

Ruth Buzzi Dead At 88 Remembering The Iconic Comedian And Laugh In Star

May 05, 2025

Ruth Buzzi Dead At 88 Remembering The Iconic Comedian And Laugh In Star

May 05, 2025 -

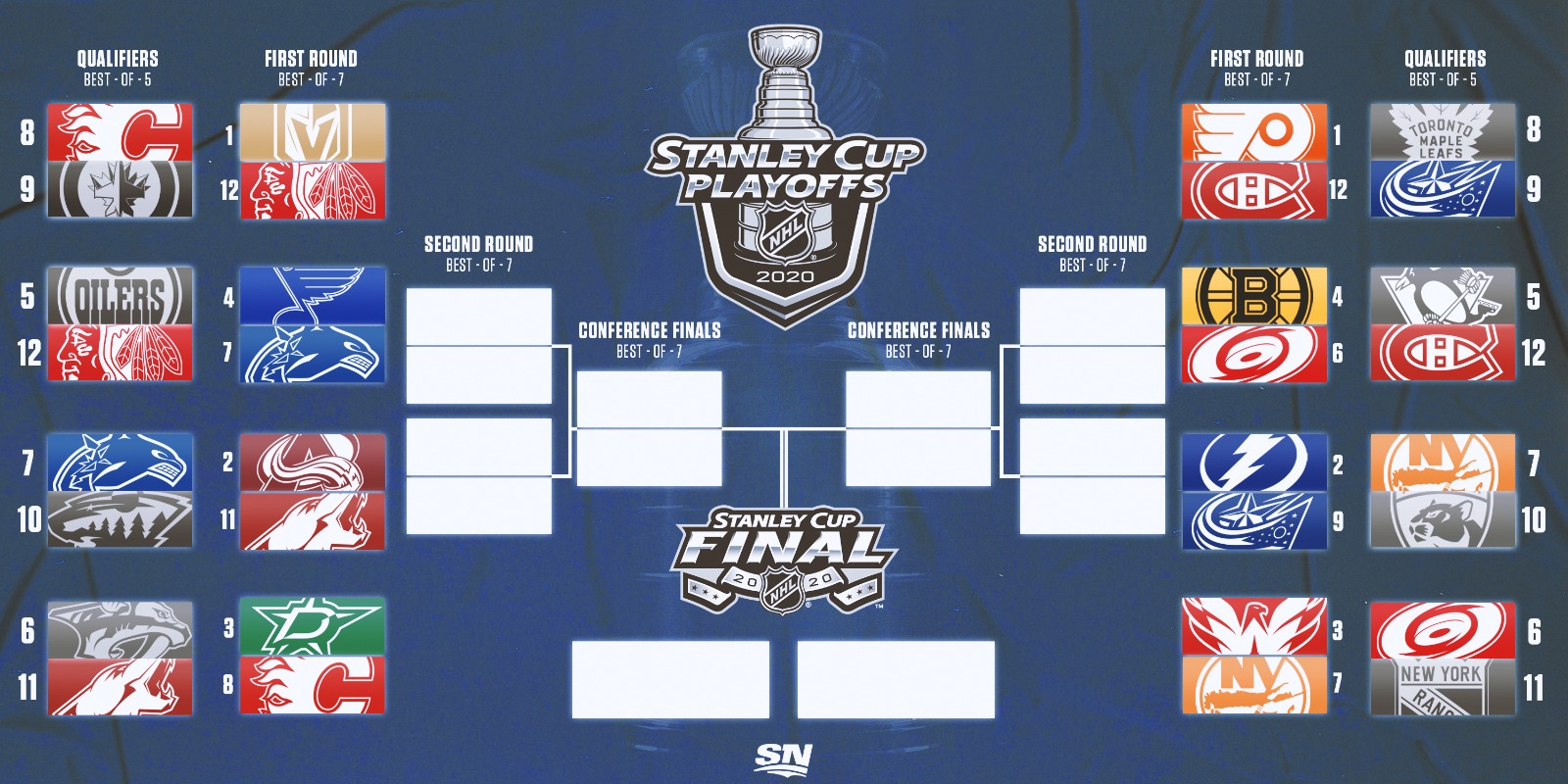

New Parent Max Verstappen Races Into Miami Grand Prix

May 05, 2025

New Parent Max Verstappen Races Into Miami Grand Prix

May 05, 2025 -

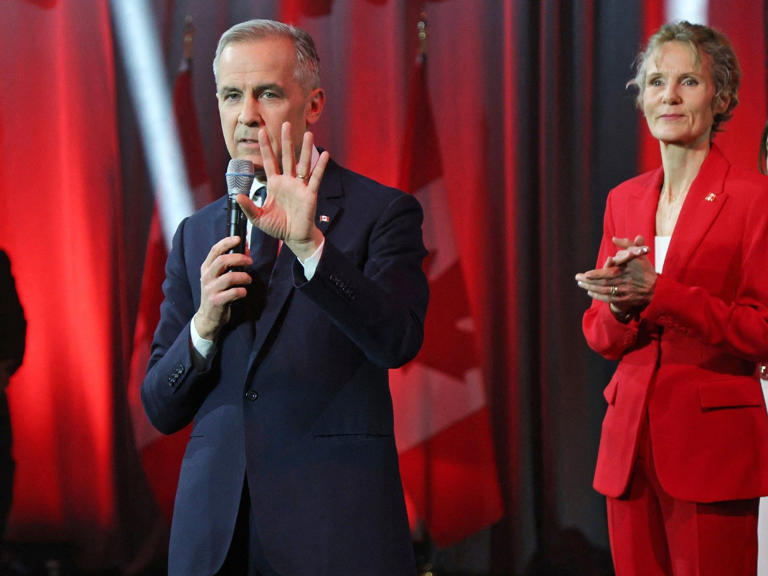

Uk Economy Carneys Bold Plan For Transformation

May 05, 2025

Uk Economy Carneys Bold Plan For Transformation

May 05, 2025 -

How Stefano Domenicalis Leadership Drives Formula 1s Expansion

May 05, 2025

How Stefano Domenicalis Leadership Drives Formula 1s Expansion

May 05, 2025

Latest Posts

-

Will The Oilers Rebound Against The Canadiens A Morning Coffee Preview

May 05, 2025

Will The Oilers Rebound Against The Canadiens A Morning Coffee Preview

May 05, 2025 -

Oilers Vs Canadiens Morning Coffee Predictions And Bounce Back Potential

May 05, 2025

Oilers Vs Canadiens Morning Coffee Predictions And Bounce Back Potential

May 05, 2025 -

Nhl Highlights Avalanche Defeat Panthers Despite Late Comeback

May 05, 2025

Nhl Highlights Avalanche Defeat Panthers Despite Late Comeback

May 05, 2025 -

Johnston And Rantanen Power Avalanche To Victory Over Panthers

May 05, 2025

Johnston And Rantanen Power Avalanche To Victory Over Panthers

May 05, 2025 -

First Round Nhl Playoffs Key Factors And Predictions

May 05, 2025

First Round Nhl Playoffs Key Factors And Predictions

May 05, 2025