AI Doesn't Really Learn: Understanding The Implications For Responsible Use

The Illusion of Learning: How AI Algorithms Function

The idea that AI "learns" the way humans do is a misconception. At its core, AI identifies patterns in data through statistical calculation, not through genuine comprehension. This distinction is critical for understanding its capabilities and limitations.

Statistical Pattern Recognition, Not Understanding

AI systems, even those utilizing sophisticated deep learning techniques, primarily perform statistical pattern recognition. They don't possess understanding or consciousness.

- Image recognition: AI identifies objects in images by recognizing patterns of pixels, not by "seeing" and understanding the object as a human would.

- Natural language processing: AI processes text by identifying statistical relationships between words and phrases, not by truly comprehending the meaning or context.

The crucial difference between correlation and causation is often overlooked. An AI model can pick up a correlation between two factors in its data without any representation of why they move together, for example when both are driven by a third, unmeasured factor. Conclusions drawn from such correlations can be inaccurate or misleading.
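To make the correlation-versus-causation point concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than real data: a hidden factor (`temperature`) drives two made-up variables (`ice_cream_sales` and `sunburn_cases`), which then correlate strongly even though neither causes the other. Once the hidden factor is accounted for, the correlation largely disappears.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(ys, xs):
    """Part of ys left over after a least-squares straight-line fit on xs."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return [y - (mean_y + slope * (x - mean_x)) for x, y in zip(xs, ys)]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical hidden factor that drives both observed variables.
temperature = [rng.uniform(10, 35) for _ in range(1000)]
ice_cream_sales = [2.0 * t + rng.gauss(0, 5) for t in temperature]
sunburn_cases = [0.5 * t + rng.gauss(0, 2) for t in temperature]

# Raw correlation: strong, although neither variable causes the other.
r_raw = pearson(ice_cream_sales, sunburn_cases)

# Correlation after removing what temperature explains in each variable.
r_partial = pearson(residuals(ice_cream_sales, temperature),
                    residuals(sunburn_cases, temperature))

print(f"raw correlation:             {r_raw:.2f}")
print(f"after controlling for temp.: {r_partial:.2f}")
```

A model trained only on the two observed variables would see the strong raw correlation and has no way to tell that it is produced by the third factor.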

The Role of Training Data

The performance of any AI system is heavily reliant on the quality and characteristics of its training data. This data acts as the foundation upon which the AI model builds its patterns.

- Bias in training data: If the training data reflects existing societal biases (e.g., gender, racial), the resulting AI system will likely perpetuate and may even amplify these biases. For example, facial recognition systems trained primarily on images of white faces have shown higher error rates on individuals with darker skin tones.

- Data diversity and representativeness: High-quality training data requires diversity and representativeness to ensure the AI system can generalize its learning to a wider population and avoid biased outcomes. Insufficient data diversity can lead to significant inaccuracies and unfairness.
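One simple way to surface the effect described above is to report accuracy per group instead of a single overall number. The sketch below is illustrative only: the function name `accuracy_by_group`, the group labels, and the evaluation records are all made up for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for an iterable of (group, label, prediction)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, label, prediction in records:
        total[group] += 1
        correct[group] += int(label == prediction)
    return {group: correct[group] / total[group] for group in total}


# Made-up evaluation records: (group, true label, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

overall = sum(label == pred for _, label, pred in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(f"{group}: {acc:.2f}")
```

In this toy data the overall figure looks acceptable while one group fares much worse, which is the kind of gap an aggregate metric can hide.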

Limitations of Current AI: The "Black Box" Problem

Many complex AI models, especially deep learning neural networks, operate as "black boxes." Their internal decision-making processes are often opaque and difficult to understand.

- Explainable AI (XAI): The field of XAI aims to develop techniques that make the inner workings of AI systems more transparent and interpretable, increasing trust and accountability.

- Challenges in interpreting and debugging: The complexity of these systems makes it challenging to identify and correct errors or biases within the model. Because a model's behavior is encoded in learned parameters rather than in readable program logic, debugging AI can be significantly more complex than debugging traditional software.

Ethical and Societal Implications of Misunderstanding AI "Learning"

The misconception that AI "learns" like humans can lead to several serious ethical and societal challenges.

Bias and Discrimination

Biases present in training data tend to carry over into the outputs of AI systems unless they are actively corrected. This bias can have far-reaching consequences.

- Loan applications: AI systems used to assess loan applications might discriminate against certain demographic groups if the training data reflects historical lending biases.

- Hiring processes: AI-powered recruitment tools might inadvertently discriminate against certain candidates due to biases in the data used to train them.

Mitigating bias requires careful curation of training data, algorithmic fairness techniques, and ongoing monitoring of AI system outputs.
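As a rough illustration of what ongoing monitoring of outputs can look like, the following sketch compares approval rates between groups for a hypothetical loan model. The decisions are invented, and the two metrics shown (the difference and the ratio of selection rates) are only two of many fairness measures; which one is appropriate depends on the application.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return {group: approval rate} for an iterable of (group, approved)."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in decisions:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}


def parity_difference(rates):
    """Gap between the highest and lowest group approval rates (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1 means parity)."""
    return min(rates.values()) / max(rates.values())


# Invented model decisions: 6 of 10 approved in group_a, 3 of 10 in group_b.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
print("approval rates:", rates)
print(f"parity difference: {parity_difference(rates):.2f}")
print(f"impact ratio:      {impact_ratio(rates):.2f}")
```

A common rule of thumb treats an impact ratio below 0.8 as a signal to investigate further; it is a screening heuristic, not proof of discrimination or of its absence.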

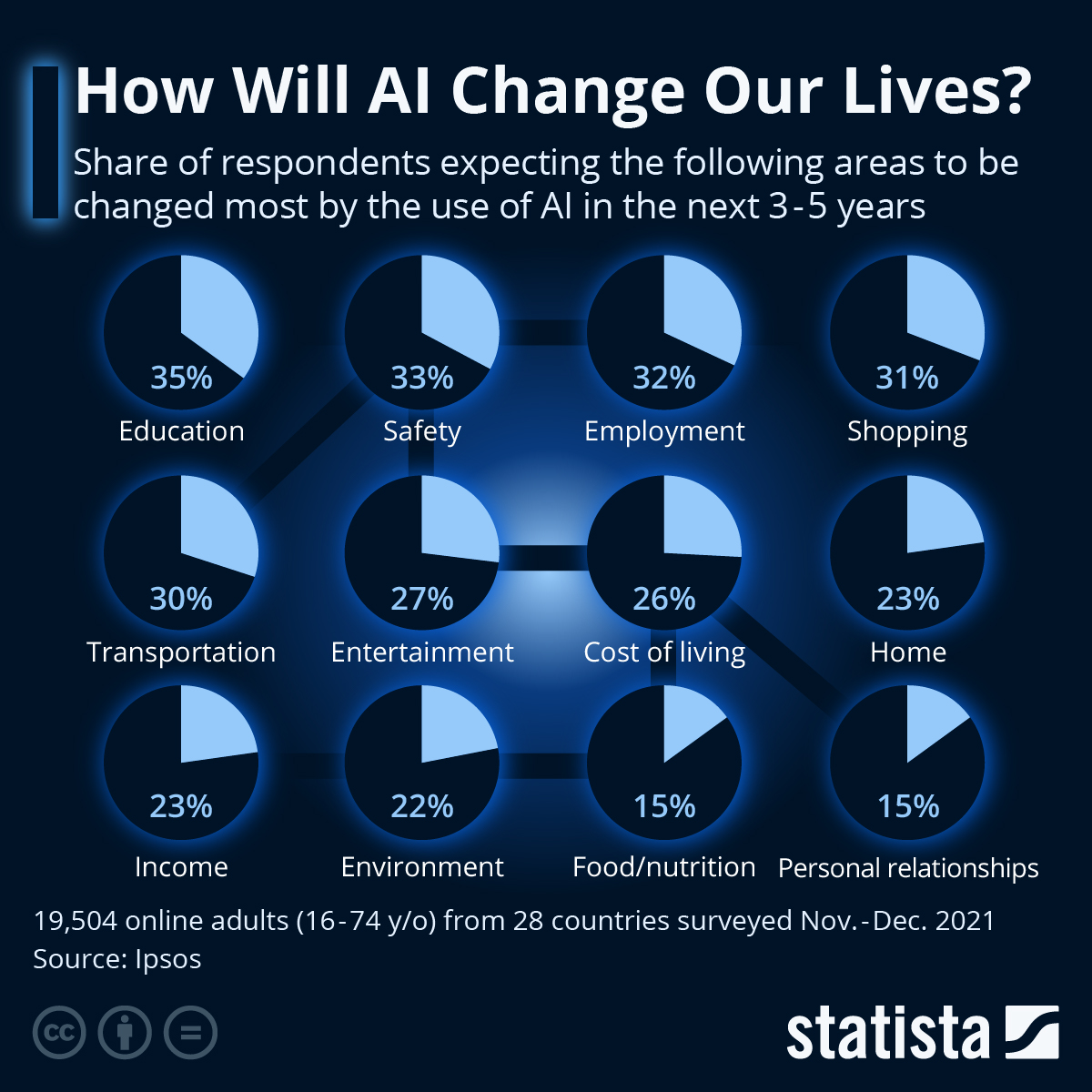

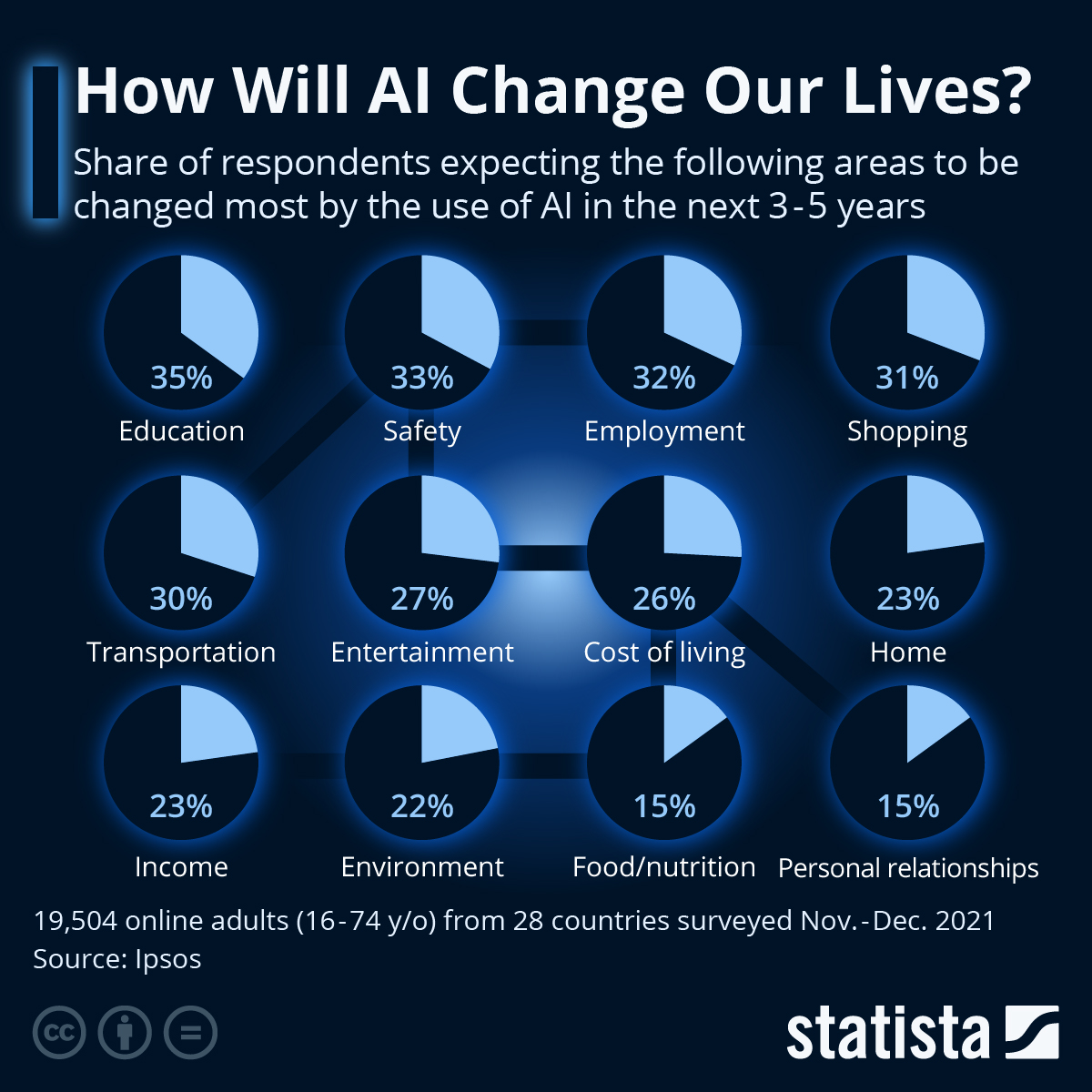

Job Displacement and Economic Inequality

AI-driven automation has the potential to displace workers in various industries, exacerbating existing economic inequalities.

- Manufacturing and transportation: Automation is already impacting jobs in these sectors, leading to job losses and requiring workforce adaptation.

- Customer service: Chatbots and other AI-powered systems are increasingly replacing human customer service representatives.

Addressing this challenge necessitates proactive reskilling and upskilling initiatives to equip workers with the skills needed for the changing job market.

Privacy and Security Concerns

The development and deployment of AI systems often involve the collection and use of vast amounts of personal data, raising significant privacy and security concerns.

- Data privacy regulations: Regulations such as GDPR and CCPA aim to protect individuals' data privacy, but adapting these regulations to the unique challenges posed by AI remains an ongoing process.

- Security vulnerabilities: AI systems themselves can be vulnerable to attacks, potentially leading to data breaches or malicious manipulation of their outputs. Robust cybersecurity measures are essential.

Promoting Responsible AI Development and Deployment

Addressing the ethical and societal implications of AI requires a multi-faceted approach focusing on responsible development and deployment.

Transparency and Explainability

Creating transparent and explainable AI systems is paramount to building trust and ensuring accountability.

- Model interpretability techniques: Developing methods that allow us to understand how an AI model arrives at its conclusions is crucial for identifying and correcting biases.

- Open-source AI development: Promoting open-source development can contribute to greater transparency and scrutiny of AI systems.
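One widely used model-agnostic interpretability technique is permutation importance: shuffle one input feature and measure how much the model's accuracy drops. The sketch below is a toy version under stated assumptions. `black_box_model` is a hypothetical stand-in for an opaque model, and the features (`income`, `shoe_size`) and data are invented.

```python
import random


def black_box_model(row):
    """Hypothetical opaque model; internally it only uses income (index 0)."""
    income, shoe_size = row
    return 1 if income > 50 else 0


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature_index, rng, repeats=20):
    """Average accuracy drop when one feature column is randomly shuffled."""
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature_index] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        shuffled = [r[:feature_index] + (v,) + r[feature_index + 1:]
                    for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


rng = random.Random(0)  # fixed seed so the run is repeatable

# Invented data: (income, shoe_size) pairs with labels that depend on income.
rows = [(rng.uniform(0, 100), rng.uniform(35, 48)) for _ in range(500)]
labels = [1 if income > 50 else 0 for income, _ in rows]

importance_income = permutation_importance(black_box_model, rows, labels, 0, rng)
importance_shoe = permutation_importance(black_box_model, rows, labels, 1, rng)

print(f"importance of income:    {importance_income:.2f}")
print(f"importance of shoe size: {importance_shoe:.2f}")
```

Without opening the model, the drop in accuracy reveals which input it actually relies on. If a sensitive attribute or an obvious proxy for one showed high importance, that would be a prompt to look for bias.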

Human Oversight and Control

Maintaining human oversight and control over AI systems is crucial to prevent unintended consequences and ensure ethical use.

- Human-in-the-loop systems: Integrating human oversight into the decision-making process of AI systems can mitigate the risks of bias and errors.

- Ethical guidelines and regulations: Developing robust ethical guidelines and regulations for the design, development, and deployment of AI is essential.
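A human-in-the-loop design can be as simple as automating only the cases where the model is confident and routing everything else to a person. The following is a minimal sketch of that idea; the `Decision` type, the `route` function, and the threshold values are hypothetical and would need to be chosen for the specific application.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str     # "approve", "reject", or "needs_review"
    decided_by: str  # "model" or "human"


def route(score, low=0.2, high=0.8):
    """Automate only confident cases; send the uncertain middle to a human.

    `score` is assumed to be the model's estimated probability of approval.
    """
    if score >= high:
        return Decision("approve", "model")
    if score <= low:
        return Decision("reject", "model")
    return Decision("needs_review", "human")


for score in (0.95, 0.50, 0.10):
    print(score, route(score))
```

Narrowing the band between `low` and `high` automates more decisions, while widening it sends more cases to human reviewers; that trade-off is a policy choice, not a technical one.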

Education and Public Awareness

Increased education and public awareness are vital for promoting responsible AI use.

- AI literacy programs: Educating the public about AI's capabilities and limitations is essential to foster informed discussions and responsible adoption.

- Promoting critical thinking about AI: Encouraging critical thinking and responsible engagement with AI technologies is crucial to avoid the pitfalls of hype and misinformation.

Conclusion

Understanding that AI doesn't truly "learn" helps us develop and use artificial intelligence responsibly. Its reliance on statistical pattern recognition, the impact of biased training data, and the "black box" problem all call for a cautious and ethical approach. Responsible development and deployment require constant vigilance and a commitment to addressing these limitations, so that recognizing that "AI doesn't really learn" translates into responsible AI practices that serve the common good.
