AI Therapy And The Erosion Of Privacy In A Police State

The Allure of AI in Mental Healthcare

The promise of AI in mental healthcare is undeniably appealing. Its proponents point to several key advantages:

Efficiency and Accessibility:

- Wider Reach: AI therapy platforms can reach individuals in remote areas or those with limited access to traditional mental health services, helping to overcome geographical and socioeconomic barriers. This is particularly significant in underserved communities, which often lack enough mental health professionals.

- 24/7 Availability: Unlike human therapists, AI-powered systems offer immediate support, anytime, anywhere. This constant availability can be crucial during mental health crises.

- Personalized Treatment: AI algorithms can analyze vast datasets to create treatment plans tailored to individual needs and preferences, potentially leading to more effective outcomes. The ability to adapt treatment in real time based on patient responses is a significant advantage.

However, these advantages come at a cost. The very features that make AI therapy so attractive also create vulnerabilities that could be exploited in the wrong hands.

The Surveillance State and AI Therapy Data

AI therapy platforms collect extensive personal data, far beyond what a traditional therapist might gather. This includes highly sensitive information:

Data Collection and Storage:

- Conversation Transcripts: Interactions between the user and the AI are typically recorded, creating a detailed record of their thoughts, feelings, and experiences.

- Emotional Biomarkers: Voice analysis and facial expression analysis can track subtle emotional responses, providing insights into a user's mental state that could be misinterpreted or misused.

- Personal Details and Medical History: Users often provide extensive personal information, including medical history, lifestyle details, and relationships, creating a rich profile ripe for exploitation.

This data is stored digitally, making it vulnerable to hacking, data breaches, and unauthorized access by both internal and external actors. In the hands of a repressive government, this trove of personal information becomes a potent weapon.

Potential for Abuse in a Police State

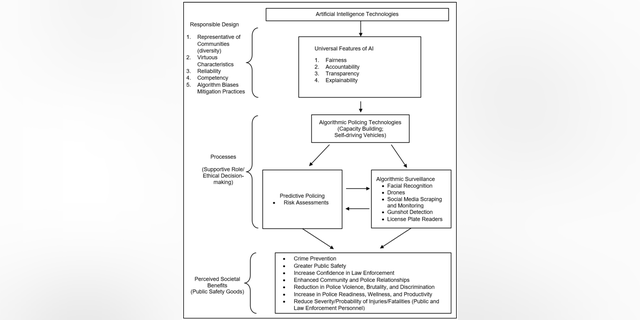

The potential for abuse of AI therapy data in a police state is deeply concerning. Government agencies could use this data for surveillance and repression in several ways:

Weaponizing Mental Health Data:

- Identifying Dissidents: Emotional patterns revealed during therapy sessions could be used to identify individuals expressing dissent or discontent with the regime.

- Predictive Policing: AI algorithms, potentially biased, could be used to predict and preemptively target individuals deemed “at risk” based on their mental health data, leading to arbitrary arrests and detentions.

- Intimidation and Control: Sensitive mental health information could be used to blackmail or intimidate individuals, suppressing dissent and maintaining control.

Algorithmic Bias and Discrimination

AI algorithms are not neutral; they inherit the biases present in the data they are trained on. This can lead to unfair targeting of marginalized groups:

Unfair Targeting of Marginalized Groups:

- Biased Training Data: If the data used to train AI algorithms reflects societal prejudices, the resulting systems are likely to perpetuate, and can amplify, those biases.

- Lack of Diversity: A lack of diversity in AI development teams can lead to blind spots and unintended consequences, resulting in discriminatory outcomes.

- Disproportionate Impact: Marginalized communities, already facing systemic disadvantages, could be disproportionately targeted and profiled by biased AI systems.

The Need for Robust Data Protection and Regulation

To mitigate the risks outlined above, we urgently need robust data protection laws and ethical guidelines:

Protecting Digital Rights and Privacy:

- Data Encryption and Anonymization: Implementing strong encryption and anonymization techniques is crucial to protect the confidentiality of sensitive mental health data.

- Independent Audits: Regular independent audits of AI therapy platforms are necessary to ensure compliance with data protection regulations and ethical guidelines.

- Strict Regulations on Data Sharing and Access: Clear legal limits are needed on who may share or access this data, restricting access to authorized personnel under narrowly defined conditions.

- User Consent and Control: Users must give informed consent and retain control over their data, including the right to access, correct, and delete their information.

International collaboration and advocacy for digital rights are essential to navigate the complex ethical challenges posed by AI therapy.

Conclusion:

The potential benefits of AI therapy are real, but its misuse in a police state presents a grave threat to privacy and human rights. The collection and analysis of sensitive mental health data create vulnerabilities that could be exploited to suppress dissent, target marginalized communities, and maintain authoritarian control. We must advocate for strong data protection laws, greater transparency in AI development, and clear ethical guidelines to ensure that AI-powered therapy remains a tool for healing, not repression. Failing to do so risks a dystopian police state in which even our innermost thoughts are subject to surveillance.
