Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?

The Role of Algorithmic Recommendation Systems

Algorithmic recommendation systems are designed to personalize what each user sees, typically by optimizing for engagement. Critics argue that this design can inadvertently create breeding grounds for extremist ideologies and so contribute to the problem of algorithmic radicalization.

Echo Chambers and Filter Bubbles

Algorithms often prioritize content that aligns with a user's past behavior, creating echo chambers and filter bubbles. This means users are primarily exposed to information confirming their existing beliefs, even if those beliefs are extremist.

- Examples: YouTube's recommendation algorithm has been criticized for pushing users down rabbit holes of increasingly extreme content. Similarly, social media newsfeeds often amplify biased or inflammatory material.

- Lack of Content Moderation: Insufficient content moderation allows extremist content to flourish, further reinforcing radical views within these echo chambers.

- Reinforcement of Biases: Algorithms can inadvertently reinforce existing biases, making users more susceptible to extremist propaganda and conspiracy theories.
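
The feedback loop described above can be illustrated with a deliberately simplified sketch. It is not how any real platform's recommender works: the one-dimensional "viewpoint" axis, the `recommend` and `simulate` functions, and the assumption that the user clicks everything shown are all hypothetical, chosen only to show how recommending "more of the same" narrows exposure.

```python
def recommend(catalog, history, k=5):
    """Return the k catalog items closest to the average of the user's history."""
    profile = sum(history) / len(history)
    return sorted(catalog, key=lambda item: abs(item - profile))[:k]


def simulate(catalog, first_click, rounds=10, k=5):
    """Assume the user clicks every recommendation; record what they were shown."""
    history = [first_click]
    spreads = []
    for _ in range(rounds):
        shown = recommend(catalog, history, k)
        spreads.append(max(shown) - min(shown))  # width of the band shown this round
        history.extend(shown)                    # clicks feed back into the profile
    return history, spreads


# Hypothetical catalog: 101 items spread evenly along a 0.00 to 1.00 viewpoint axis.
catalog = [i / 100 for i in range(101)]
history, spreads = simulate(catalog, first_click=0.8)

print(f"catalog spans   {min(catalog):.2f} to {max(catalog):.2f}")
print(f"user was shown  {min(history):.2f} to {max(history):.2f}")
```

In this toy run the catalog covers the whole axis, but the user only ever sees items between 0.78 and 0.82, because each round's recommendations are drawn from the neighborhood of what was already clicked. Real systems use far richer signals, so this is only an intuition for the echo-chamber effect, not a model of it.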

The Spread of Misinformation and Disinformation

Algorithms dramatically accelerate the spread of misinformation and disinformation related to extremist ideologies. This rapid dissemination can quickly radicalize vulnerable individuals.

- Examples: Fake news articles, conspiracy theories, and propaganda promoting white supremacy, anti-Semitism, and other extremist ideologies circulate readily through algorithmic amplification.

- Difficulty in Detection and Removal: The sheer volume of content and the speed at which it spreads make it incredibly challenging for tech companies to effectively detect and remove harmful material.

- Impact on Vulnerable Individuals: Individuals already experiencing feelings of isolation, anger, or alienation are particularly vulnerable to the manipulative tactics employed in extremist online communities.

The Legal and Ethical Responsibilities of Tech Companies

The question of tech company responsibility in algorithmic radicalization sparks intense debate, particularly concerning legal frameworks and ethical obligations.

Section 230 and its Limitations

Section 230 of the Communications Decency Act gives tech companies in the US significant legal protection from liability for user-generated content; some other jurisdictions have their own intermediary-liability rules. Whether that protection extends to a platform's own algorithmic recommendations, and therefore to algorithmic radicalization, is hotly contested.

- Arguments for Reform: Critics argue that Section 230 shields tech companies from accountability when their algorithms contribute to the spread of harmful content, enabling algorithmic radicalization.

- Arguments Against Reform: Proponents of Section 230 argue that altering it could stifle free speech and innovation, and that exposing platforms to liability ignores how difficult content moderation is at scale.

- Challenges of Content Moderation at Scale: The sheer volume of content uploaded daily makes comprehensive human moderation practically impossible, highlighting the limitations of current systems.

Ethical Obligations Beyond Legal Requirements

Beyond legal requirements, tech companies have a strong ethical duty of care towards their users. This includes actively working to prevent the harm caused by algorithmic radicalization.

- Arguments for Proactive Measures: Tech companies should proactively design algorithms that minimize the spread of harmful content and prioritize user safety.

- Importance of Ethical Algorithm Design and Responsible AI: Developing ethical guidelines for algorithm design and implementing responsible AI practices are crucial for mitigating the risks of algorithmic radicalization.

- Role of Independent Oversight Bodies: Independent oversight bodies could provide accountability and transparency, ensuring tech companies adhere to ethical standards in algorithm design and content moderation.

The Challenges of Moderation and Prevention

Effectively moderating content and preventing online radicalization presents immense difficulties for tech companies.

Scale and Speed of Online Radicalization

The scale and speed at which extremist content is created and shared online overwhelm current moderation systems.

- Limitations of Human Moderation: Manually reviewing every piece of content is simply not feasible.

- Challenges of AI-Powered Moderation: AI moderation systems struggle to accurately identify subtle forms of extremist content, leading to both false positives and false negatives.

- Constant Evolution of Extremist Tactics: Extremist groups constantly adapt their tactics to circumvent content moderation efforts, creating an ongoing "arms race".
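
The trade-off between false positives and false negatives mentioned above can be made concrete with a small sketch. The scores and labels below are made up for illustration, and `confusion_counts` is a hypothetical helper, not part of any real moderation system; the only assumption is that a classifier outputs a score between 0 and 1 and content is flagged when the score reaches a chosen threshold.

```python
def confusion_counts(scores, labels, threshold):
    """Count false positives and false negatives when flagging scores >= threshold."""
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return false_pos, false_neg


# Made-up classifier scores and ground-truth labels (True = violates policy).
scores = [0.05, 0.20, 0.35, 0.45, 0.55, 0.60, 0.70, 0.85, 0.90, 0.95]
labels = [False, False, True, False, False, True, False, True, True, True]

for threshold in (0.3, 0.5, 0.8):
    fp, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

On this toy data, a low threshold of 0.3 wrongly flags three legitimate posts but misses nothing, while a high threshold of 0.8 flags no legitimate posts but misses two violating ones. No threshold removes both kinds of error, which is why over-moderation and under-moderation have to be weighed against each other.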

Protecting Free Speech vs. Preventing Harm

Balancing the protection of free speech with the prevention of harm is a delicate and complex challenge.

- Importance of Nuanced Approaches to Content Moderation: Tech companies need nuanced approaches that distinguish between legitimate expression and harmful content promoting violence.

- Balancing Competing Values: Finding the right balance between these competing values requires careful consideration of ethical principles, legal frameworks, and the potential consequences of both over- and under-moderation.

- Potential Solutions: Improved algorithms, user education, stronger community standards, and collaboration between tech companies, law enforcement, and researchers are essential for effective content moderation.

Conclusion

The question of whether tech companies are responsible when algorithms radicalize mass shooters is multifaceted and deeply concerning. The complexities of algorithmic recommendation systems, the limitations of legal frameworks like Section 230, and the immense challenges of content moderation at scale all make solutions hard to find. However, the ethical imperative for tech companies to prioritize user safety and actively reduce the risk of algorithmic radicalization remains. Continued discussion, policy reform, and increased accountability are vital to reversing this dangerous trend. Let's demand better from tech companies and work towards a safer online environment by addressing algorithmic radicalization head-on.

Featured Posts

-

Is Banksy A Woman Debunking The Conspiracy

May 31, 2025

Is Banksy A Woman Debunking The Conspiracy

May 31, 2025 -

Unutulmaz Bir Ask Hikayesi Guelsen Bubikoglu Ve Tuerker Inanoglu

May 31, 2025

Unutulmaz Bir Ask Hikayesi Guelsen Bubikoglu Ve Tuerker Inanoglu

May 31, 2025 -

Delaying Ecb Rate Cuts Economists Sound The Alarm

May 31, 2025

Delaying Ecb Rate Cuts Economists Sound The Alarm

May 31, 2025 -

Munguia Faces Doping Scandal Denial After Positive Test

May 31, 2025

Munguia Faces Doping Scandal Denial After Positive Test

May 31, 2025 -

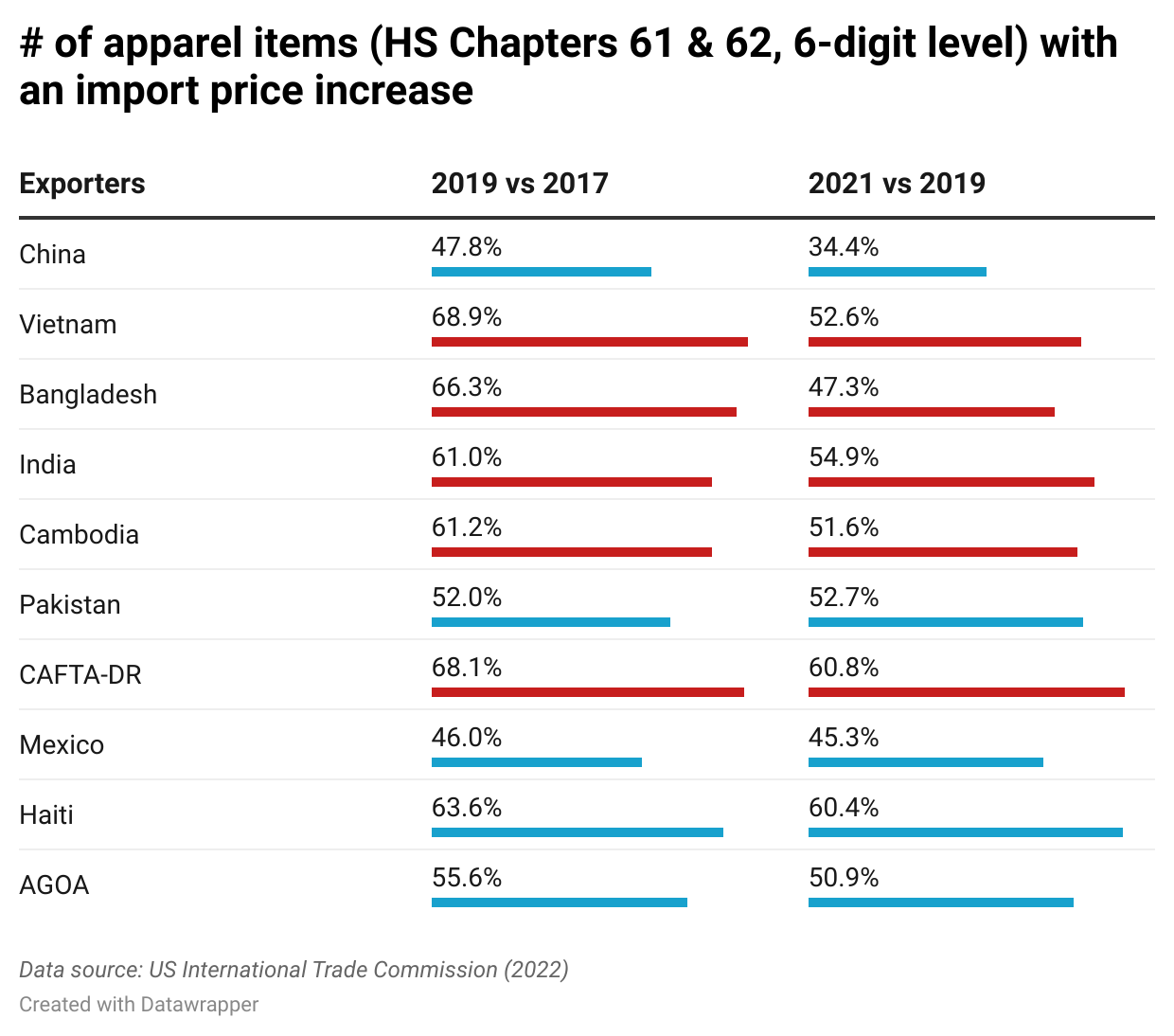

Tariff Truce Sustaining Us China Trade Across The Pacific

May 31, 2025

Tariff Truce Sustaining Us China Trade Across The Pacific

May 31, 2025