Scarlett Johansson On AI: Lack Of Consent And The Future Of Voice Cloning Technology

Scarlett Johansson's Stance on AI Voice Cloning and its Exploitation

Scarlett Johansson, like many other celebrities, has seen her voice caught up in the misuse of AI voice cloning. Her voice, a recognizable and valuable asset, has reportedly been replicated and used in various contexts without her consent. Such unauthorized use is a serious breach of privacy and raises ethical questions about who owns and controls a person's voice.

- Specific instances of unauthorized voice cloning involving Johansson: Not every instance is publicly documented, in part for legal reasons, but unauthorized celebrity voice cloning is widespread and the tools for it are readily available. Examples circulate online, though many are taken down once discovered.

- Johansson's advocacy for stronger regulations: While Johansson hasn't publicly launched a major campaign, her comments highlight a growing need for stricter regulations within the industry. Her implicit advocacy reflects the shared concerns of many celebrities grappling with this emerging technology.

- Impact on her career and public image: The unauthorized use of her voice could damage her brand reputation, erode public trust, and lead to significant financial losses. Malicious deepfakes compound this risk.

The Ethical Implications of Voice Cloning without Consent

The ethical concerns extend far beyond celebrity voices. The ease with which AI voice cloning technology can be used raises serious questions about:

- Privacy violation: The unauthorized recording and replication of someone's voice is a profound invasion of privacy, potentially leading to identity theft and reputational damage.

- Identity theft: Criminals could use cloned voices for financial scams, impersonating individuals to access accounts or obtain sensitive information.

- Fraud and deepfakes: Deepfake audio, generated using AI voice cloning, can be used to create convincing fake conversations, spread misinformation, and damage reputations.

- Examples of potential misuse: Imagine a deepfake audio recording used to falsely implicate someone in a crime, or a cloned voice used to authorize fraudulent financial transactions. Both scenarios are deeply concerning.

- Lack of legal frameworks: Current legal systems are struggling to keep pace with these rapid technological advancements, leaving individuals vulnerable to exploitation.

- Difficulty in detecting deepfakes: The sophistication of AI voice cloning makes detecting deepfakes incredibly challenging, requiring specialized software and expertise.

Technological Advancements and the Growing Need for Regulation

The rapid progress in AI voice cloning technology is alarming. Affordable and user-friendly software is increasingly accessible, lowering the barrier to entry for malicious actors.

- Readily available voice cloning software: Several software programs and online services offer voice cloning capabilities, some requiring minimal technical expertise.

- Current legislation (or lack thereof): Many jurisdictions lack specific legislation addressing the ethical and legal implications of AI voice cloning. Existing laws struggle to adequately address the unique challenges posed by this technology.

- Suggestions for improved regulations: Stricter regulations are needed, encompassing consent requirements, robust verification methods, and clear legal frameworks to hold perpetrators accountable. These could include digital watermarks that mark voice recordings as authentic.

The Future of AI Voice Cloning: Striking a Balance Between Innovation and Ethical Considerations

The future of AI voice cloning hinges on striking a balance between technological innovation and ethical considerations. We need to focus on responsible development and implementation.

- Technological solutions for detecting deepfakes: Research into robust deepfake detection technology is crucial, focusing on identifying subtle inconsistencies and anomalies in cloned audio.

- Industry self-regulation and ethical guidelines: Industry bodies and developers must establish and enforce ethical guidelines for the development and use of AI voice cloning technology.

- Public awareness and education: Raising public awareness about the risks associated with AI voice cloning is essential in promoting responsible use and preventing malicious exploitation.

Conclusion: The Importance of Consent in the Age of AI Voice Cloning – Protecting Our Voices

Scarlett Johansson's experience underscores a crucial point: the unauthorized use of AI voice cloning technology is a serious ethical and legal issue. The potential for misuse, from fraud and identity theft to the spread of misinformation, calls for prompt action. Regulatory frameworks should prioritize consent, transparency, and accountability, and they need to be backed by detection technology, industry self-regulation, and public education. Responsible development of AI voice cloning starts with consent; that is how we protect our voices in the digital age.

Featured Posts

-

New York Islanders Secure No 1 Nhl Draft Pick

May 13, 2025

New York Islanders Secure No 1 Nhl Draft Pick

May 13, 2025 -

Gaza Hostage Situation A Nightmare For Families

May 13, 2025

Gaza Hostage Situation A Nightmare For Families

May 13, 2025 -

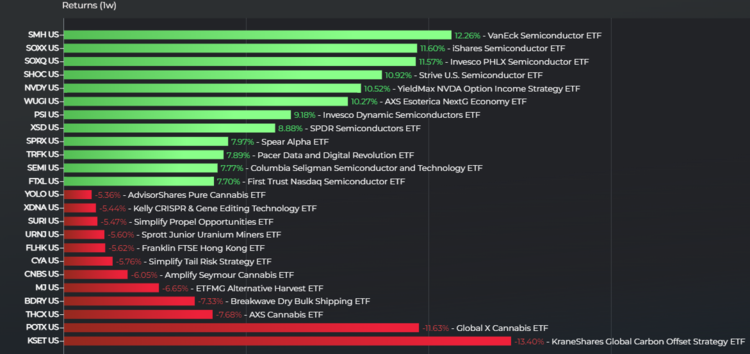

Leveraged Semiconductor Etfs A Pre Surge Sell Off Explained

May 13, 2025

Leveraged Semiconductor Etfs A Pre Surge Sell Off Explained

May 13, 2025 -

Analysis Philippine Midterm Elections Dutertes Impact On Marcoss Campaign

May 13, 2025

Analysis Philippine Midterm Elections Dutertes Impact On Marcoss Campaign

May 13, 2025 -

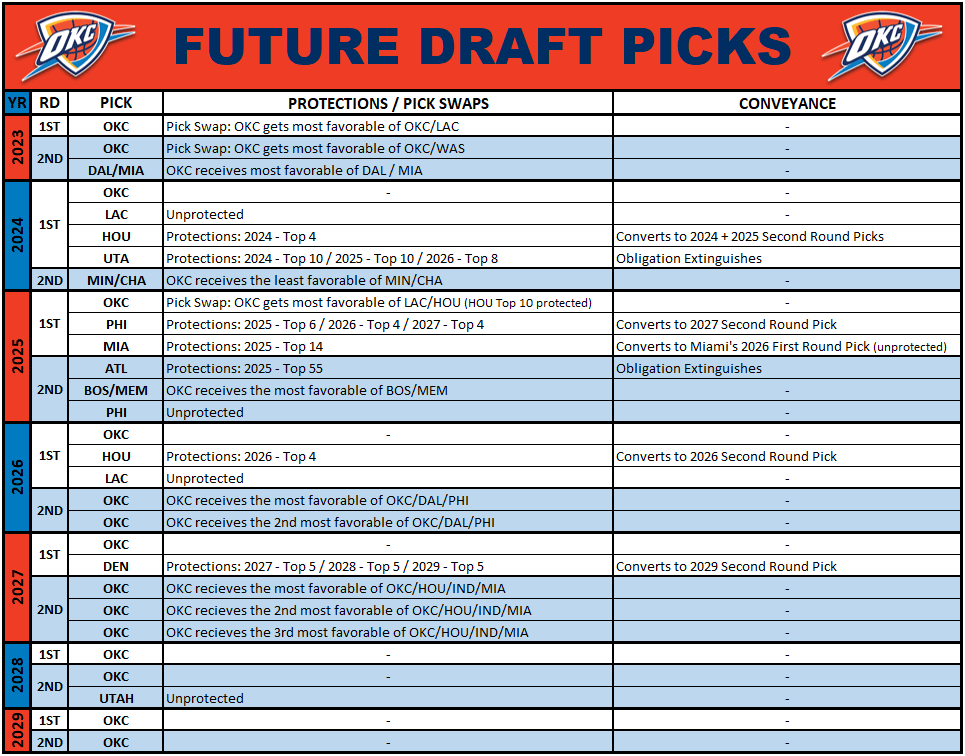

Thunder Draft Positioning Remains Uncertain Post Season

May 13, 2025

Thunder Draft Positioning Remains Uncertain Post Season

May 13, 2025