Johansson Vs. OpenAI: The Debate Over AI Voice Cloning And Consent

The Scarlett Johansson Case: A Turning Point in the AI Voice Cloning Debate

While the specifics of the alleged unauthorized use of Scarlett Johansson's voice by OpenAI remain somewhat opaque due to a lack of public legal filings, the situation serves as a crucial case study. The controversy ignited a public conversation about the lack of regulatory frameworks surrounding AI voice cloning and the potential for exploitation. The core issue revolves around the use of a celebrity's voice – a unique and valuable asset – without their knowledge or permission.

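- What specific claims were made by Johansson (or her representatives)? No lawsuit has been made public. In a statement released in May 2024, Johansson said she had declined an offer from OpenAI to voice ChatGPT, and that the "Sky" voice the company later demonstrated sounded strikingly similar to her own. She said her lawyers had written to OpenAI asking how the voice was created. The episode raises concerns about potential commercial exploitation and the violation of her likeness rights.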

- What was OpenAI's response? OpenAI publicly denied that the voice was Johansson's, saying that "Sky" was recorded by a different professional actress and was never intended to resemble her, and it paused use of the voice. Even so, the situation underscores the need for greater transparency and robust consent mechanisms within the AI development process.

- What are the potential legal ramifications? No court has ruled on the dispute, but it could still influence new laws and regulations governing the use of personal data, including voice data, in the training of AI models. Existing laws on intellectual property and the right of publicity could also be interpreted in novel ways in the context of AI voice cloning.

The Ethical Implications of AI Voice Cloning without Consent

The ethical implications of AI voice cloning without consent are profound and far-reaching. The technology allows for the creation of incredibly realistic "deepfake" voices, capable of impersonating individuals convincingly. This raises serious concerns about the erosion of personal identity and the potential for widespread deception.

- What are "deepfake" voices, and how can they be misused? Deepfake voices use AI algorithms to synthesize audio that closely mimics a person's speech patterns, intonation, and even emotional nuances. This capability can be used for malicious purposes, including financial fraud (e.g., convincing someone to transfer money), identity theft, and political disinformation.

- What is the psychological impact on people whose voices are cloned without permission? Imagine having your voice used to spread misinformation, endorse products you oppose, or impersonate you in a compromising situation. This can cause significant emotional distress, damage your reputation, and lead to feelings of violation and powerlessness.

- Why are marginalized groups especially vulnerable? Marginalized groups, already facing discrimination and social inequality, are particularly exposed to voice cloning abuse. Cloned voices might be used to spread harmful stereotypes, incite hatred, or perpetuate injustice.

The Blurred Lines of Intellectual Property Rights in AI Voice Cloning

The legal landscape surrounding voice cloning is complex and largely uncharted. Current copyright laws primarily address written and artistic works, leaving the ownership and control of one's voice in a legal grey area.

- Do existing copyright laws apply to AI-generated voice clones? Existing copyright laws struggle to address the challenges presented by AI-generated voice clones. The question of who owns the rights (the individual whose voice was cloned, the AI developer, or perhaps neither) remains unresolved.

- Is new legislation needed? New legislation is urgently needed to clarify intellectual property rights related to AI voice cloning and to establish clear guidelines on consent, ownership, and permissible use.

- How can rights be enforced in a decentralized digital world? Enforcing intellectual property rights online is notoriously difficult, and the challenge is amplified by the ease with which AI voice clones can be created and disseminated.

The Role of AI Companies in Protecting User Consent and Privacy

AI companies like OpenAI bear a significant responsibility in ensuring the ethical development and deployment of AI voice cloning technology. Their actions have far-reaching consequences for individual rights and societal well-being.

- What are the current policies of OpenAI and other companies on data usage and consent? Many AI companies are still developing their policies on data usage and consent for AI voice cloning. They need to be more transparent and to publish detailed information about how they collect and use data.

- Why are stronger transparency and accountability measures needed? AI companies should clearly explain how user data is collected, used, and protected. Accountability mechanisms are also crucial for addressing misuse of the technology.

- What role can ethical guidelines and industry self-regulation play? Industry self-regulation, complemented by ethical guidelines, could help promote responsible innovation and prevent the misuse of AI voice cloning.

The Future of AI Voice Cloning: Balancing Innovation with Ethical Considerations

The future of AI voice cloning depends on our ability to balance innovation with ethical considerations. Responsible practices and robust solutions are crucial to mitigate the risks associated with this powerful technology.

-

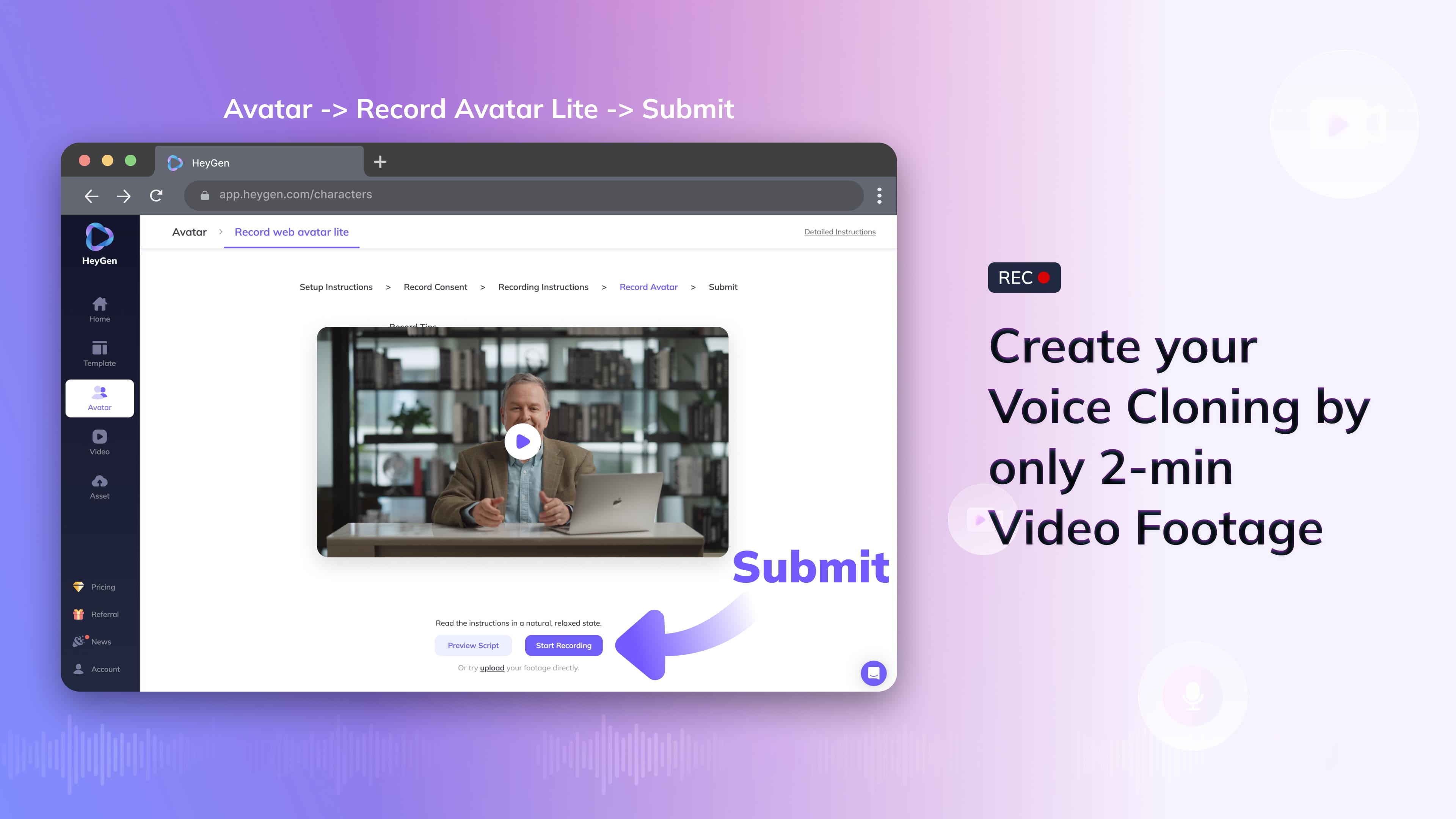

Explore the potential of biometric voice authentication to prevent unauthorized cloning. Biometric voice authentication systems can be used to verify the identity of individuals, potentially preventing unauthorized cloning and misuse of their voices.

- What should consent mechanisms look like? They should be clear, unambiguous, and easy to understand, so that individuals have full control over how their voices are used in the context of AI.

- What role do public education and awareness play? Public awareness and education campaigns can help people understand the risks and benefits of this technology, so that they can make informed decisions about how their voices are used.

Conclusion

The Johansson vs. OpenAI debate underscores the urgent need for careful consideration of the ethical and legal implications surrounding AI voice cloning. The lack of explicit consent poses significant risks to individual privacy, intellectual property, and psychological well-being. Stronger regulations, robust industry self-regulation, and widespread public awareness are crucial in ensuring the responsible development and use of this powerful technology.

Call to Action: The future of AI voice cloning hinges on a collective commitment to ethical development. Join the conversation about AI voice cloning and consent, and help shape a future where innovation is balanced with responsible use.

Featured Posts

-

Strength In Captivity A Fathers Message To His Son

May 13, 2025

Strength In Captivity A Fathers Message To His Son

May 13, 2025 -

Pieterburen Seal Rescue Center Closes After 50 Years Final Seals Released

May 13, 2025

Pieterburen Seal Rescue Center Closes After 50 Years Final Seals Released

May 13, 2025 -

Fords Fading Legacy Byds Electric Vehicle Expansion In Brazil

May 13, 2025

Fords Fading Legacy Byds Electric Vehicle Expansion In Brazil

May 13, 2025 -

Luchshie Boeviki S Dzherardom Batlerom Podborka Filmov

May 13, 2025

Luchshie Boeviki S Dzherardom Batlerom Podborka Filmov

May 13, 2025 -

Los Angeles Dodgers Set To Compete For Next Major League Baseball Free Agent

May 13, 2025

Los Angeles Dodgers Set To Compete For Next Major League Baseball Free Agent

May 13, 2025

Latest Posts

-

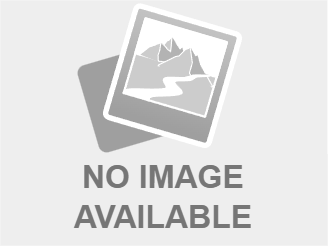

Analyzing The Walleye Credit Cut Realigning Commodities Teams With Core Group Priorities

May 13, 2025

Analyzing The Walleye Credit Cut Realigning Commodities Teams With Core Group Priorities

May 13, 2025 -

The Impact Of Walleye Credit Cuts On Commodities Teams And Core Client Groups

May 13, 2025

The Impact Of Walleye Credit Cuts On Commodities Teams And Core Client Groups

May 13, 2025 -

Walleye Credit Reduction Restructuring Commodities Teams For Core Group Success

May 13, 2025

Walleye Credit Reduction Restructuring Commodities Teams For Core Group Success

May 13, 2025 -

Optimizing Commodities Team Efficiency A Focus On Core Groups Following Walleye Credit Cuts

May 13, 2025

Optimizing Commodities Team Efficiency A Focus On Core Groups Following Walleye Credit Cuts

May 13, 2025 -

Strategic Credit Adjustments How Walleye Impacts Commodities Teams Core Group Focus

May 13, 2025

Strategic Credit Adjustments How Walleye Impacts Commodities Teams Core Group Focus

May 13, 2025